AI is moving fast into every industry, from creative work to core business functions, and inventory management is no exception. Industry reports suggest it could reduce inventory management costs by 20% or more.

In layperson's terms, you can use AI as a layer between your raw data and the end user. For example, you could build an AI knowledge base. When an employee needs stock information, instead of asking the manager for every small detail, they get it directly from the AI-powered system. It’s like giving everyone a direct hotline to the data they need.

This is a very basic example, and much bigger claims are being made about AI. In this breakdown, we will see how and where AI is making an impact in inventory management, and where it still breaks down.

The areas most impacted by AI are marketing, sales, and customer service, where more than 70% of professionals use AI in their everyday work.

AI in inventory management can process data at a scale and speed humans can’t. It converts datasets into insights and executes routine tasks with high precision. This shifts the manager’s role from data processor to decision validator: the system handles the calculation, and the human handles the context.

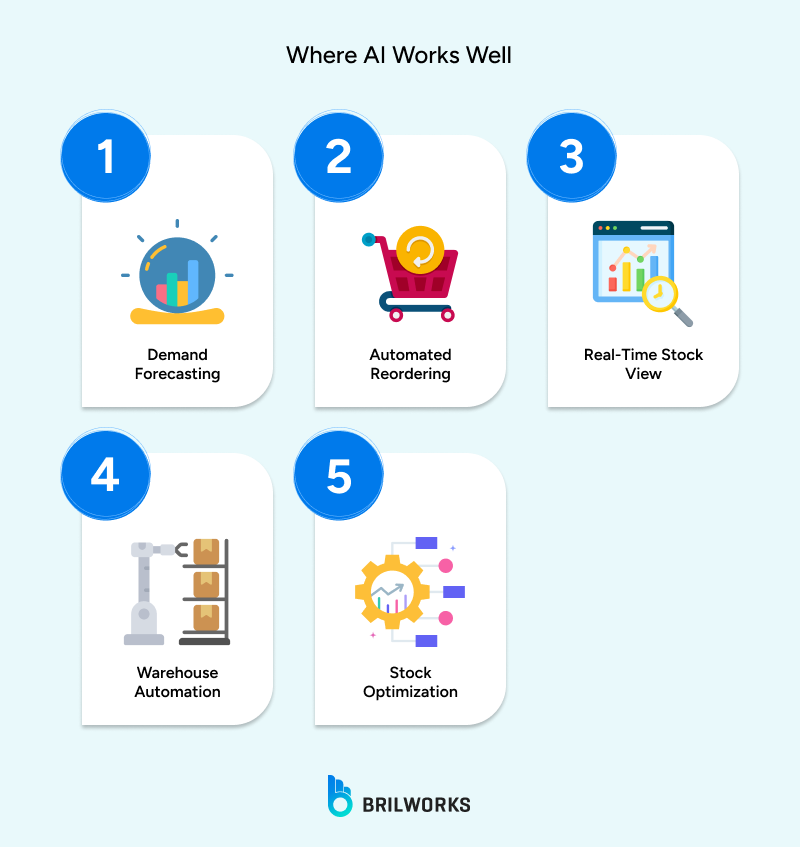

These are the core areas where it is delivering measurable results.

Demand forecasting is the most popular application of AI in inventory management. AI can process large volumes of historical data quickly and produce statistically driven forecasts that help you address excess inventory and stockouts. Those forecasts can then be used to decide optimal order quantities.
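As a rough illustration of how a forecast feeds an order quantity, the sketch below uses a plain moving average as a stand-in for the AI model. The function names, the 4-week window, and the lead-time and safety-stock figures are all assumptions made for the example, not part of any specific tool.

```python
import math
from statistics import mean

def moving_average_forecast(weekly_sales, window=4):
    """Forecast next week's demand as the mean of the last `window` weeks."""
    if len(weekly_sales) < window:
        raise ValueError("not enough history for the chosen window")
    return mean(weekly_sales[-window:])

def suggested_order_qty(forecast, lead_time_weeks, on_hand, safety_stock):
    """Cover forecast demand over the lead time plus a buffer, minus stock on hand."""
    target = forecast * lead_time_weeks + safety_stock
    return max(0, math.ceil(target - on_hand))

weekly_sales = [120, 135, 128, 140, 150, 145]  # hypothetical units sold per week
forecast = moving_average_forecast(weekly_sales)
order_qty = suggested_order_qty(forecast, lead_time_weeks=2, on_hand=180, safety_stock=60)
print(f"forecast={forecast}, suggested order={order_qty}")
```

A real system would replace the moving average with a trained model, but the hand-off from forecast to quantity would look broadly similar.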

For warehouse optimization, AI can be integrated so that it retrieves order data and product dimensions and algorithmically assigns optimal storage locations. This is one of several ways to optimise warehouse operations using AI.
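To make the slotting idea concrete, here is a minimal greedy heuristic: the fastest-moving products get the nearest storage location they physically fit into. The field names (`picks_per_week`, `volume_m3`, `distance_m`, `capacity_m3`) and the sample data are hypothetical, and production slotting engines weigh many more factors.

```python
def assign_slots(skus, slots):
    """Greedy slotting: fastest-moving SKUs get the nearest slot they fit in.

    skus:  list of dicts with 'sku', 'picks_per_week', 'volume_m3'
    slots: list of dicts with 'slot', 'distance_m', 'capacity_m3'
    """
    free = sorted(slots, key=lambda s: s["distance_m"])  # nearest first
    assignment = {}
    for item in sorted(skus, key=lambda x: x["picks_per_week"], reverse=True):
        for slot in free:
            if slot["capacity_m3"] >= item["volume_m3"]:
                assignment[item["sku"]] = slot["slot"]
                free.remove(slot)  # each slot holds one SKU in this sketch
                break
    return assignment

skus = [
    {"sku": "A", "picks_per_week": 40, "volume_m3": 0.5},
    {"sku": "B", "picks_per_week": 90, "volume_m3": 1.2},
    {"sku": "C", "picks_per_week": 10, "volume_m3": 0.3},
]
slots = [
    {"slot": "S1", "distance_m": 5, "capacity_m3": 0.6},
    {"slot": "S2", "distance_m": 12, "capacity_m3": 1.5},
    {"slot": "S3", "distance_m": 20, "capacity_m3": 1.0},
]
assignment = assign_slots(skus, slots)
print(assignment)
```

Here the busiest item, B, is too large for the closest slot, so it takes the next one and the closest slot goes to A.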

Anomaly detection is another area where AI is effective, because it can monitor activity round the clock and flag patterns that deviate from the norm.

Research on cloud and security applications shows threat detection accuracy of ~95%, with mean time to detect (MTTD) and mean time to respond (MTTR) decreasing by 60–70% using AI approaches compared with older methods.
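For inventory data, the simplest version of this kind of flagging is a statistical threshold. The sketch below marks new values that sit more than three standard deviations from the historical mean. The threshold, the function name, and the sample write-off figures are assumptions, and a z-score is only a simple stand-in for what an AI-based detector would do.

```python
from statistics import mean, stdev

def flag_anomalies(history, new_values, threshold=3.0):
    """Return new values more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any different value is unusual.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]

daily_write_offs = [2, 3, 1, 4, 2, 3, 2, 3]  # hypothetical units written off per day
flagged = flag_anomalies(daily_write_offs, [3, 25, 2])
print(flagged)
```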

Return processing is another use case. If data is clean and structured, AI can simplify reverse logistics. Large retailers like Walmart use AI to optimize routing for returned goods.

By integrating natural language processing with inventory systems, you can let employees ask questions in plain language, such as whether an item is in stock. Knowledge-based systems are very effective when data is clean and centralised.
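The shape of such a system can be sketched without any real language model. The example below is a deliberately crude stand-in: it matches known product names in the question and looks up their stock. The `STOCK` table and the function name are hypothetical, and a real deployment would use an actual NLP layer on top of the live inventory system.

```python
import re

# Hypothetical stock table; in practice this would come from the inventory system.
STOCK = {"blue widget": 42, "red widget": 0, "steel bracket": 310}

def answer_stock_question(question, stock=STOCK):
    """Match known product names in a plain-language question and report stock."""
    text = re.sub(r"[^a-z0-9 ]", " ", question.lower())
    matches = [name for name in stock if name in text]
    if not matches:
        return "Sorry, I could not find that product."
    return "; ".join(f"{name}: {stock[name]} in stock" for name in matches)

print(answer_stock_question("How many blue widgets do we have?"))
```

Note how the lookup only works because the product names are consistent, which is the clean, centralised data the approach depends on.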

There is a lot of hype around AI adoption across business operations, including inventory. But AI stumbles where data is not structured, clean, and centralised, because it operates entirely on data.

For example, as a seller, if your process relies on a verbal agreement with a supplier, that intelligence is invisible to the system.

Forecasting is another weak spot. AI forecasts extrapolate from past patterns, so when you need to break the pattern (like launching a radically new product), it cannot factor in what has never happened before.

Achieving the "perfect" forecast is only possible when you have perfect, unified data from every corner of your business.

This is an immense effort, and building this kind of infrastructure can cost millions. The ROI isn't just in the algorithm; it's in the years of systems and process overhaul needed to feed it. Therefore, adoption is high, but measurable success is narrow.

Research indicates that roughly half of all AI projects actually make it into production, with the journey taking an average of eight months. Even more telling, an estimated 30% of Generative AI proofs-of-concept are abandoned due to unclear value, data issues, and unmanaged costs.

Experimentation is easy; creating a repeatable factory for data, evaluation, and governance is hard.

In practice, people use AI outputs they don't fully trust. A stark example is in software development, where surveys show that while a significant portion of new code is AI-generated, most developers do not fully trust it, and only about half consistently verify it before use.

This leads to a silent injection of risk. The tool meant to accelerate work instead creates downstream churn and compromises quality.

The ease of use creates a major security crisis. Employees routinely feed sensitive company data, such as customer details, financials, and proprietary code, into unmanaged, personal AI accounts.

Track where your team spends the most time. The best first target is often demand planning for your top 20% of SKUs. These items have the most historical data (giving AI something to learn from) and the highest financial impact (making the effort worthwhile). Ignore new or erratic products for now.
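Picking out that top slice is a simple ranking exercise. The sketch below ranks SKUs by revenue and keeps the top 20%. Ranking by revenue is an assumption here (you might rank by margin or volume instead), and the figures are made up.

```python
def top_skus_by_revenue(revenue_by_sku, share_pct=20):
    """Return the top `share_pct` percent of SKUs by revenue (at least one)."""
    ranked = sorted(revenue_by_sku, key=revenue_by_sku.get, reverse=True)
    # Integer ceiling division avoids floating-point surprises.
    count = max(1, (len(ranked) * share_pct + 99) // 100)
    return ranked[:count]

revenue = {  # hypothetical annual revenue per SKU
    "A": 50000, "B": 12000, "C": 90000, "D": 3000, "E": 7000,
    "F": 1500, "G": 22000, "H": 800, "I": 4000, "J": 600,
}
pilot_skus = top_skus_by_revenue(revenue)
print(pilot_skus)
```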

The first investment isn’t in AI software; it’s in your data’s hygiene and accessibility. Before any vendor demo, answer:

Can you pull clean, historical stock levels, sales, and lead times for those key SKUs into one spreadsheet? If not, your initial project is a data cleanup, not an AI deployment. This step separates realistic plans from fantasy.
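One way to answer that question honestly is to count the gaps. The sketch below assumes your history has been exported as one row per SKU per period, then reports how many rows each key SKU has and how many are missing a required field. The field names (`stock_level`, `units_sold`, `lead_time_days`) are hypothetical and would need to match your own export.

```python
REQUIRED_FIELDS = ("stock_level", "units_sold", "lead_time_days")

def readiness_report(rows, key_skus):
    """For each key SKU, count its rows and the rows missing a required field."""
    report = {sku: {"rows": 0, "incomplete": 0} for sku in key_skus}
    for row in rows:
        sku = row.get("sku")
        if sku not in report:
            continue  # not one of the SKUs under review
        report[sku]["rows"] += 1
        # A zero is a valid value; only None or an empty string counts as missing.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report[sku]["incomplete"] += 1
    return report

rows = [
    {"sku": "A", "week": "W01", "stock_level": 100, "units_sold": 20, "lead_time_days": 14},
    {"sku": "A", "week": "W02", "stock_level": 80, "units_sold": None, "lead_time_days": 14},
    {"sku": "B", "week": "W01", "stock_level": 50, "units_sold": 0, "lead_time_days": ""},
]
report = readiness_report(rows, key_skus=["A", "B", "C"])
print(report)
```

A SKU with zero rows, or a high share of incomplete rows, points to a data cleanup project before any AI deployment.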

When evaluating tools, stop looking at features. Start by demanding from vendors: “Show me a benchmark report for a business like mine.” Then, run a controlled, parallel test. Use AI to generate forecasts or reorder plans for one product category, but do not let it execute.

Compare its predictions and suggestions to your existing process over 8-12 weeks. The goal is to measure accuracy and practicality, not technical sophistication.
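For the accuracy part of that comparison, one common yardstick is mean absolute percentage error (MAPE), calculated for both the existing process and the AI tool against what actually sold. MAPE is a choice made for this sketch, not something the test prescribes, and the eight weeks of figures are invented.

```python
def mape(actuals, forecasts):
    """Mean absolute percentage error, skipping periods with zero actual demand."""
    pairs = [(a, f) for a, f in zip(actuals, forecasts) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actuals to compare against")
    return 100 * sum(abs(a - f) / a for a, f in pairs) / len(pairs)

actual   = [100, 120, 80, 110, 95, 130, 105, 90]   # hypothetical weekly demand
existing = [90, 130, 100, 100, 110, 115, 95, 100]  # current planning process
ai_tool  = [105, 115, 85, 108, 92, 126, 108, 93]   # AI forecast, not executed

print(f"existing process MAPE: {mape(actual, existing):.1f}%")
print(f"AI tool MAPE:          {mape(actual, ai_tool):.1f}%")
```

Accuracy is only half of the goal stated above; practicality still has to be judged by the people who would act on the suggestions.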

Design the human role first. Before launch, define the clear exception criteria that will trigger a human override—for example, “If the AI suggests an order quantity that deviates by more than 40% from our historical average, flag for review.” This ensures the system augments judgment rather than replacing it blindly.
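The 40% rule in that example translates almost directly into code. The sketch below is one possible reading of it. The function name is hypothetical, and treating a missing baseline as "always review" is an added assumption.

```python
def needs_review(suggested_qty, historical_avg, max_deviation=0.40):
    """Flag an AI-suggested order quantity that strays too far from the historical average."""
    if historical_avg <= 0:
        return True  # assumption: with no usable baseline, always send to a human
    deviation = abs(suggested_qty - historical_avg) / historical_avg
    return deviation > max_deviation

print(needs_review(150, 100))  # 50% above average
print(needs_review(130, 100))  # 30% above average
```

Keeping the threshold as a parameter makes it easy to tighten or loosen the rule as trust in the system changes.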

The initial success metric should be time saved and decisions accelerated for your planner or buyer. If the AI tool doesn’t reduce their daily administrative workload within the first quarter, it has failed, regardless of its long-term forecast accuracy.

Tangible time savings create internal advocacy; abstract ROI projections create skepticism.

Pick one repetitive, high-volume decision like setting weekly order quantities for a stable product line.

Apply AI there as a behind-the-scenes recommendation engine. Validate its output against human judgment for one full business cycle.

You haven’t “launched AI”; you’ve started a structured test to see if it can be a competent assistant. Everything scales from that point of proven, tangible utility.

While debates continue about its ultimate value, one fact is clear: it reliably automates a growing list of routine tasks. Today, competing without it is increasingly difficult.

The integration of AI into inventory management is no longer a question of if but how. Its role has solidified as a powerful, analytical engine designed to eliminate guesswork and automate high-volume decisions.

However, the journey from adoption to impact is where most initiatives falter. Success does not come from the technology alone, but from the often-unseen foundation of clean data, integrated systems, and deliberate process redesign.

Ultimately, AI in inventory is not about replacing human judgment but about arming it with better intelligence. The competitive advantage will go not to those who experiment the fastest, but to those who execute with the most discipline.

Get In Touch

Contact us for your software development requirements