
Modern products are moving into a world where users expect machine intelligence. AI-powered chatbots with jaw-dropping conversational skills now seem like an old idea because of the rise of agentic tools.

AI is being integrated across product development stages, from pre-development through post-development. As Satya Nadella put it, today’s apps are basically “CRUD and business logic,” and AI is about to take over that logic.

End users now expect digital products to be highly dynamic and to adapt to their needs in real time. Building these kinds of apps takes significant backend engineering effort. AI makes it relatively easy to build that logic and create a flexible, intelligent system working behind the scenes.

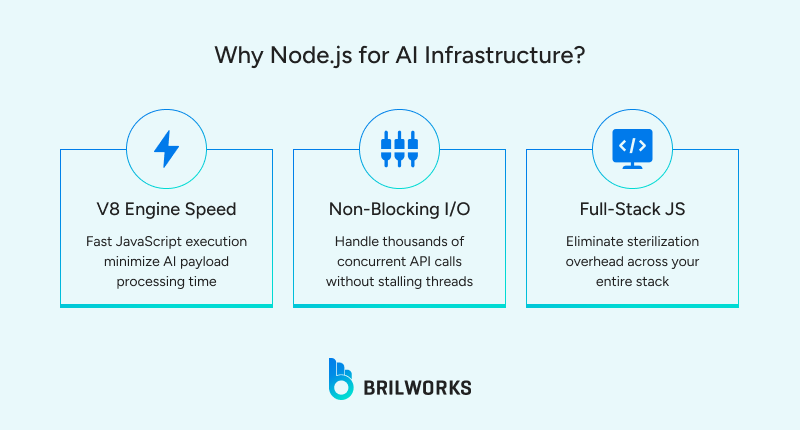

Node.js, a favorite among developers for web projects, fits this environment well. It handles large numbers of concurrent I/O operations efficiently and gives you a single JavaScript or TypeScript stack from end to end. But you do not get these benefits automatically. You have to build the backend with a tight focus.

Many teams stumble at the first step: they try to put too many AI features into the MVP, which leads to unnecessary engineering work. Pick one AI capability with a directly measurable use case. Keep it small. Keep it scoped. Examples include:

Classifying new support tickets into categories

Scoring leads based on form inputs

Summarizing meeting notes

Detecting anomalies in transaction logs

You do not need a complex model for the MVP. You need a feature that proves value. When you choose the feature, define what success looks like. For example:

Predictions must return in under 300 ms

Accuracy must reach a baseline target

The feature must support a defined number of requests per minute
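To make targets like these testable rather than aspirational, you can encode them and check them against measurements from a load test or shadow traffic. The sketch below is one minimal way to do that; the `SuccessCriteria` and `Sample` shapes, the nearest-rank p95 latency, and the example thresholds are assumptions for illustration, not a standard.

```typescript
// Hypothetical shape for the MVP's success targets.
interface SuccessCriteria {
  maxP95LatencyMs: number;      // e.g. 300 ms
  minAccuracy: number;          // baseline accuracy, between 0 and 1
  minRequestsPerMinute: number; // sustained throughput target
}

// One measured prediction from a load test or shadow run (hypothetical).
interface Sample {
  latencyMs: number;
  correct: boolean;
}

// Nearest-rank percentile over a non-empty list of numbers.
function percentile(values: number[], p: number): number {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(0, rank - 1)];
}

// Compares measurements collected over `windowMinutes` against the targets.
function evaluate(samples: Sample[], windowMinutes: number, criteria: SuccessCriteria) {
  const p95 = percentile(samples.map((s) => s.latencyMs), 95);
  const accuracy = samples.filter((s) => s.correct).length / samples.length;
  const throughput = samples.length / windowMinutes;
  return {
    p95,
    accuracy,
    throughput,
    passed:
      p95 <= criteria.maxP95LatencyMs &&
      accuracy >= criteria.minAccuracy &&
      throughput >= criteria.minRequestsPerMinute,
  };
}
```

Using a percentile instead of an average keeps a few slow outliers from hiding behind many fast responses.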

If you do not choose a tight scope, everything else becomes noise. AI backends live or die by clarity.

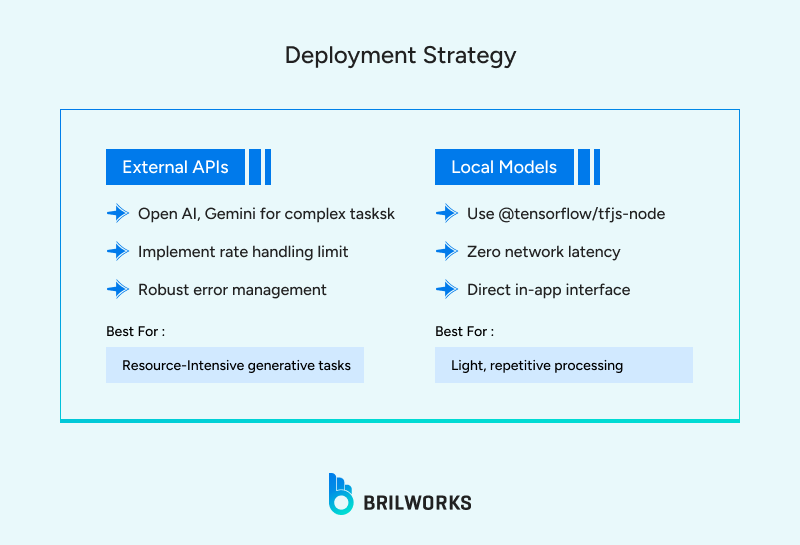

Deciding where the model runs is one of the most important decisions for an AI MVP. You have two paths: call a hosted model through an external API, or run the model locally. Each path changes your architecture and cost pattern.

Most generative tasks and large models should run outside your system. There is no reason to self-host large language models in the MVP phase unless you need control over the weights.

The benefit of using APIs from providers such as OpenAI, Google (Gemini), or Anthropic is that you avoid the cost of maintaining model infrastructure. Plus, the providers ship model upgrades regularly, which lets you experiment faster.

However, this approach introduces constraints that you cannot ignore:

You must handle API rate limits

You must implement strong error management

You must build retry rules that do not block the event loop

Latency is tied to the network
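A minimal sketch of a retry rule that does not block the event loop, assuming a hypothetical `HttpError` that carries the provider's HTTP status. Waiting happens in a timer-based promise, so while one request backs off, Node.js keeps serving other requests. Treating 429 (rate limited) and 5xx as retryable is a common convention, not any specific provider's contract; some providers also send a `Retry-After` header, which this sketch ignores for brevity.

```typescript
// Hypothetical error type carrying the HTTP status returned by the AI provider.
class HttpError extends Error {
  readonly status: number;
  constructor(status: number) {
    super(`HTTP ${status}`);
    this.status = status;
  }
}

interface RetryOptions {
  retries: number;     // extra attempts after the first call
  baseDelayMs: number; // backoff before the first retry; doubles on each retry
}

// Rate limits (429) and server errors (5xx) are worth retrying; other 4xx errors are not.
function isRetryable(err: unknown): boolean {
  return err instanceof HttpError && (err.status === 429 || err.status >= 500);
}

// Non-blocking sleep: schedules a timer instead of busy-waiting.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function withRetry<T>(call: () => Promise<T>, opts: RetryOptions): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await call();
    } catch (err) {
      if (attempt >= opts.retries || !isRetryable(err)) throw err;
      // Exponential backoff with jitter so many clients do not retry in lockstep.
      const delay = opts.baseDelayMs * 2 ** attempt;
      await sleep(delay / 2 + Math.random() * (delay / 2));
    }
  }
}
```

Capping `retries` matters as much as the backoff: unbounded retries against a rate-limited API only deepen the queue.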

The second path is to run the model locally. When the model is lightweight enough, you can run it directly inside your Node.js process. This approach works well for small classification tasks, basic clustering logic, compact text-processing models, or even simple numeric predictors. It keeps everything local, giving you more control over how the model interacts with your backend.

Node.js already supports this setup through mature ML runtimes like @tensorflow/tfjs-node, onnxruntime-node, and lightweight utilities such as ml.js. These libraries give you enough power to keep small models fully inside your backend.
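For the simple numeric predictor case, no runtime library is needed at all. The sketch below scores leads with a logistic regression computed in-process; the feature names and weight values are hypothetical placeholders, and in practice you would export trained weights (or an ONNX model for onnxruntime-node) from your training pipeline.

```typescript
// Hypothetical lead features collected from a form.
interface LeadFeatures {
  pagesVisited: number;
  formCompleted: 0 | 1;
  employees: number;
}

// Illustrative weights only; real values would come from offline training.
const WEIGHTS: Record<keyof LeadFeatures, number> = {
  pagesVisited: 0.3,
  formCompleted: 1.2,
  employees: 0.002,
};
const BIAS = -2;

const sigmoid = (z: number) => 1 / (1 + Math.exp(-z));

// Logistic regression: probability = sigmoid(w · x + b), computed in the Node.js process.
function scoreLead(lead: LeadFeatures): number {
  let z = BIAS;
  for (const key of Object.keys(WEIGHTS) as (keyof LeadFeatures)[]) {
    z += WEIGHTS[key] * lead[key];
  }
  return sigmoid(z);
}
```

A model this small costs microseconds per call, so it can safely run on the main thread; the thread-planning concerns start once inference becomes CPU-heavy.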

But you have to plan for CPU usage. Heavy computation should not run on the main thread: if it does, the event loop blocks and every user feels the slowdown.

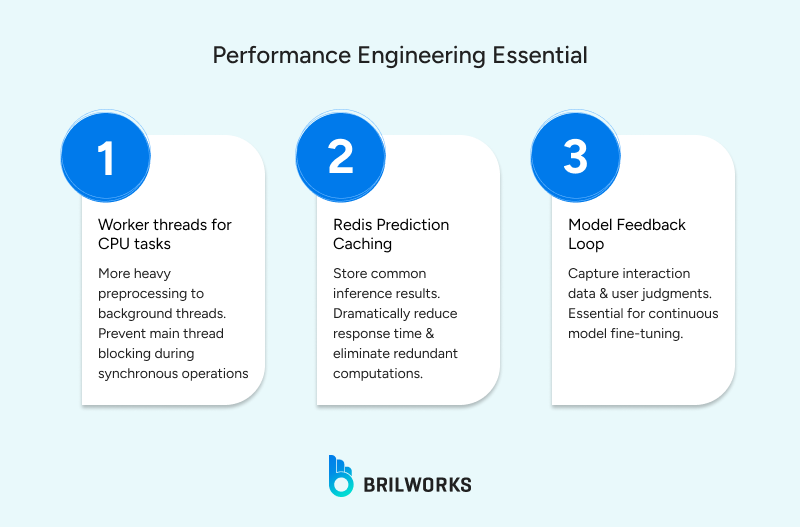

You cannot build an AI backend in Node.js if you ignore thread behavior. AI workloads often involve CPU work. If you run those operations in the main thread, your API crawls. Below are the techniques you can use to manage this problem properly.

Worker Threads allow you to run CPU work without blocking the event loop. They are ideal for:

Data preprocessing

Model loading

Model inference for medium-sized local models

Feature extraction

Complex scoring calculations

In this setup, the main thread handles HTTP requests, while heavy tasks are passed to a worker that sends the results back when the computation is done. This keeps the API responsive. If you plan to scale, you can create a pool of workers.
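A minimal sketch of this hand-off using Node's built-in `worker_threads` module. To keep it in one file, the worker body is passed as a string with `eval: true`; in a real project it would usually live in its own file. The computation inside the worker is a hypothetical stand-in for preprocessing or inference.

```typescript
import { Worker } from "node:worker_threads";

// Worker body (plain JavaScript). It reads its input from workerData,
// does the CPU-heavy work off the main thread, and posts the result back.
const WORKER_SOURCE = `
  const { parentPort, workerData } = require("worker_threads");
  let total = 0;
  for (const v of workerData) total += Math.sqrt(v) * Math.log1p(v); // stand-in for real work
  parentPort.postMessage(total);
`;

// The main thread only awaits the result; its event loop stays free for HTTP requests.
function runInWorker(values: number[]): Promise<number> {
  return new Promise((resolve, reject) => {
    const worker = new Worker(WORKER_SOURCE, { eval: true, workerData: values });
    worker.once("message", resolve);
    worker.once("error", reject);
    worker.once("exit", (code) => {
      if (code !== 0) reject(new Error(`Worker exited with code ${code}`));
    });
  });
}
```

Spawning a worker per call keeps the sketch short, but each spawn has a startup cost; under steady traffic, a pool of long-lived workers is the usual next step.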

Caching is one of the fastest ways to cut latency and reduce cost. When many AI calls repeat the same input, you can cache predictions in Redis.

A Redis layer lets you store the computed result. When the same request arrives, you serve the cached value instantly. This can save hundreds of milliseconds per call and reduce billable API usage.

Add clear rules:

Define cache keys based on structured input

Set TTLs to remove stale data

Track cache hit rates
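These three rules map onto a small cache wrapper. In this sketch, `KeyValueStore` is a hypothetical abstraction: a Redis-backed version would implement `get` with Redis `GET` and `set` with `SET key value EX ttl` (ioredis, for example, accepts `redis.set(key, value, "EX", ttlSeconds)`), while the in-memory store shown here is only for local testing. Keys are a SHA-256 hash of the input serialized with sorted object keys, so logically identical inputs share one entry.

```typescript
import { createHash } from "node:crypto";

// Hypothetical storage abstraction; a Redis adapter would implement it in production.
interface KeyValueStore {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

// In-memory stand-in with TTL expiry, for tests and local development only.
class MemoryStore implements KeyValueStore {
  private readonly data = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<string | null> {
    const entry = this.data.get(key);
    if (!entry || entry.expiresAt <= Date.now()) return null;
    return entry.value;
  }

  async set(key: string, value: string, ttlSeconds: number): Promise<void> {
    this.data.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
  }
}

// Serialize with sorted object keys so logically equal inputs produce the same string.
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const fields = Object.keys(obj)
      .sort()
      .map((k) => `${JSON.stringify(k)}:${stableStringify(obj[k])}`);
    return `{${fields.join(",")}}`;
  }
  return JSON.stringify(value);
}

class PredictionCache<TOut> {
  hits = 0;
  misses = 0;
  private readonly store: KeyValueStore;
  private readonly prefix: string;     // e.g. model name + version, so a new model never reads old entries
  private readonly ttlSeconds: number; // stale predictions age out after this

  constructor(store: KeyValueStore, prefix: string, ttlSeconds: number) {
    this.store = store;
    this.prefix = prefix;
    this.ttlSeconds = ttlSeconds;
  }

  // Cache key derived from the structured input.
  keyFor(input: unknown): string {
    return `${this.prefix}:${createHash("sha256").update(stableStringify(input)).digest("hex")}`;
  }

  async getOrCompute(input: unknown, compute: () => Promise<TOut>): Promise<TOut> {
    const key = this.keyFor(input);
    const cached = await this.store.get(key);
    if (cached !== null) {
      this.hits++;
      return JSON.parse(cached) as TOut;
    }
    this.misses++;
    const result = await compute();
    await this.store.set(key, JSON.stringify(result), this.ttlSeconds);
    return result;
  }

  // Hit rate to export as a metric.
  hitRate(): number {
    const total = this.hits + this.misses;
    return total === 0 ? 0 : this.hits / total;
  }
}
```

Including the model version in the key prefix is a design choice worth keeping: when the model changes, old predictions simply stop matching instead of being served as if they came from the new model.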

Simple caching can reduce load dramatically. It also gives your MVP more stability from day one.

Many AI MVPs stall after launch because there is no way to improve the model. If you do not track user judgments, you cannot tune the model. If you do not track errors, you cannot diagnose drift. Your system should capture:

Input sent to the model

Output returned

User behavior after the prediction

Explicit feedback (if available)

Model confidence scores

All of this only becomes useful if you store it in a structured format. A simple schema might include:

request_id

user_id

timestamp

input

output

feedback

correctness_flag

This gives you the data to retrain or fine-tune models later. You also gain visibility into where the model fails. One common mistake is trying to store everything without structure.
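One way to pin the schema down is a typed record written as one JSON line per prediction. The field names follow the list above; `confidence` and `model_version` are additions in this sketch (covering the confidence scores mentioned earlier and recording which model produced each output), and the helper functions are hypothetical.

```typescript
import { randomUUID } from "node:crypto";

// One row per prediction; snake_case matches the schema fields.
interface PredictionRecord<TIn, TOut> {
  request_id: string;
  user_id: string;
  timestamp: string;                // ISO 8601
  model_version: string;            // assumption: added so outputs can be traced to a model
  input: TIn;
  output: TOut;
  confidence: number | null;        // model confidence score, if the model provides one
  feedback: string | null;          // explicit user feedback, filled in later
  correctness_flag: boolean | null; // null until the output has been judged
}

function createRecord<TIn, TOut>(
  userId: string,
  modelVersion: string,
  input: TIn,
  output: TOut,
  confidence: number | null,
): PredictionRecord<TIn, TOut> {
  return {
    request_id: randomUUID(),
    user_id: userId,
    timestamp: new Date().toISOString(),
    model_version: modelVersion,
    input,
    output,
    confidence,
    feedback: null,
    correctness_flag: null,
  };
}

// Feedback arrives after the prediction, so it is joined to the record by request_id.
function withFeedback<TIn, TOut>(
  record: PredictionRecord<TIn, TOut>,
  feedback: string,
  correct: boolean,
): PredictionRecord<TIn, TOut> {
  return { ...record, feedback, correctness_flag: correct };
}

// JSON Lines: one self-contained record per line, easy to append and to load for retraining.
const toJsonLine = (record: PredictionRecord<unknown, unknown>) => JSON.stringify(record) + "\n";
```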

When traffic spikes, a backend that cannot adapt is going to struggle fast. Containerizing your Node.js app with Docker is an excellent way to prevent environment drift and make builds reliable.

Once the app is containerized, deploy it on a platform that handles autoscaling well. AWS Lambda, Google Cloud Run, Kubernetes, and DigitalOcean App Platform all work well.

Here is a practical reference architecture that works for most AI MVPs built with Node.js.

The API layer is where requests enter the system, so a lightweight framework like Express or Fastify works well for routing and input validation. This layer should avoid heavy processing and hand off any demanding work to the worker threads. It also serves as a natural place to capture metrics, since it sees every request and response.
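A framework-agnostic sketch of what this layer does per request: validate input, hand the real work to another component, and record latency. The `classify` function passed in is a hypothetical hook (for example, a call into a worker pool or an AI service module), the size limit is an assumed value, and the in-memory latency list stands in for a real metrics client. An Express or Fastify route handler could call `handleClassify` and translate its result into a response.

```typescript
import { performance } from "node:perf_hooks";

// Shape the endpoint accepts after validation (hypothetical).
interface ClassifyRequest {
  ticketId: string;
  text: string;
}

type HandlerResult = { status: number; body: unknown };

const MAX_TEXT_LENGTH = 5000; // assumption: reject oversized payloads before they reach the model

// Minimal hand-rolled validation; returns the parsed request or an error message.
function parseClassifyRequest(body: unknown): ClassifyRequest | string {
  if (typeof body !== "object" || body === null) return "body must be a JSON object";
  const { ticketId, text } = body as Record<string, unknown>;
  if (typeof ticketId !== "string" || ticketId === "") return "ticketId must be a non-empty string";
  if (typeof text !== "string" || text.trim() === "") return "text must be a non-empty string";
  if (text.length > MAX_TEXT_LENGTH) return `text must be at most ${MAX_TEXT_LENGTH} characters`;
  return { ticketId, text };
}

// Stand-in for a metrics client: every request's latency is recorded, including failures.
const latenciesMs: number[] = [];

async function handleClassify(
  body: unknown,
  classify: (text: string) => Promise<string>,
): Promise<HandlerResult> {
  const started = performance.now();
  try {
    const parsed = parseClassifyRequest(body);
    if (typeof parsed === "string") return { status: 400, body: { error: parsed } };
    const label = await classify(parsed.text); // heavy work happens outside this layer
    return { status: 200, body: { ticketId: parsed.ticketId, label } };
  } catch {
    return { status: 502, body: { error: "classification failed" } };
  } finally {
    latenciesMs.push(performance.now() - started);
  }
}
```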

The AI service layer works best when each model or external API sits in its own module, which keeps the codebase easier to reason about. Clear boundaries prevent business logic from leaking into model operations.

This layer also handles practical concerns like retry logic for unstable calls, timeouts for slow responses, and any hooks you use for caching so the rest of the system stays simple and predictable.
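A sketch of one such module for a hypothetical ticket classifier. It enforces a timeout with an `AbortController` (so a `fetch`-based client can also cancel the underlying request) and exposes optional cache hooks. `callModel`, `CacheHooks`, and the 2-second default are assumptions for illustration; a retry wrapper would fit around `callModel` in the same place.

```typescript
// Hypothetical cache hooks the service calls; the implementation (e.g. Redis) lives elsewhere.
interface CacheHooks {
  get(text: string): Promise<string | null>;
  set(text: string, label: string): Promise<void>;
}

// Rejects if fn does not settle within ms, and aborts the signal handed to fn.
async function callWithTimeout<T>(fn: (signal: AbortSignal) => Promise<T>, ms: number): Promise<T> {
  const controller = new AbortController();
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => {
      controller.abort();
      reject(new Error(`model call timed out after ${ms} ms`));
    }, ms);
  });
  try {
    return await Promise.race([fn(controller.signal), timeout]);
  } finally {
    clearTimeout(timer);
  }
}

// The only surface the rest of the app sees: classify(text) returns a label. No business rules here.
function createTicketClassifier(
  callModel: (text: string, signal: AbortSignal) => Promise<string>,
  options: { timeoutMs?: number; cache?: CacheHooks } = {},
) {
  const timeoutMs = options.timeoutMs ?? 2000;
  return {
    async classify(text: string): Promise<string> {
      const cached = await options.cache?.get(text);
      if (cached) return cached;
      const label = await callWithTimeout((signal) => callModel(text, signal), timeoutMs);
      await options.cache?.set(text, label);
      return label;
    },
  };
}
```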

Worker threads give the system a separate space to handle tasks that need more compute power. A pool of workers spreads the load and keeps the app from slowing down.

Each worker initializes the model once at startup so inference runs without setup delays. Heavy operations run inside these workers, and the main thread receives the processed output in a clean, structured format.
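A minimal pool sketch on top of `worker_threads`. Each worker runs its setup code once when it starts (here, a hypothetical weights array stands in for loading a real model) and then serves many jobs; the main thread queues jobs and hands each one to the next idle worker. Replacing crashed workers and per-job timeouts are left out to keep it short.

```typescript
import { Worker } from "node:worker_threads";

// Worker body (plain JavaScript). Top-level code runs once per worker at startup.
const POOL_WORKER_SOURCE = `
  const { parentPort } = require("worker_threads");
  const weights = [0.1, 0.2, 0.3, 0.4]; // stand-in for loading a model once
  parentPort.on("message", (features) => {
    let score = 0;
    for (let i = 0; i < weights.length; i++) score += weights[i] * (features[i] || 0);
    parentPort.postMessage(score);
  });
`;

interface Job {
  features: number[];
  resolve: (score: number) => void;
  reject: (err: Error) => void;
}

class WorkerPool {
  private readonly workers: Worker[] = [];
  private readonly idle: Worker[] = [];
  private readonly queue: Job[] = [];
  private readonly running = new Map<Worker, Job>();

  constructor(size: number) {
    for (let i = 0; i < size; i++) {
      const worker = new Worker(POOL_WORKER_SOURCE, { eval: true });
      worker.on("message", (score: number) => {
        this.running.get(worker)?.resolve(score);
        this.running.delete(worker);
        this.idle.push(worker); // the worker is free for the next job
        this.dispatch();
      });
      worker.on("error", (err: Error) => {
        // An uncaught error ends the worker: fail its job and do not return it to the idle list.
        this.running.get(worker)?.reject(err);
        this.running.delete(worker);
      });
      this.workers.push(worker);
      this.idle.push(worker);
    }
  }

  // Queue a job; it runs as soon as a worker is free.
  run(features: number[]): Promise<number> {
    return new Promise((resolve, reject) => {
      this.queue.push({ features, resolve, reject });
      this.dispatch();
    });
  }

  async close(): Promise<void> {
    await Promise.all(this.workers.map((w) => w.terminate()));
  }

  private dispatch(): void {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop()!;
      const job = this.queue.shift()!;
      this.running.set(worker, job);
      worker.postMessage(job.features);
    }
  }
}
```

Pool size is usually tied to the number of available CPU cores, leaving headroom for the main thread.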

The caching layer supports faster responses and lower compute load, and Redis works well for this because it can store predictions and return them instantly for repeated inputs.

Using input hash keys helps match incoming requests to cached results. TTL rules keep the cache from holding stale data. Observing hit patterns shows how much the cache is contributing and where adjustments might improve performance.

A feedback pipeline helps the system learn from real usage, so a logging service is useful for capturing each prediction and the context around it. This data can then move into a database or object storage where it stays organized and easy to review.

Over time, the stored records become the source for fine-tuning, since they show how the model performed and where adjustments are needed.
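To keep logging off the request's critical path, a logging service can buffer records in memory and write them in batches. In this sketch, `sink` is a hypothetical function that persists a batch wherever the data lives (a bulk database insert, or a JSON Lines object in storage), and the batch size and flush interval are placeholder values. Records still in the buffer are lost if the process crashes, which is often an acceptable trade-off for feedback data but worth knowing.

```typescript
// Hypothetical destination: a bulk DB insert, an object-storage upload, etc.
type Sink<T> = (batch: T[]) => Promise<void>;

class BatchLogger<T> {
  private buffer: T[] = [];
  private readonly timer: ReturnType<typeof setInterval>;
  private readonly sink: Sink<T>;
  private readonly maxBatch: number;

  constructor(sink: Sink<T>, maxBatch = 100, flushIntervalMs = 5000) {
    this.sink = sink;
    this.maxBatch = maxBatch;
    // Flush periodically so records from quiet periods still reach storage.
    this.timer = setInterval(() => void this.flush(), flushIntervalMs);
  }

  // Called on the request path: appends to memory, no I/O.
  log(record: T): void {
    this.buffer.push(record);
    if (this.buffer.length >= this.maxBatch) void this.flush();
  }

  async flush(): Promise<void> {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    try {
      await this.sink(batch);
    } catch (err) {
      // Put the batch back in front so the next flush retries it in order.
      this.buffer = batch.concat(this.buffer);
      console.error("feedback flush failed", err);
    }
  }

  // Stop the timer and write whatever is left, e.g. on graceful shutdown.
  async close(): Promise<void> {
    clearInterval(this.timer);
    await this.flush();
  }
}
```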

For deployment, the priority is stability, so using a Docker image helps keep the environment the same across every machine. Once that foundation is in place, a simple CI pipeline gives you a reliable way to move code from development to production without surprises.

AI workloads can shift quickly, which makes autoscaling useful since it lets the system add capacity when traffic rises. Health checks support this setup by allowing the platform to send requests only to instances that are running correctly.
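A minimal health check using Node's built-in `http` module. The `/healthz` path and the `modelReady` flag are assumptions; the idea is to report 503 until startup work such as model loading has finished, so the platform does not route traffic to an instance that cannot serve predictions yet. On Kubernetes, an endpoint like this would typically back a readiness probe.

```typescript
import { createServer } from "node:http";

// Hypothetical readiness flag: set to true once startup work (e.g. model loading) completes.
let modelReady = false;

const server = createServer((req, res) => {
  if (req.url === "/healthz") {
    // 503 tells the load balancer or orchestrator to keep traffic away for now.
    res.writeHead(modelReady ? 200 : 503, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ status: modelReady ? "ok" : "starting" }));
    return;
  }
  res.writeHead(404).end();
});
```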

This structure gives you a system that can handle the needs of a real product, not just a demo script.

Here is where many developers slip. If you want to grow fast, avoid these behaviors:

Running CPU tasks in the main thread

Calling external AI APIs without caching

Mixing business logic with model logic

Ignoring rate limit handling

Not tracking model outputs and user feedback

Loading models on every request instead of at startup

Letting retries block the event loop

Using a single worker for heavy workloads

Not versioning model responses

Relying only on logs instead of structured metrics

Your next move is to simplify the path and execute in a straight line. Start by choosing one AI feature that delivers clear value. Do not add more until you validate the first one.

After that, decide where the model will run. If it is a heavy generative task, use an external API. If it is a lightweight classifier, run it locally. Keep this decision binary to avoid spreading your architecture thin.

Once the feature and model location are set, build clean service layers around them. Separate routing, business logic, and model calls so you do not mix concerns when you start scaling.

Add Worker Threads for any CPU-heavy work and integrate Redis to keep response times predictable. This combination is not optional if you want stable performance.

As soon as the system starts producing predictions, record feedback. Track inputs, outputs, user actions, and correctness signals so you have data for adjustment later.

When the backend is stable, package the app in Docker and deploy it on a platform with autoscaling. Your AI workload will spike without warning and the system must respond on its own.

This is the sequence. Stick to it, and the MVP will hold up in real conditions instead of collapsing under load.

AI backends fail more often from poor architecture than from poor models. If you build your MVP with a strong base, you can scale features later without rebuilding the whole system. Node.js gives you the concurrency and flexibility you need, but only if you use it with intention.

Focus on one feature, one clear model path, and one stable backend pattern. Then expand based on user behavior and model feedback. This is how real AI products get built.

Get In Touch

Contact us for your software development requirements
