Most conversations about artificial intelligence still orbit around Python, and for good reason. It’s rich in frameworks, beloved by data scientists, and battle-tested in research. But outside of that circle, artificial intelligence is finding a home inside JavaScript applications, running on servers and in browsers.

It started around 2016, when libraries like brain.js brought neural networks to JavaScript. Then, in 2018, TensorFlow.js made it possible to train and run models directly in the browser and in Node.js.

Today, Node.js has become a serious option for AI integration, not just for calling external APIs, but for running generative models, handling real-time inference, and powering multi-modal features. In 2025, the ecosystem is richer than ever, with AI capabilities sitting natively in JavaScript stacks, ready to be deployed at scale.

This article gives a brief overview of how you can integrate AI capabilities into your Node.js application.

For years, the advice was consistent: build your models in Python and host them somewhere so you can call them over an API. This worked, but it split your codebase, added another deployment to manage, and relied on infrastructure you did not wholly control. That’s fine when AI is the main event, but it works less smoothly when AI is merely a single feature in a larger product.

JavaScript changes that dynamic. You can use the same language for the browser and the backend, which means the same people, the same tools, and often the same code can handle both the interface and the AI logic. A model can run in Node.js on your server or in the browser itself, thanks to WebAssembly, WebGPU, and projects like TensorFlow.js or ONNX Runtime Web.

Running locally has other benefits. Data stays on the device or on your own servers, which avoids the legal and security headaches of shipping sensitive information to a third party. It also cuts down latency, so features feel faster. If you already have a Node.js codebase, you can try out AI ideas without setting up a whole separate Python environment.

This isn’t about replacing Python or pretending JavaScript has the same breadth of machine learning libraries. It’s about making AI fit into your existing stack without extra layers of complexity. For many teams, that trade-off is worth it.

TensorFlow.js isn’t just “AI in JavaScript.” For Node.js developers, it’s a runtime shift: the ability to keep model execution in the same environment as your application logic without routing requests to a separate Python service. This matters because it removes a network hop, reduces operational overhead, and makes it easier to align AI workflows with existing DevOps pipelines.

In practice, it supports two main workflows:

Leveraging pre-trained models: For example, loading an image recognition model pre-trained on ImageNet from TensorFlow Hub and running it in-process with your API server. This is ideal for rapid deployment: no separate training infrastructure, no model hosting on an external GPU cluster.

Deploying externally trained models: You might train a BERT variant or a custom CNN in Python with TensorFlow or PyTorch, export or convert it to TensorFlow SavedModel or TensorFlow.js format (a PyTorch model typically needs an intermediate conversion step first), and load it directly into your Node.js service. This keeps your serving environment unified while letting you use the best training ecosystem for the task.
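As a rough sketch of the second workflow, the helper below loads an exported model with either `tf.node.loadSavedModel` (for a TensorFlow SavedModel directory) or `tf.loadGraphModel` (for a converted TensorFlow.js graph model). The helper name `loadExportedModel`, the `format` values, and the directory layout are assumptions made for illustration, and the `tf` object is passed in as a parameter so the function can be exercised without the native bindings installed.

```javascript
const path = require('node:path');

/**
 * Load a model that was trained elsewhere and exported to disk.
 * `tf` is expected to be the object returned by require('@tensorflow/tfjs-node').
 * The `format` values and the directory layout are illustrative assumptions.
 */
async function loadExportedModel(tf, { format, dir }) {
  if (format === 'saved_model') {
    // A SavedModel directory exported from Python.
    return tf.node.loadSavedModel(dir);
  }
  if (format === 'tfjs_graph') {
    // A converted TensorFlow.js graph model: model.json plus weight files.
    const modelUrl = `file://${path.resolve(dir, 'model.json')}`;
    return tf.loadGraphModel(modelUrl);
  }
  throw new Error(`Unsupported model format: ${format}`);
}

module.exports = { loadExportedModel };
```

With the real bindings, this could be called as `loadExportedModel(require('@tensorflow/tfjs-node'), { format: 'saved_model', dir: './model' })`. A Keras or SavedModel export can be converted to the TensorFlow.js format with the `tensorflowjs_converter` command-line tool.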

The strategic value is in the flexibility. A product team under time pressure can pull from the TensorFlow.js model zoo and ship in days. An engineering team with strict compliance needs can run a custom model entirely on-prem in Node, avoiding any exposure to third-party inference APIs.

This isn’t about replacing Python for training; it’s about collapsing the serving layer into the stack you already operate, cutting out the friction of maintaining two runtimes. For teams building full-stack JavaScript systems, TensorFlow.js can turn AI from a bolted-on microservice into a native feature.

Imagine an application that scans product images and identifies what’s in them. You could wire this up in Node.js with a COCO-SSD object detection model in just a few lines:

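```shell
npm install @tensorflow/tfjs-node @tensorflow-models/coco-ssd
```

```javascript
// Importing tfjs-node registers the native TensorFlow backend for Node.js.
const tf = require('@tensorflow/tfjs-node');
const cocoSsd = require('@tensorflow-models/coco-ssd');
const fs = require('fs').promises;

(async () => {
  // Load the model and read the image in parallel.
  const [model, imageBuffer] = await Promise.all([
    cocoSsd.load(),
    fs.readFile('sample.jpg'),
  ]);

  // Decode the image into a 3-channel (RGB) tensor.
  const imgTensor = tf.node.decodeImage(imageBuffer, 3);

  try {
    const predictions = await model.detect(imgTensor);
    console.log(predictions);
  } finally {
    // Release the tensor's memory explicitly once inference is done.
    imgTensor.dispose();
  }
})();
```

Run it, and you’ll get a list of objects, their positions, and a confidence score for each. No separate AI microservice. No language hopping. Just JavaScript.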
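Each prediction returned by COCO-SSD's `detect()` is an object with a `bbox` array (`[x, y, width, height]`), a `class` label, and a `score` between 0 and 1. In a product you would usually keep only the confident detections. A minimal sketch follows; the helper name `summarizeDetections` and the 0.6 threshold are illustrative assumptions rather than recommended values, and the sample data is hand-written instead of coming from a real model run.

```javascript
/**
 * Keep detections at or above a confidence threshold and count them per class.
 * Input objects follow the COCO-SSD prediction shape: { bbox, class, score }.
 * The default threshold of 0.6 is an arbitrary illustrative value.
 */
function summarizeDetections(predictions, minScore = 0.6) {
  const kept = predictions.filter((p) => p.score >= minScore);
  const countsByClass = {};
  for (const p of kept) {
    countsByClass[p.class] = (countsByClass[p.class] || 0) + 1;
  }
  return { kept, countsByClass };
}

// Hand-written sample data in the same shape as real predictions.
const sample = [
  { bbox: [12, 30, 200, 150], class: 'bottle', score: 0.91 },
  { bbox: [220, 40, 90, 120], class: 'cup', score: 0.42 },
  { bbox: [330, 25, 180, 160], class: 'bottle', score: 0.77 },
];

console.log(summarizeDetections(sample).countsByClass); // { bottle: 2 }
```

The right threshold depends on the product: a lower value surfaces more candidates at the cost of more false positives.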

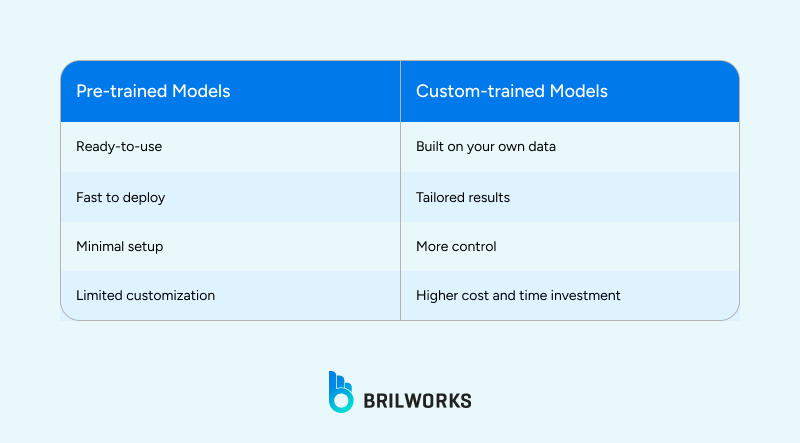

Pre-trained models are useful for getting a feature running quickly, but they rarely match the demands of a real product. In retail analytics, for example, a model trained on general product images might misclassify seasonal or niche items. In manufacturing, a generic vision model can miss subtle defects that matter for quality control. These gaps aren’t minor; they can directly affect business decisions.

A practical approach is a hybrid workflow: train the model in Python using the libraries and tooling best suited for experimentation, then export it to TensorFlow.js for running in Node.js. This isn’t about convenience alone. It allows teams to keep the production environment unified, reduces deployment friction, and lets developers work in the language they know best while still leveraging Python’s strengths for training.

The key insight is that AI doesn’t have to dictate your stack. You can use pre-trained models where speed matters and custom models where precision matters, without splitting your infrastructure. Teams that approach it this way can iterate on features faster, respond to real-world edge cases, and maintain control over the model lifecycle.

Once you have a model ready, whether it’s pre-trained or custom-trained, the challenge becomes fitting it into your existing Node.js stack. Unlike a separate Python service, running the model directly in Node.js keeps your architecture simpler and your team aligned. You don’t need to maintain multiple runtimes, orchestrate cross-language deployments, or deal with API latency for every inference.

Integration isn’t purely technical; it’s also operational. You can manage versioning, monitor performance, and roll out updates in the same workflow you already use for the rest of your application. Libraries like TensorFlow.js let you run inference directly in the server process or even in the browser, which opens possibilities for real-time features without extra backend infrastructure.
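One way to make in-process inference operationally manageable is to load the model once at startup, reuse it across requests, and record how long each inference takes. The sketch below shows one possible shape for that. `createModelService`, the `version` label, and the stats fields are hypothetical names, and the loader is injected so that any model can be plugged in.

```javascript
const { performance } = require('node:perf_hooks');

/**
 * Wrap a model loader so the model is loaded at most once and every
 * inference call is timed. `loadModel` is an async function that resolves
 * to the model; `run(model, input)` performs one inference.
 */
function createModelService({ version, loadModel, run }) {
  let modelPromise = null; // cached so concurrent requests share one load
  const stats = { version, calls: 0, totalMs: 0 };

  async function infer(input) {
    if (!modelPromise) {
      modelPromise = loadModel().catch((err) => {
        modelPromise = null; // allow a retry after a failed load
        throw err;
      });
    }
    const model = await modelPromise;
    const start = performance.now();
    try {
      return await run(model, input);
    } finally {
      stats.calls += 1;
      stats.totalMs += performance.now() - start;
    }
  }

  // Return a copy so callers cannot mutate the internal counters.
  return { infer, getStats: () => ({ ...stats }) };
}

module.exports = { createModelService };
```

With COCO-SSD this might look like `createModelService({ version: 'coco-ssd-v1', loadModel: () => cocoSsd.load(), run: (model, img) => model.detect(img) })`, with `getStats()` feeding whatever monitoring you already use.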

The practical takeaway is that AI can become a first-class citizen in your stack rather than an external bolt-on. Teams that adopt this mindset spend less time firefighting deployment issues and more time iterating on the product itself.

Teams often underestimate the cost of splitting AI and application code across different languages. In a Python-plus-JavaScript setup, every change to the AI feature can involve context switching: debugging, deploying, or even just understanding the code. Keeping everything in Node.js avoids that. Your devs stay in the language they know, your CI/CD pipelines remain simple, and bugs caused by cross-language interactions are reduced.

Running models closer to the user is another practical advantage. You can execute inference in the browser or on edge devices, which cuts network latency and keeps sensitive data local. For features like real-time suggestions, image recognition, or client-side NLP, this isn’t a convenience; it’s often a requirement.

Iteration becomes straightforward. You can treat the AI model like any other module: push updates, test behavior, and deploy without creating separate infrastructure. Small tweaks no longer require coordination with a separate team or service; the model is just another piece of your app.

In short, Node.js with AI isn’t about hype. It’s about making intelligence work within the environment you already have, letting your team move faster and experiment without the friction of cross-language deployment. The question isn’t whether JavaScript can do AI; it’s whether you want to integrate it into your workflow without creating a second stack to manage.

AI can run in Node.js, but making it reliable in a real product isn’t trivial. You need someone who understands how models behave in memory, how inference affects performance, and how to integrate them into your existing code without breaking other features.
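To make the memory point concrete: tensors created by TensorFlow.js hold memory that should generally be released explicitly with `dispose()` (or `tf.tidy()` for synchronous code), and `tf.memory().numTensors` reports how many tensors are currently alive. The sketch below relies on those calls. The helper names `detectFromBuffer` and `countLeakedTensors` are hypothetical, and `tf` and `model` are passed in as parameters.

```javascript
/**
 * Decode an image buffer, run detection, and always release the input tensor.
 * `tf` is expected to be @tensorflow/tfjs-node; `model` is any object with an
 * async detect(tensor) method, such as a loaded COCO-SSD model.
 */
async function detectFromBuffer(tf, model, imageBuffer) {
  const imgTensor = tf.node.decodeImage(imageBuffer, 3);
  try {
    return await model.detect(imgTensor);
  } finally {
    imgTensor.dispose(); // runs even if detect() throws
  }
}

/**
 * Run an async function and report how many tensors it left allocated,
 * based on tf.memory().numTensors before and after the call.
 */
async function countLeakedTensors(tf, fn) {
  const before = tf.memory().numTensors;
  await fn();
  return tf.memory().numTensors - before;
}

module.exports = { detectFromBuffer, countLeakedTensors };
```

A check like `countLeakedTensors` can run in an ordinary test suite, so a leak introduced by a code change shows up before it reaches a long-running server process.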

Hiring Node.js developers with this experience means you don’t waste time on trial and error. They can structure the code, handle deployment, and keep everything maintainable. The result isn’t just AI in your app; it’s AI that works, that scales, and that your team can build on without constantly firefighting.

Get In Touch

Contact us for your software development requirements
