The WHO reports that over a billion people worldwide have a mental health disorder. Anxiety and depression affect people of all ages, backgrounds, and countries.

When COVID-19 hit, demand for mental health support grew, and access to care remains uneven today. As more people turn to digital mental health tools, AI chatbots and wellness apps have become common sources of support, making it easier to get help at any time.

These apps are now essential for both providers and patients, helping deliver better support and care. For developers, building AI mental health apps in a highly regulated field requires an understanding of evolving compliance requirements and UX challenges.

We created this guide to give you a practical checklist. It will help you get started with more clarity.

Chatbots are now a regular part of mental health care. Healthcare systems use generative AI in both backend and front-end workflows alongside human support, so help is always available. Clinicians are increasingly adopting these tools to cut routine work such as taking notes, sending follow-ups, and setting reminders.

Modern mental health apps are clinical tools, not just wellness products. When AI influences a user’s emotions or care, the focus should shift from adding features to preventing harm. The main challenge is setting clear limits on what the system should and shouldn’t do.

Before picking a model, clearly define what it’s allowed to do. Large language models are good for guided reflection, psychoeducation, and structured check-ins. They handle language and tone well, but shouldn’t be used for diagnosis, risk assessment, or crisis intervention.

Technical tip: Set this boundary in your system’s routing logic. Use a simple rule engine to figure out the user’s intent before deciding if an LLM or a rule-based service should handle the request.
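A minimal sketch of such a routing rule, assuming three hypothetical routes (`LLM`, `RULE_BASED`, `HUMAN`) and illustrative regex patterns that are not from any clinical source. A real pattern list would need clinical review; the point here is only that the most restrictive route is checked first and that no model is called before the decision is made.

```python
import re
from enum import Enum


class Route(Enum):
    LLM = "llm"                # guided reflection, psychoeducation, check-ins
    RULE_BASED = "rule_based"  # deterministic flows such as reminders
    HUMAN = "human"            # anything touching crisis, diagnosis, or risk


# Hypothetical patterns, for illustration only.
HUMAN_PATTERNS = [
    r"\b(kill|hurt|harm)\s+myself\b",
    r"\bsuicid\w*",
    r"\bdiagnos\w*",
]
RULE_PATTERNS = [
    r"\bremind\w*",
    r"\b(appointment|schedule|reschedule)\b",
]


def route_message(text: str) -> Route:
    """Pick a handler for a user message before any model is called."""
    lowered = text.lower()
    # Check the most restrictive route first so it always wins.
    if any(re.search(p, lowered) for p in HUMAN_PATTERNS):
        return Route.HUMAN
    if any(re.search(p, lowered) for p in RULE_PATTERNS):
        return Route.RULE_BASED
    # Everything else falls through to the constrained LLM conversation.
    return Route.LLM
```

With these assumed patterns, a reminder request goes to the rule-based service, a message mentioning self-harm or asking for a diagnosis goes to a human, and general reflection goes to the LLM.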

For conversations, use compliant APIs like Azure OpenAI or Claude, and set strict limits on prompts. For risk detection, such as spotting self-harm or crisis situations, don’t use LLMs at all. Make sure you can explain every decision the system makes.

Tip: Node.js is a popular choice for backend development of healthcare apps. It is well suited to real-time, I/O-heavy workloads. For risk detection, developers often use interpretable models such as logistic regression, or rule-based keyword systems.
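As a rough illustration of the rule-based option, the sketch below scores a message against a small phrase list and returns the phrases that matched, so each escalation can be explained afterwards. The phrases, point values, and threshold are all hypothetical placeholders, not clinically validated values, and the example is written in Python for brevity rather than Node.js.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical phrases and point values, for illustration only.
RISK_PHRASES = {
    "kill myself": 10,
    "end my life": 10,
    "hurt myself": 8,
    "no reason to live": 8,
    "can't go on": 6,
    "hopeless": 4,
}
ESCALATION_THRESHOLD = 8  # assumed cut-off
MAX_SCORE = 10


@dataclass
class RiskResult:
    score: int
    escalate: bool
    matched: List[str] = field(default_factory=list)  # the explanation


def assess_risk(text: str) -> RiskResult:
    """Score a message and keep the matched phrases as the audit trail."""
    # Normalise case and typographic apostrophes before matching.
    lowered = text.lower().replace("\u2019", "'")
    matched = [phrase for phrase in RISK_PHRASES if phrase in lowered]
    score = min(MAX_SCORE, sum(RISK_PHRASES[p] for p in matched))
    return RiskResult(score=score, escalate=score >= ESCALATION_THRESHOLD, matched=matched)
```

Because the result carries the matched phrases, a reviewer can see exactly why a session was escalated, which is harder to guarantee with an LLM-based classifier.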

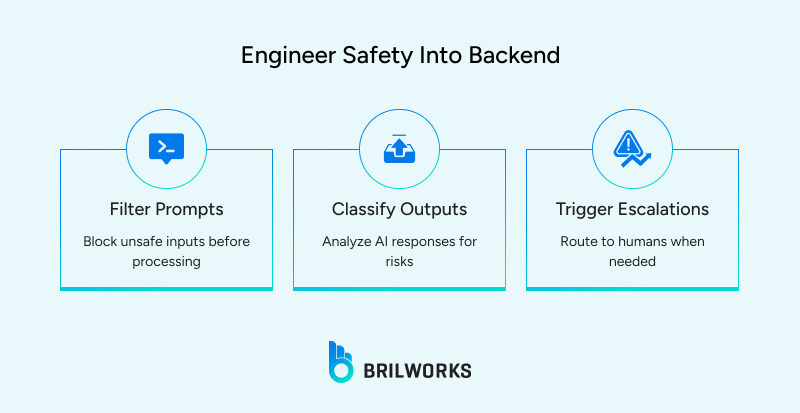

Every AI interaction should go through a dedicated policy enforcement layer that filters prompts, classifies outputs, and triggers escalations. If your system can’t block an AI response in real time, you don’t have a real safeguard.

Enforce Human-in-the-Loop with System States

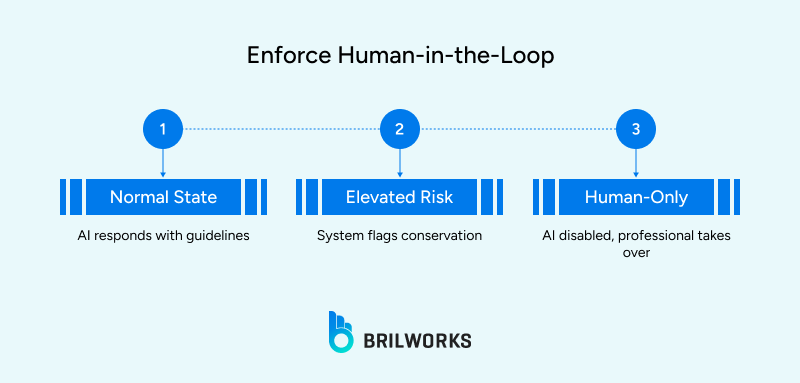

Human oversight must be enforced. Implement a clear conversation state machine (e.g., normal, elevated risk, human-only). When a session enters a restricted state, the system must automatically disable AI responses and route the conversation to a professional.
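The state machine described above could be sketched as follows. The three states come from the text; the transition table, the `clinician_reset` method, and the handoff message are assumptions made for illustration. In this sketch a session in the human-only state cannot be moved back by the automated path at all.

```python
from enum import Enum


class SessionState(Enum):
    NORMAL = "normal"
    ELEVATED_RISK = "elevated_risk"
    HUMAN_ONLY = "human_only"


# Assumed transition table: nothing automated leads out of HUMAN_ONLY.
ALLOWED = {
    SessionState.NORMAL: {SessionState.ELEVATED_RISK, SessionState.HUMAN_ONLY},
    SessionState.ELEVATED_RISK: {SessionState.NORMAL, SessionState.HUMAN_ONLY},
    SessionState.HUMAN_ONLY: set(),
}

HANDOFF_MESSAGE = "A member of our care team will join this conversation shortly."


class Session:
    def __init__(self) -> None:
        self.state = SessionState.NORMAL

    def transition(self, new_state: SessionState) -> None:
        """Automated transitions; rejects anything not in the table."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state

    def clinician_reset(self) -> None:
        """Hypothetical hook: only a human reviewer returns a session to NORMAL."""
        self.state = SessionState.NORMAL

    @property
    def ai_enabled(self) -> bool:
        return self.state is not SessionState.HUMAN_ONLY


def respond(session: Session, user_text: str, generate_ai_reply) -> str:
    """Gate every reply on the session state before the model is called."""
    if not session.ai_enabled:
        return HANDOFF_MESSAGE
    return generate_ai_reply(user_text)
```

Keeping the check inside `respond` means the model is never invoked for a restricted session, rather than generating a reply and discarding it afterwards.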

Your backend must separate services by risk domain. Isolate the AI inference service from the clinical data layer and the escalation workflow service.

A Secure, Separated Setup:

Service A: AI Inference & Moderation

Service B: User Data & Session Management

Service C: Clinical & Escalation Workflows

How you handle data shapes your system’s design. Sensitive conversations need strong encryption, immutable audit logs, and strict data retention rules. You must be able to trace every data access to a specific role and reason. If you can’t track who accessed what and why, your system isn’t ready for production.
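One way to make an audit trail tamper-evident is to chain entries by hash, so that editing any earlier record invalidates everything after it. The sketch below is an in-memory illustration only: the `AuditLog` class, its field names, and the all-zero genesis hash are assumptions, and a production system would also need write-once storage and access controls around the log itself.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # assumed placeholder for the first entry's predecessor


class AuditLog:
    """Append-only log where each entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self._entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, actor_id: str, role: str, resource: str, reason: str) -> dict:
        """Log who accessed what, in which role, and why."""
        if not reason.strip():
            raise ValueError("every access needs a stated reason")
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor_id": actor_id,
            "role": role,
            "resource": resource,
            "reason": reason,
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

Rejecting an empty `reason` at write time is a small design choice that forces the "why" to be captured alongside the "who" and "what".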

Don’t just track uptime. Watch for issues like odd AI responses, missed escalation triggers, drops in risk detection accuracy, and delays in human intervention. Use feature flags and instant kill switches for AI parts. Your system should be able to stop AI interactions without shutting down the whole app.
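A kill switch of this kind can be as small as a flag checked on every request. The sketch below uses a hypothetical in-memory `FeatureFlags` class as a stand-in for whatever flag service you run; the flag name `ai_chat` and the fallback message are likewise assumptions.

```python
import threading


class FeatureFlags:
    """Thread-safe in-memory flags; a stand-in for a real flag service."""

    def __init__(self, **initial: bool) -> None:
        self._flags = dict(initial)
        self._lock = threading.Lock()

    def is_enabled(self, name: str) -> bool:
        with self._lock:
            # Unknown flags default to off, so a missing flag fails closed.
            return self._flags.get(name, False)

    def set(self, name: str, enabled: bool) -> None:
        with self._lock:
            self._flags[name] = enabled


FALLBACK = "Our assistant is paused right now. You can still message your care team."


def handle_message(flags: FeatureFlags, text: str, generate_ai_reply) -> str:
    """Serve an AI reply only while the flag is on; otherwise degrade gracefully."""
    if not flags.is_enabled("ai_chat"):
        return FALLBACK
    try:
        return generate_ai_reply(text)
    except Exception:
        # A model failure should degrade to the same safe path, not crash the app.
        return FALLBACK
```

Flipping `ai_chat` off stops AI replies on the next request while scheduling, messaging, and other non-AI parts of the app keep running.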

Most mental health apps don’t fail because they lack features. They fail when something goes wrong and no one anticipated it. If your system is not designed to slow down, escalate, and hand control to a human at the right moment, it is not a healthcare product.

If you are planning to build or re-architect a mental health app, the real work is not UI polish or model choice. It is system design, safety boundaries, and compliance-ready infrastructure. Teams that get this right early avoid costly rewrites.

Get In Touch

Contact us for your software development requirements
