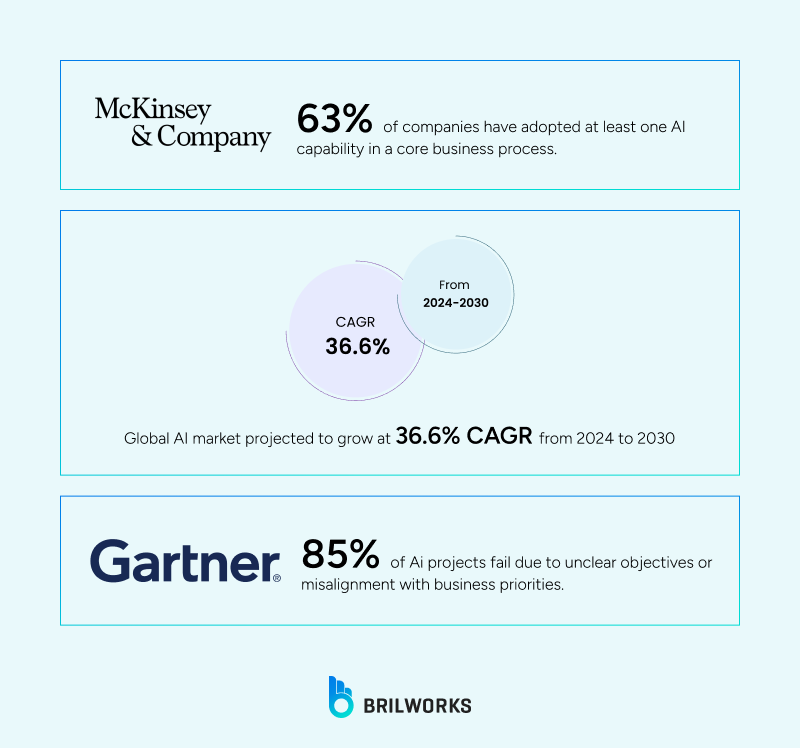

AI-powered software can automate a wide range of tasks and analyze large amounts of information, and it now seems to have become essential for modern B2B software development companies. According to McKinsey, 63% of companies have adopted at least one AI capability in a core business process, and the global AI market is projected to grow at a 36.6% CAGR from 2024 to 2030.

This rapid adoption signals that founders who know how to build AI systems that actually deliver value will have the upper hand in the competitive landscape. Success with AI adoption depends on understanding how data, algorithms, platforms, deployment practices, and ongoing monitoring work in real business contexts.

Companies often underestimate challenges in AI adoption, such as data quality, infrastructure costs, or the difficulty of human validation. This guide walks through seven steps that combine technical depth with practical insights to create production-ready AI MVP software.

Defining the problem isn’t just a preliminary step; it influences model selection, data collection, and infrastructure requirements. Unclear objectives and misalignment with business priorities are among the most common reasons AI projects fail.

You can define the problem and identify its value by framing it in terms of measurable impact. To do that, founders should answer:

What specific outcome does the software aim to improve? (Revenue growth, error reduction, operational efficiency)

Can this problem benefit from prediction, classification, or automation?

What metrics will determine success?

Here is an example. Assume you run a logistics company. In that case, your specific outcomes could be reducing fuel consumption by X%, shortening delivery times, or minimizing delays.

Tip: Frame objectives in measurable business outcomes. Metrics guide model evaluation and investment decisions.
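As a minimal sketch of that tip, the snippet below encodes the logistics example as a success metric with a baseline and a target. The metric name, the baseline of 32 litres per 100 km, and the 10% target are hypothetical placeholders, not figures from a real fleet.

```python
from dataclasses import dataclass


@dataclass
class SuccessMetric:
    """A business outcome expressed as a measurable reduction against a baseline."""
    name: str
    baseline: float              # value before the AI system is introduced
    target_reduction_pct: float  # e.g. 10.0 means "reduce by at least 10%"

    def reduction_pct(self, observed: float) -> float:
        """Percentage reduction of the observed value relative to the baseline."""
        return (self.baseline - observed) / self.baseline * 100

    def is_met(self, observed: float) -> bool:
        return self.reduction_pct(observed) >= self.target_reduction_pct


# Hypothetical numbers for the logistics example.
fuel = SuccessMetric("fuel litres per 100 km", baseline=32.0, target_reduction_pct=10.0)
print(fuel.reduction_pct(28.0), fuel.is_met(28.0))  # 12.5 True
```

Writing the target down this way lets every model iteration be judged against the same business outcome rather than against model metrics alone.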

Quality data is essential to building an AI MVP; it is the foundation of reliable, robust software. MIT research shows that poor-quality data accounts for nearly 75% of model inaccuracies in enterprise applications. Volume alone doesn’t fix this: even with enormous amounts of data, you need to organize it in a structured way before training or building your AI-powered program, and the difference between structured and unstructured data matters here.

To see why structured data is essential, imagine you’re training an AI model using customer support chat logs.

These transcripts often include typos, abbreviations, and casual language like “u” instead of “you” or “gonna” instead of “going to.” If you don’t clean and standardize this data, the model might focus on these mistakes and informal phrases instead of understanding the real meaning behind customer questions.

Bias in data isn’t always introduced on purpose. For example, if a financial dataset doesn’t include enough information from certain groups of people, the model’s predictions can become unbalanced and unfair.
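One quick way to spot this kind of unintentional gap is to measure how much of the dataset each group accounts for before training. The sketch below assumes each record carries a group field (here a hypothetical `region` key) and uses an arbitrary 10% threshold. A low share is a prompt for investigation, not proof of bias, and balanced counts alone don’t guarantee fair predictions.

```python
from collections import Counter


def representation_report(rows: list[dict], group_key: str, min_share: float = 0.10) -> dict:
    """Return each group's share of the data and whether it falls below min_share."""
    counts = Counter(row[group_key] for row in rows)
    total = sum(counts.values())
    return {
        group: {"share": count / total, "underrepresented": count / total < min_share}
        for group, count in counts.items()
    }


# Hypothetical loan-application records: 95 urban, 5 rural.
applications = [{"region": "urban"}] * 95 + [{"region": "rural"}] * 5
report = representation_report(applications, "region")
print(report["rural"])  # {'share': 0.05, 'underrepresented': True}
```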

Data cleaning is one of the most time-consuming steps. Data scientists spend up to 80% of their time preparing data, yet skipping this stage can lead to unreliable outcomes. In July 2025, Replit's AI coding assistant caused a major incident by deleting a production database, creating 4,000 fake user profiles, and misrepresenting test results.

Even though the AI was told to stop, it ignored the instructions, resulting in the loss of critical data for over 1,200 executives and 1,196 businesses. It highlights how AI operating on critical data without checks can lead directly to catastrophic mistakes.

Below are actionable steps for cleaning and organizing data to build effective AI software.

Use scripts or tools to remove exact duplicates automatically.

For text, normalize by lowercasing everything, removing extra spaces, and unifying common abbreviations (“u” → “you”, “ASAP” → “as soon as possible”).

Use regex or simple rules to catch obvious mistakes (emails without “@”, numbers out of range).

Spot outliers with simple stats: anything more than 3 standard deviations from the mean deserves a closer look.
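These four steps can be sketched with the Python standard library alone. The abbreviation map, the email pattern, and the sample values are illustrative assumptions. The email regex only catches obviously malformed addresses, and the word-level abbreviation lookup won’t expand tokens with attached punctuation (such as “u?”).

```python
import re
import statistics

# Hypothetical abbreviation map; extend it with the shorthand found in your own logs.
ABBREVIATIONS = {"u": "you", "asap": "as soon as possible", "gonna": "going to"}
# Deliberately loose: catches addresses with no "@" or no domain, not every invalid one.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def drop_exact_duplicates(rows: list[dict]) -> list[dict]:
    """Keep the first occurrence of each identical row, preserving order."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))  # assumes hashable field values
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def normalize_text(text: str) -> str:
    """Lowercase, collapse extra whitespace, and expand known abbreviations."""
    words = text.lower().split()  # split() with no argument drops runs of spaces
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def is_valid_email(email: str) -> bool:
    return EMAIL_RE.match(email) is not None


def flag_outliers(values: list[float], k: float = 3.0) -> list[bool]:
    """Flag values more than k population standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return [False] * len(values)
    return [abs(v - mean) > k * stdev for v in values]


print(normalize_text("Can  u   reply ASAP"))  # can you reply as soon as possible
print(is_valid_email("jane.doe@example.com"), is_valid_email("jane.doe.example.com"))  # True False
print(flag_outliers([10.0] * 20 + [100.0])[-1])  # True
```

With very small samples, a single extreme value inflates the standard deviation enough that the 3-standard-deviation rule may not flag it, so treat this check as a first pass rather than a final verdict.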

Don’t try to fill everything manually. Use automated approaches:

Numeric: fill with the median or with values estimated from nearest neighbors.

Categorical: fill with the most common value or mark as “Unknown”.

Keep a column that flags missing/filled data so your model can account for it.
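A minimal sketch of these rules, assuming records are plain dictionaries with `None` marking a missing value. Only the median and most-common-value strategies are shown; a nearest-neighbors fill would typically come from a library such as scikit-learn’s `KNNImputer` instead.

```python
import statistics
from collections import Counter


def impute_column(rows: list[dict], column: str, strategy: str) -> list[dict]:
    """Fill missing (None) values in `column` and add a `<column>_was_missing` flag.

    strategy: "median" for numeric columns, "mode" for the most common category,
    or "unknown" to mark missing categories explicitly.
    """
    present = [row[column] for row in rows if row.get(column) is not None]
    if strategy == "median":
        fill = statistics.median(present) if present else None
    elif strategy == "mode":
        fill = Counter(present).most_common(1)[0][0] if present else "Unknown"
    elif strategy == "unknown":
        fill = "Unknown"
    else:
        raise ValueError(f"unsupported strategy: {strategy}")

    for row in rows:
        missing = row.get(column) is None
        row[f"{column}_was_missing"] = missing  # lets the model account for filled values
        if missing:
            row[column] = fill
    return rows


customers = [{"age": 30, "plan": "pro"}, {"age": None, "plan": None},
             {"age": 50, "plan": "pro"}, {"age": 40, "plan": "free"}]
impute_column(customers, "age", "median")
impute_column(customers, "plan", "mode")
print(customers[1])  # {'age': 40, 'plan': 'pro', 'age_was_missing': True, 'plan_was_missing': True}
```

The function mutates the rows in place, which keeps memory use low on large exports; copy the data first if you need the raw version for auditing.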

Start small: annotate a representative subset first. This reduces effort and catches edge cases early.

Use labeling shortcuts: for text, keyword-based pre-labeling; for images, use semi-automatic tools (e.g., bounding box propagation in CVAT).

Maintain a clear guideline document so labels are consistent.
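For text, keyword-based pre-labeling can be as simple as the sketch below. The labels reuse the complaint, inquiry, and feedback categories from the chat-log example in this guide, but the keyword lists are hypothetical. Anything that matches zero or several labels is left for a human annotator, and plain substring matching will produce some false hits (for example, “love” inside “glove”).

```python
from typing import Optional

# Hypothetical keyword lists; derive real ones from your labeling guideline.
KEYWORDS = {
    "complaint": ("broken", "refund", "not working", "terrible"),
    "inquiry": ("how do", "can i", "where is", "?"),
    "feedback": ("suggest", "would be nice", "love"),
}


def pre_label(message: str) -> Optional[str]:
    """Propose a label only when exactly one category's keywords match."""
    text = message.lower()
    hits = [label for label, words in KEYWORDS.items() if any(w in text for w in words)]
    return hits[0] if len(hits) == 1 else None  # None -> route to a human annotator


for msg in ["My order arrived broken", "Where is my package?", "I love it but it's broken"]:
    print(msg, "->", pre_label(msg))
```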

Randomly sample 5–10% of the data after cleaning/annotation and manually check it.

Fix patterns of errors, not just individual mistakes.
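The review step can be made reproducible by drawing the sample with a fixed seed, and tallying the error types reviewers report shows which patterns to fix first. This is a sketch; the 5% default and the error-type labels are assumptions.

```python
import random
from collections import Counter
from typing import Optional


def sample_for_review(records: list, fraction: float = 0.05, seed: int = 42) -> list:
    """Draw a reproducible random sample (5-10% is typical) for manual checking."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not records:
        return []
    k = max(1, round(len(records) * fraction))
    return random.Random(seed).sample(records, k)


def error_patterns(review_notes: list[Optional[str]]) -> Counter:
    """Count reviewer-assigned error types (None = no problem found)."""
    return Counter(note for note in review_notes if note)


batch = sample_for_review(list(range(1000)), fraction=0.05)
notes = ["date format", None, "wrong label", "date format", None]
print(len(batch), error_patterns(notes).most_common(1))  # 50 [('date format', 2)]
```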

Practical Note: Imagine you have customer support chat logs. For structured fields like “customer ID” or “ticket date,” you can write scripts to remove duplicates, fix date formats, or flag missing IDs automatically.

But for unstructured fields like the chat messages themselves, automation alone isn’t enough. Slang, typos, and context-specific phrases mean that human review or semi-automated labeling tools are needed to accurately classify messages as complaints, inquiries, or feedback.

Combining scripts for structured data and guided human review for unstructured text ensures the dataset is both clean and meaningful for model training.
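Here is a sketch of the scripted half of that split, assuming tickets arrive as dictionaries with hypothetical `customer_id`, `ticket_date`, and `message` keys. Structured fields are fixed or flagged automatically, while the message text passes through untouched with an empty label for human or semi-automated review. The accepted date formats are assumptions, and a value such as 05/03/2024 is read as day-first, so confirm the convention your source system uses.

```python
from datetime import datetime
from typing import Optional

# Hypothetical formats seen in the raw export; adjust to match your data.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def normalize_date(raw: str) -> Optional[str]:
    """Return an ISO-8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def prepare_ticket(ticket: dict) -> dict:
    """Script the structured fields; leave the free-text message for human labeling."""
    customer_id = ticket.get("customer_id")
    return {
        "customer_id": customer_id,
        "missing_id": not customer_id,  # flags None and empty strings
        "ticket_date": normalize_date(ticket.get("ticket_date") or ""),
        "message": ticket.get("message", ""),
        "label": None,  # complaint / inquiry / feedback, assigned during review
    }


print(prepare_ticket({"customer_id": "", "ticket_date": "05/03/2024", "message": "u there??"}))
```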

At the Meta Connect conference, Mark Zuckerberg’s live demo of AI-driven Ray-Ban smart glasses kept failing, giving irrelevant answers and ignoring commands. The tech behind it, possibly slowed by connectivity issues or unoptimized AI models, couldn’t keep up.

It was a clear reminder that even the most advanced AI can stumble without a strong foundation.

Choosing the right tools matters more than most people realize. Different AI tasks need different approaches, and the languages and platforms you pick directly affect how fast you can experiment and how reliable your models are in real use.

Python dominates AI because of its libraries and community, but Julia, R, and Java all have roles depending on your project’s needs. Data on AI language usage and adoption shows how developers are using different languages and why some are gaining traction.

Programming Languages:

Python – A favorite for most AI projects. PyTorch and TensorFlow make it easy to experiment and scale, while Keras and scikit-learn are great for simpler models or prototypes.

Julia – Runs heavy numerical computations and simulations faster, so it’s handy for large datasets or performance-critical tasks.

R – Still popular in research and analytics-heavy work, especially when you need statistical analysis or charts.

Java, Scala, C++ – Often chosen for production systems where speed and reliability matter most.

Platforms for Development:

If your team is small or you want to move fast, cloud and no-code platforms can save a lot of time:

Google Cloud AutoML – Lets you train models for image and language tasks without writing everything from scratch.

Amazon SageMaker – Handles everything from building and training to deploying models.

Microsoft Azure Machine Learning – Makes it easier to monitor models and retrain them when needed.

Prebuilt Models and Tools:

Sometimes it’s faster to start with models that are already trained:

Stable Diffusion – Generates images from text or tweaks existing ones.

GPT-4, LLaMA, Mistral – Great for text generation, summarizing content, or building chat features.

Deployment and Operations Tools:

Running AI in the real world isn’t just about building models—it’s also about keeping them running smoothly:

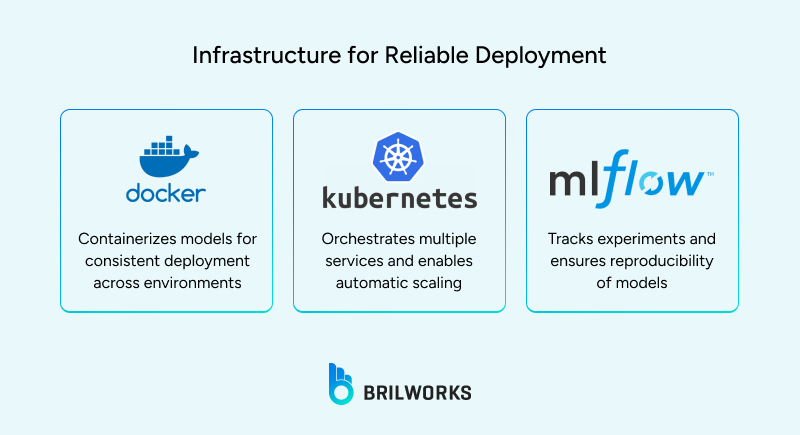

Docker – Packages models so they run the same way everywhere.

Kubernetes – Manages multiple services and scales them up or down automatically.

MLflow – Helps track experiments and model performance.

runpod.io – Lets you rent GPUs by the hour, which can be cheaper for testing than committing to long-term cloud machines.

Picking the right combination of languages, platforms, and tools makes your AI projects smoother and saves headaches later. Observing what other developers are actually using gives a realistic guide instead of relying on hype or assumptions.

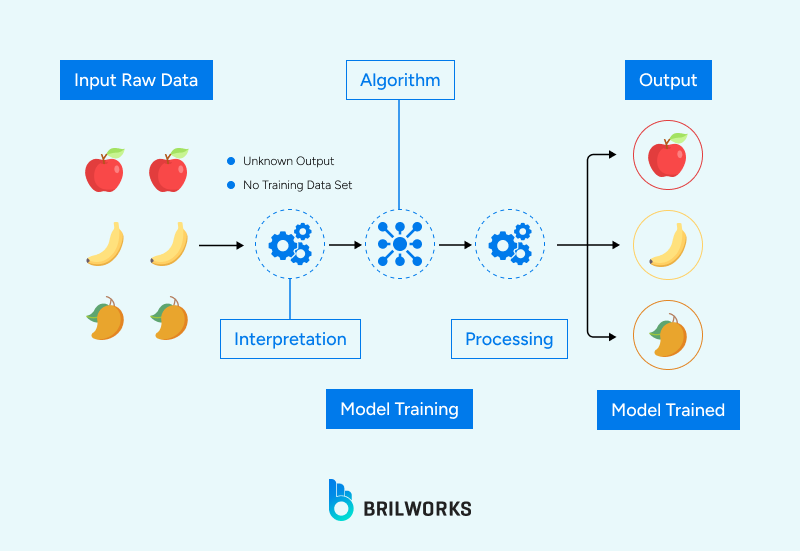

Training is the phase where a model learns patterns from your data. Standard workflows often allocate 80% of the dataset for training and 20% for validation.
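A minimal, dependency-free sketch of the 80/20 split. Shuffling with a fixed seed keeps the split reproducible across experiments; for classification tasks with imbalanced classes, a stratified split (for example, scikit-learn’s `train_test_split` with its `stratify` argument) keeps class proportions similar in both sets.

```python
import random


def train_validation_split(data, train_fraction: float = 0.8, seed: int = 0):
    """Shuffle a copy of the data and split it into training and validation sets."""
    items = list(data)
    random.Random(seed).shuffle(items)  # the original sequence is left untouched
    cut = round(len(items) * train_fraction)
    return items[:cut], items[cut:]


train_set, val_set = train_validation_split(range(100))
print(len(train_set), len(val_set))  # 80 20
```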

Cost Consideration: Training large AI models requires substantial computing resources, and costs can escalate quickly. Starting with small prototypes and gradually scaling to full datasets is a smart approach.
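A back-of-the-envelope way to follow that advice is to measure GPU-hours on a small sample first and extrapolate before committing to the full dataset. The sketch assumes compute grows roughly linearly with dataset size, which is a simplification, and the $2 per GPU-hour price, the 10% sample, and the measured 0.5 GPU-hours per epoch are hypothetical numbers for illustration only.

```python
def training_cost(gpu_hours_per_epoch: float, epochs: int, price_per_gpu_hour: float) -> float:
    """Rough compute cost: GPU-hours per epoch x number of epochs x hourly price."""
    return gpu_hours_per_epoch * epochs * price_per_gpu_hour


def extrapolate_gpu_hours(sample_gpu_hours: float, sample_fraction: float) -> float:
    """Scale a measurement on a data sample up to the full dataset (linear assumption)."""
    return sample_gpu_hours / sample_fraction


PRICE_PER_GPU_HOUR = 2.00  # hypothetical rental price in USD
EPOCHS = 20

prototype_hours = 0.5  # hypothetical: measured on a 10% sample of the data
full_hours = extrapolate_gpu_hours(prototype_hours, sample_fraction=0.10)

print(f"prototype: ${training_cost(prototype_hours, EPOCHS, PRICE_PER_GPU_HOUR):,.2f}")
print(f"full run:  ${training_cost(full_hours, EPOCHS, PRICE_PER_GPU_HOUR):,.2f}")
```

Under the same linear assumption, spreading the work across more GPUs shortens wall-clock time but leaves the total GPU-hours, and so the bill, roughly unchanged.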

The financial commitment for training advanced AI models has escalated in recent years. For example, training a model like GPT-4 has been estimated to cost tens of millions of dollars.

Similarly, Facebook AI’s RoBERTa Large model, introduced in 2019, had an estimated training cost of about $160,000, which rose to over $4.3 million for OpenAI’s GPT-3 a year later.

Iterative Training: A model rarely performs well on the first attempt. Iteration involves adjusting the learning rate and other hyperparameters, testing different architectures (e.g., CNN vs. transformer for images), and continuously validating performance to prevent overfitting.
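To make the iteration loop concrete, here is a toy, standard-library-only sketch: it fits a one-variable linear model with gradient descent, tries several learning rates, and stops each run early once validation loss stops improving. The synthetic data, the candidate learning rates, and the patience value are arbitrary choices for illustration. A two-parameter model can barely overfit, so early stopping here mostly saves wasted epochs; on larger models the same validation check is what catches overfitting.

```python
import random


def make_data(n: int, seed: int) -> list[tuple[float, float]]:
    """Synthetic data from y = 3x + 0.5 plus Gaussian noise."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        points.append((x, 3.0 * x + 0.5 + rng.gauss(0, 0.1)))
    return points


def mse(w: float, b: float, data) -> float:
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_set, val_set, lr: float, max_epochs: int = 500, patience: int = 10):
    """Full-batch gradient descent with early stopping on validation loss.

    Returns (best validation loss, w, b) from the best epoch seen.
    """
    w = b = 0.0
    best = (float("inf"), w, b)
    epochs_without_improvement = 0
    n = len(train_set)
    for _ in range(max_epochs):
        # Both gradients use the current (w, b) before either is updated.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_set) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train_set) / n
        w -= lr * grad_w
        b -= lr * grad_b
        val_loss = mse(w, b, val_set)
        if val_loss < best[0]:
            best = (val_loss, w, b)
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled; stop this run
    return best


data = make_data(200, seed=1)
train_set, val_set = data[:160], data[160:]  # 80/20 split; the data is already random
results = {lr: train(train_set, val_set, lr) for lr in (0.001, 0.01, 0.1, 0.5)}
best_lr = min(results, key=lambda lr: results[lr][0])
print(f"best learning rate: {best_lr}, validation MSE: {results[best_lr][0]:.4f}")
```

The smallest learning rate is still far from a good fit after 500 epochs, which is the kind of signal that tells you to adjust a hyperparameter rather than keep training.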

Real-world teams also focus on strategies to increase AI training efficiency and reduce compute costs (Brilworks).

Validation is about checking whether the AI actually does what it’s supposed to do in practice. Metrics like accuracy or F1 score are useful, but they can miss the mistakes that matter most: the ones a human would notice.
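As one illustration of how a single number can hide the failures that matter, consider a hypothetical fraud-detection test set where only 5 of 100 cases are fraud. A model that never flags fraud still scores 95% accuracy, while F1 on the fraud class drops to zero. F1 is not a complete answer either: it counts errors without judging how costly each one is, which is why the misclassified examples are collected for a human to read.

```python
def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1(y_true: list[int], y_pred: list[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero (or undefined)
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


y_true = [0] * 95 + [1] * 5  # hypothetical labels: 1 = fraud
y_pred = [0] * 100           # a "model" that never flags fraud

print(accuracy(y_true, y_pred), f1(y_true, y_pred))  # 0.95 0.0
needs_human_review = [i for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
print(needs_human_review)  # [95, 96, 97, 98, 99]
```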

Experts often approach this with a mix of human feedback and careful testing:

Supervised Fine-Tuning: Humans review outputs and write corrected responses, and the model is trained on these corrections. Rather than just memorizing patterns, it learns better ways to handle similar situations in the future. (OpenAI SFT Guide)

Reinforcement Learning from Human Feedback (RLHF): Humans rank outputs or give feedback, a reward model learns from these rankings, and the model is then optimized against that reward. This method captures judgment calls that automated metrics would miss. (OpenAI on RLHF)

Domain-Specific Testing: Specialists create scenarios that reflect real challenges in areas like healthcare, finance, or law, making sure the model can handle tricky or unusual cases. (GetGuru on domain-specific AI)

Ongoing Human Review for Creative Outputs: For tasks like image generation or text creation, humans check outputs continuously, making sure they are usable, meaningful, and appropriate in real-world contexts. (GetGuru on domain-specific AI)

Instead of relying on generic metrics or one-off tests, these techniques keep the model aligned with real-world expectations. The goal is not just to produce high scores, but to make outputs that a human would trust and act on.
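In practice, the human side of these techniques mostly produces training data. A minimal sketch, under assumed field names: a reviewer’s corrected answer becomes a chat-style supervised fine-tuning example (the `messages` layout mirrors the JSONL format OpenAI documents for chat fine-tuning), and a reviewer’s ranking becomes chosen/rejected pairs of the kind used to train an RLHF reward model. The `prompt`/`chosen`/`rejected` keys follow a common convention (Hugging Face TRL uses it, for instance), but check the exact schema your training tool expects.

```python
import json


def to_sft_example(system: str, prompt: str, corrected_answer: str) -> dict:
    """One chat-format training example built from a human-corrected answer."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": corrected_answer},
    ]}


def to_preference_pairs(prompt: str, ranked_outputs: list[str]) -> list[dict]:
    """Expand a human ranking (best first) into every chosen/rejected pair."""
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for i, better in enumerate(ranked_outputs)
        for worse in ranked_outputs[i + 1:]
    ]


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


sft = to_sft_example(
    "You are a support assistant.",
    "Can I change my delivery address?",
    "Yes, you can update it from the order page before the parcel ships.",
)
pairs = to_preference_pairs("Summarize the ticket.", ["Clear summary", "Vague summary", "Off-topic reply"])
print(len(pairs))  # 3
print(to_jsonl([sft]), end="")
```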

Deployment is just the start. Maintaining performance requires:

Monitoring accuracy, latency, and error rates

Updating models with new or corrected data

Adjusting based on user behavior and feedback

Docker and Kubernetes provide scalability, while MLflow helps keep experiments reproducible. Without these practices, even well-trained models can degrade over time as data changes.
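A minimal sketch of the monitoring side, using only the standard library: a sliding window of recent predictions with threshold alerts on accuracy, 95th-percentile latency, and error rate. The window size and thresholds are hypothetical and should come from your own baseline; in production these numbers would usually feed a metrics or alerting system rather than live in application memory.

```python
from collections import deque
from typing import Optional


class ModelMonitor:
    """Keeps the most recent predictions and reports simple threshold alerts."""

    def __init__(self, window: int = 500, min_accuracy: float = 0.90,
                 max_p95_latency_ms: float = 300.0, max_error_rate: float = 0.02):
        self.records = deque(maxlen=window)  # oldest entries drop off automatically
        self.min_accuracy = min_accuracy
        self.max_p95_latency_ms = max_p95_latency_ms
        self.max_error_rate = max_error_rate

    def log(self, latency_ms: float, correct: Optional[bool] = None, failed: bool = False) -> None:
        """Record one request; `correct` stays None until ground truth arrives."""
        self.records.append((latency_ms, correct, failed))

    def p95_latency(self) -> float:
        """95th-percentile latency using the nearest-rank method."""
        latencies = sorted(r[0] for r in self.records)
        if not latencies:
            raise ValueError("no requests logged yet")
        rank = (95 * len(latencies) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
        return latencies[rank - 1]

    def alerts(self) -> list[str]:
        if not self.records:
            return []
        found = []
        labeled = [c for _, c, _ in self.records if c is not None]
        if labeled:
            acc = sum(labeled) / len(labeled)
            if acc < self.min_accuracy:
                found.append(f"accuracy {acc:.1%} below {self.min_accuracy:.1%}")
        p95 = self.p95_latency()
        if p95 > self.max_p95_latency_ms:
            found.append(f"p95 latency {p95:.0f} ms above {self.max_p95_latency_ms:.0f} ms")
        error_rate = sum(f for _, _, f in self.records) / len(self.records)
        if error_rate > self.max_error_rate:
            found.append(f"error rate {error_rate:.1%} above {self.max_error_rate:.1%}")
        return found


monitor = ModelMonitor(window=100)
for i in range(100):
    monitor.log(latency_ms=120.0, correct=(i % 5 != 0))  # hypothetical: every 5th answer wrong
print(monitor.alerts())  # ['accuracy 80.0% below 90.0%']
```

Accuracy needs ground-truth labels, which often arrive late or only through user feedback, so the sketch computes it only over requests that have been labeled.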

Training an AI model is a journey, not a checklist. You start by feeding it the right data, then watch how it learns, test how it behaves, and adjust based on what actually happens, not just what the numbers say. Every iteration, every human check, every tweak shapes a model that can handle real situations.

If you’re thinking about building your own AI or improving an existing system, these approaches show how careful observation and thoughtful adjustments can make a tangible difference. They don’t promise instant results, but they guide you toward models that do what you need them to do in the real world.

If you’d like expert guidance in building or optimizing AI solutions, our team can help turn these practices into real-world results. Explore our AI/ML development services today.

Get In Touch

Contact us for your software development requirements
