Generative AI has changed creative work in ways that seemed impossible before ChatGPT. Tasks that used to need a lot of human effort can now be done by AI, and sometimes, AI even outperforms creative professionals.

In many areas (such as coding, visual design, and writing), AI is performing at a level that rivals that of human professionals.

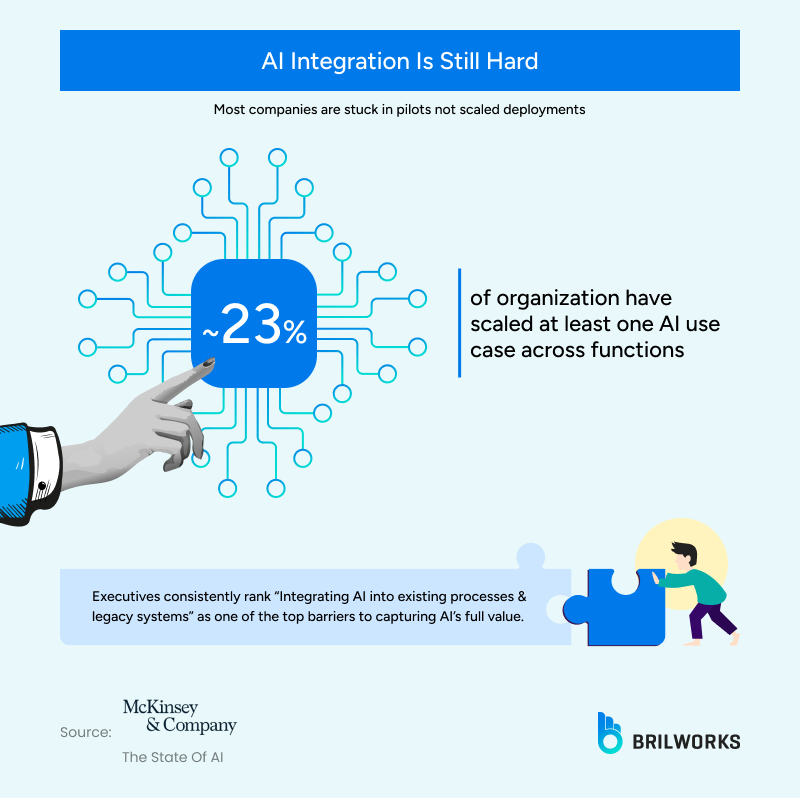

Even with all the excitement, there is a big gap between what AI could do and what it actually delivers. Research, including a 2025 MIT study, shows that almost 95% of enterprise generative AI projects do not achieve a measurable return on investment (ROI).

This has made many companies worry that the "AI Boom" could turn into an "AI Bubble." Investments are rising fast, reaching over $37 billion in 2025, which is more than three times the amount in 2024, but many organizations still struggle to use these tools at scale.

To see why so many projects stall, we need to look at the main challenges companies face when adopting AI today.

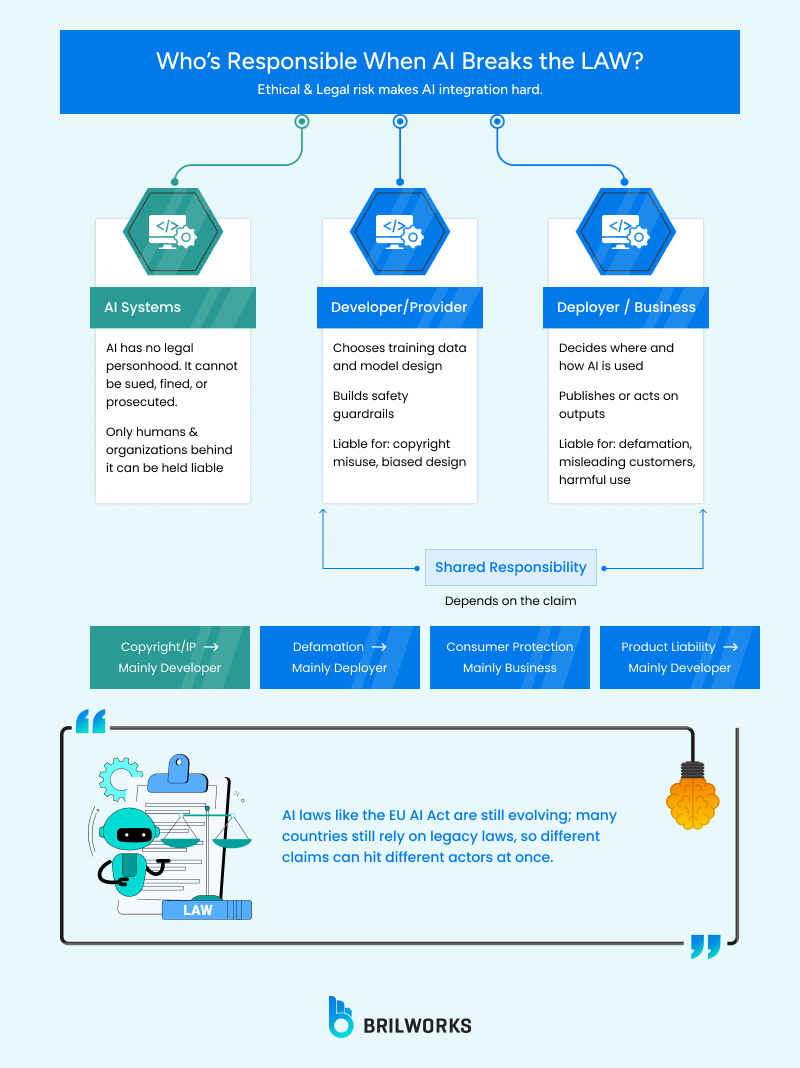

A major challenge is the unclear rules around copyright and ownership. If AI creates content that breaks regulations or infringes on a patent, it is not clear who is responsible.

AI cannot be sued or punished. It lacks "legal personhood," which means it cannot own property, pay fines, or be a defendant in a courtroom. You cannot "punish" an algorithm; you can only penalize the humans or entities behind it.

So, who is responsible: the developer or the user? In these cases, both the developer (provider) and the deployer (user or business) can be held accountable.

The developer is usually held responsible for "Input" issues, such as training the AI on stolen data, and for "Design Defects," such as a model that is biased or unsafe. The deployer (the user or business) is usually held responsible for "Output" issues. What makes this hard is that different claims can land on different actors:

Copyright: Hits the Developer for training data.

Defamation: Hits the Deployer who published the AI's false statement.

Consumer Protection: Hits the Business if their AI makes a false promise or "hallucinates" a refund policy.

Product Liability: Hits the Developer if the software "engine" itself is found to be a defective product.

While the EU AI Act and various U.S. state laws have started to provide rules, they are still "living documents." Many global jurisdictions still rely on "Legacy Laws" (laws written in the 1970s-90s) to try to regulate technology from 2025. This uncertainty is one of the biggest challenges for businesses.

Generative AI picks up biases from its training data, since large language models are trained on public data that often contains bias. This can make existing societal prejudices even stronger.

Major generative models still show demographic and representational bias, even after attempts to fix it. Most leading AI companies, such as OpenAI, Microsoft, Google, Meta, Stability AI, Midjourney, and Anthropic, have also faced several copyright and related lawsuits.

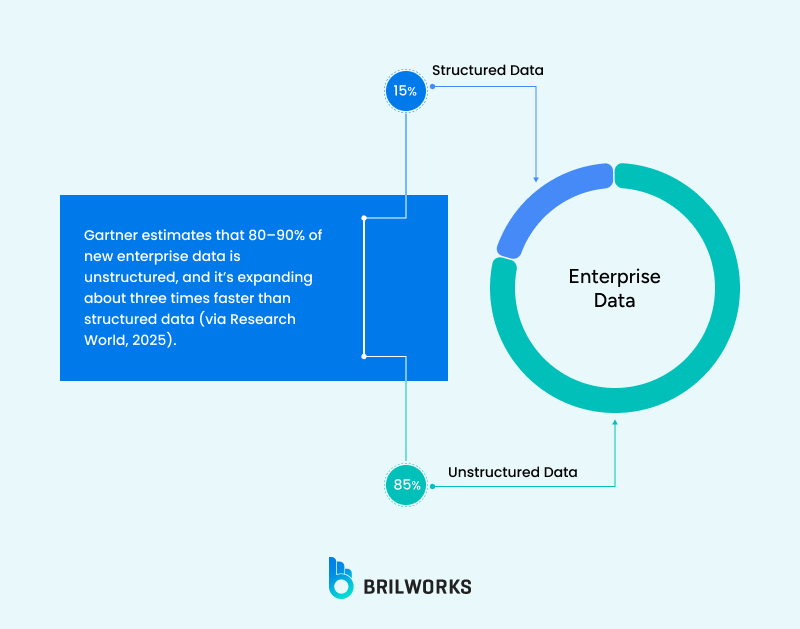

"Integration" here refers to the difficulty of connecting modern AI with legacy (old) infrastructure. In addition, most businesses struggle to feed their unstructured data (PDFs, emails, reports) into AI models in a way that produces consistent, reliable results.

Industry reports say that 80 to 90% of enterprise data is unstructured, and more than 70% of organizations struggle to use this data effectively.
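To make the unstructured-data problem concrete, here is a minimal sketch of one common first step: turning a raw email into a record with a fixed set of fields before it is passed to any AI system. It assumes Python and its standard-library `email` package. The function name `email_to_record`, the field names, and the sample message are hypothetical illustrations and do not come from the reports cited here.

```python
from email import message_from_string
from email.utils import parseaddr, parsedate_to_datetime

# Hypothetical sample message, used only for illustration.
SAMPLE = (
    "From: Dana Lee <Dana.Lee@example.com>\n"
    "Subject:  Refund request \n"
    "Date: Mon, 06 Jan 2025 09:30:00 +0000\n"
    "\n"
    "Hi team,\n\nPlease refund   order 1182.\n"
)


def email_to_record(raw: str) -> dict:
    """Convert one raw email into a record with a fixed schema."""
    msg = message_from_string(raw)

    # Prefer the first plain-text part when the message is multipart.
    # Note: this sketch does not decode base64 or quoted-printable bodies.
    if msg.is_multipart():
        parts = [p for p in msg.walk() if p.get_content_type() == "text/plain"]
        body = parts[0].get_payload() if parts else ""
    else:
        body = msg.get_payload()

    date_header = msg.get("Date")
    return {
        "source_type": "email",
        # Keep only the address and lowercase it so senders compare equal.
        "sender": parseaddr(msg.get("From", ""))[1].lower(),
        "subject": (msg.get("Subject") or "").strip(),
        # ISO 8601 timestamps are easier to sort and filter downstream.
        "sent_at": parsedate_to_datetime(date_header).isoformat()
        if date_header
        else None,
        # Collapse runs of whitespace so the same text always looks the same.
        "body": " ".join(body.split()),
    }


record = email_to_record(SAMPLE)
```

The idea is that every document, whatever its original layout, reaches the model in the same shape. PDFs and reports would need their own extractors, which are outside the scope of this sketch.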

A Teradata–IDC survey of 900 senior executives found that most believe rising data complexity is making it harder to put generative AI into practice. Many expect this complexity to stay the same or get worse over the next two years.

Even the most advanced models face the "Control-Performance Trade-off." The more "guardrails" a company puts on an AI to ensure safety, the less creative and helpful the model often becomes. Furthermore, "hallucinations" (where the AI confidently states false information) remain a persistent technical barrier that prevents full automation.

There is a major paradox in today's tech world. Mass layoffs have become a daily headline in the IT sector, reaching scales rarely seen in history, yet businesses are also worried about a widening talent gap. AI is rewriting the skill requirements for the modern workforce.

Modern professionals are now expected to be "AI-literate," with a surge in demand for Prompt Engineers and "Vibe Coders," individuals who can co-author code and content with AI. Recent data confirms this "AI Readiness" gap is the primary barrier to business growth:

A 2025 Protiviti survey of 1,540 board members and C-suite professionals reveals that while leaders are embracing AI, they are facing an "existential talent crisis."

According to research from IDC and Workera, over 90% of global enterprises are projected to face a "critical skills shortage" by 2026. This gap is estimated to cost the global economy up to $5.5 trillion.

For 46% of organizations, the lack of talent is the #1 barrier to AI implementation. Interestingly, industry leaders rank the talent shortage above data privacy, poor data quality, and the high cost of implementation.

While the "hype" surrounding AI adoption shows no signs of slowing, the harsh financial reality is that very few enterprises are actually profiting from their AI investments. There is a massive disconnect between AI's perceived potential and its realized value.

A report from BCG reveals a stark "value gap." Only about 5% of companies are currently generating returns, and nearly 60% of organizations are struggling to see any meaningful impact from their AI initiatives. This lack of clear ROI is one of the most significant "walls" for

Even with ongoing challenges like unclear ROI, talent gaps, legal uncertainty, and bias, AI adoption continues to grow. We are now in a new era where problems that once took years to solve have become routine tasks. Building software, which used to be a major skill, is no longer the main focus.

Now, builders and innovators are focusing on a new area: creating the logic that powers the digital world. As AI takes over repetitive tasks by using business data, people are needed to design the rules and logic that guide it.

Legal, ethical, and financial challenges do not mean that AI is just hype. The businesses that succeed in the coming years will be the ones that learn how to handle these challenges, and, when needed, partner with experienced AI teams who can help them move from ideas to working solutions.

Get In Touch

Contact us for your software development requirements
