In recent years, AI adoption has grown rapidly, and AI development has picked up speed along with it. AI and machine learning tools now serve millions of users. But how do you build AI applications as reliable as today's popular chatbots? For that, you need to choose the right programming language for the job.

While Python gets most of the attention, Java is evolving in AI and has become the backbone of many machine learning systems across industries. Its vast ecosystem includes robust AI libraries and frameworks that help you develop efficiently.

Java is particularly known for its enterprise development capabilities and robust security. The Java developer community keeps growing, and a new Java version is released every six months. Java's three-decade focus on portability, reliability, and a vast enterprise ecosystem has made it a consistent, and quietly expanding, choice for artificial-intelligence work. According to reports published by Oracle, more than 37% of developers use Java for machine learning applications.

In this article, we will explore the role of Java in AI & ML. We’ll discuss how Java is utilized to develop intelligent systems, explore the frameworks worth knowing, and examine its role in contemporary AI architectures. We’ll also discuss how it compares with Python in practical terms, and what the future might hold as Java in machine learning continues to gain ground.

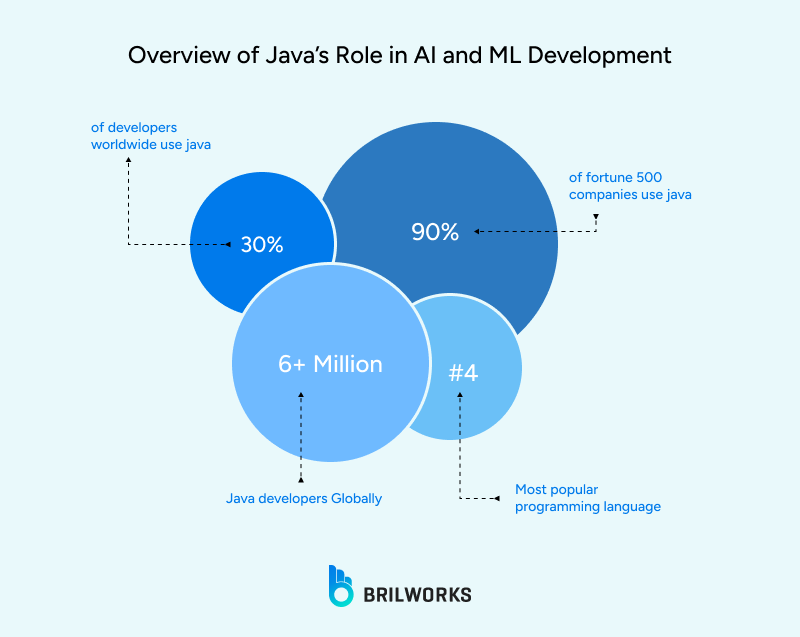

Java has been around for nearly three decades and has grown considerably over that time. In the age of AI, it remains relevant because of its capabilities and features. Millions of developers worldwide use Java, and it consistently ranks among the most popular programming languages in the world (fourth, by some rankings). In a later section, we will dive deeper into why Java is a good choice for AI. For now, let's get an overview of how reliable Java is in AI development.

While Python has dominated AI headlines, Java has quietly established itself as a reliable language for production AI systems. It became a mainstay of enterprise applications from the late 1990s onward. As organizations realized that experimental AI models had to scale into robust, enterprise-grade systems, Java's proven track record with exactly that kind of complex, large-scale workload made it a natural choice.

The turning point came around 2015-2016, when big data platforms like Apache Hadoop and Spark gained widespread adoption. Companies soon found themselves processing massive datasets on infrastructure built on Java and the JVM. This created a bridge between traditional enterprise systems and the emerging world of machine learning.

Today, roughly 30% of developers worldwide use Java, which means millions of developers are already familiar with its ecosystem. While Python dominates in academic research and prototyping, Java has carved out a substantial niche in production AI environments, particularly within enterprise settings.

Unlike many Python AI projects, which are experimental by nature, Java-based AI solutions focus on production-ready, scalable implementations that integrate with existing business infrastructure. This positioning has made Java a preferred choice for production AI and ML development.

Over 90% of Fortune 500 companies use Java in their technology stacks. Here are some of the most prominent examples:

While Google is closely associated with Python-based tools like TensorFlow, it also uses Java in AI workflows. Google uses Java within its Cloud Machine Learning Engine and for services such as Cloud Vision (image recognition) and Cloud Speech-to-Text (speech recognition).

Netflix, known for its seamless user experience, relies on Java to support its machine learning infrastructure. The platform processes massive volumes of real-time data with JVM-based tools like Apache Spark and Kafka Streams to generate personalized recommendations.

Java plays a key role in LinkedIn’s recommendation algorithms. The platform uses Apache Mahout, a Java-based machine learning library, to suggest job listings, connections, and skills that align with a user’s profile and behavior patterns.

Within its cloud ecosystem, Amazon uses Java in Amazon SageMaker, a managed machine learning service that simplifies building, training, and deploying ML models. Java's robustness and enterprise-grade performance make it a dependable choice for large-scale AI workloads.

IBM’s well-known Watson AI platform incorporates machine learning models built with Java. Developers can leverage this Java-powered framework to create AI solutions across various industries, ranging from healthcare to finance, with a strong focus on accuracy and scalability.

In Java, every variable has a static data type (like int, double, String, etc.), declared explicitly or, for local variables since Java 10, inferred with the var keyword. The compiler checks types at compile time, so most type mismatches are caught before the code ever runs. That is valuable in AI development, where a type error that only surfaces hours into a training run is expensive.

Type safety like this can reduce ambiguity in code behavior and make large-scale systems more maintainable. Clear types make it easier for teams to collaborate, especially in distributed systems or hybrid models.
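A minimal sketch of what this looks like in practice, assuming a hypothetical fraud-scoring input (Transaction, Prediction, and the scoring rule are illustrative, not taken from any library). The compiler rejects a call with the wrong argument type outright; value-range rules such as a valid hour of day cannot be expressed in the type system, so they are checked at runtime in the record's compact constructor.

```java
// Hypothetical typed input for a fraud-scoring model; field names are illustrative.
record Transaction(String accountId, double amount, int hourOfDay) {
    // Compact constructor: runtime range checks that the type system cannot express.
    Transaction {
        if (amount < 0) throw new IllegalArgumentException("amount must be non-negative");
        if (hourOfDay < 0 || hourOfDay > 23) throw new IllegalArgumentException("hourOfDay must be 0-23");
    }
}

// Hypothetical typed output: a label plus the score that produced it.
record Prediction(String label, double score) {}

final class TypedScoring {
    // The signature is the contract: a Transaction goes in, a Prediction comes out.
    // Calling score("acct-42") or score(new double[] {7500, 3}) does not compile.
    static Prediction score(Transaction tx) {
        double s = Math.min(1.0, tx.amount() / 10_000.0);
        if (tx.hourOfDay() < 6) s = Math.min(1.0, s + 0.2); // arbitrary night-time bump
        return new Prediction(s >= 0.5 ? "review" : "ok", s);
    }

    public static void main(String[] args) {
        System.out.println(score(new Transaction("acct-42", 7_500.0, 3)));
        // score("acct-42"); // does not compile: a String is not a Transaction
    }
}
```

Records keep the feature schema explicit and immutable, so teams sharing the model code see exactly which fields a scorer expects.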

AI applications often need to run on many kinds of devices, and keeping their behavior consistent across those devices is a substantial task on its own. Java's "write once, run anywhere" principle ensures consistent behavior across operating systems, which simplifies deploying AI models across cloud, desktop, and mobile platforms.

Java is known for its security, and its architecture protects AI applications from many common classes of vulnerabilities. For example, before execution, the JVM verifies bytecode to ensure it doesn't perform illegal operations, and at runtime it bounds-checks every array access and manages memory automatically. Together, these rule out buffer overflows, stack corruption, and other memory-safety vulnerabilities that could compromise AI systems.

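Java's ClassLoader architecture also gives loaded code separate namespaces. Older Java releases added a Security Manager that could sandbox untrusted code and keep it away from sensitive system resources, but it was deprecated for removal in Java 17, so new systems usually rely on containers and operating-system isolation for sandboxing instead.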

When you launch an AI app, your main concern is not just to build a model but also to scale it. Java has features like multithreading and mature garbage collection that can allow your AI models to handle high-throughput workloads. Java also integrates well with distributed systems like:

Apache Hadoop and Spark (via Java APIs)

Kafka for real-time data streaming

HDFS and Cassandra for large-scale storage

This makes it a practical option for end-to-end AI pipelines, where data handling is just as important as model accuracy.
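As a rough illustration of the multithreading point, the sketch below scores a batch of inputs in parallel on a fixed thread pool sized to the available CPUs. The linear model and its weights are hypothetical stand-ins for a real trained model; the pattern that carries over is a model that is never modified after construction, shared by all threads, with each task writing its own slice of the output.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ParallelScoring {
    // Hypothetical linear model: score = w . x + b. It is never mutated after
    // construction, so all worker threads can share one instance safely.
    record LinearModel(double[] weights, double bias) {
        double predict(double[] x) {
            double s = bias;
            for (int i = 0; i < weights.length; i++) s += weights[i] * x[i];
            return s;
        }
    }

    // Splits the batch into roughly equal chunks and scores each chunk on its own task.
    static double[] scoreBatch(LinearModel model, List<double[]> batch, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            double[] out = new double[batch.size()];
            int chunk = Math.max(1, (batch.size() + threads - 1) / threads);
            List<Future<?>> pending = new ArrayList<>();
            for (int start = 0; start < batch.size(); start += chunk) {
                final int from = start;
                final int to = Math.min(batch.size(), start + chunk);
                // Tasks write disjoint index ranges of `out`, so no locking is needed.
                pending.add(pool.submit(() -> {
                    for (int i = from; i < to; i++) out[i] = model.predict(batch.get(i));
                }));
            }
            // Future.get() waits for each task and makes its writes visible to this thread.
            for (Future<?> f : pending) {
                try {
                    f.get();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException(e);
                } catch (ExecutionException e) {
                    throw new IllegalStateException(e.getCause());
                }
            }
            return out;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        LinearModel model = new LinearModel(new double[] {0.5, -1.0}, 0.1);
        List<double[]> batch = List.of(new double[] {2, 1}, new double[] {0, 0}, new double[] {4, 1});
        int threads = Runtime.getRuntime().availableProcessors();
        System.out.println(Arrays.toString(scoreBatch(model, batch, threads)));
    }
}
```

Sizing the pool from Runtime.availableProcessors() is a common default for CPU-bound scoring; I/O-bound inference (for example, calling a remote model) would typically use more threads.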

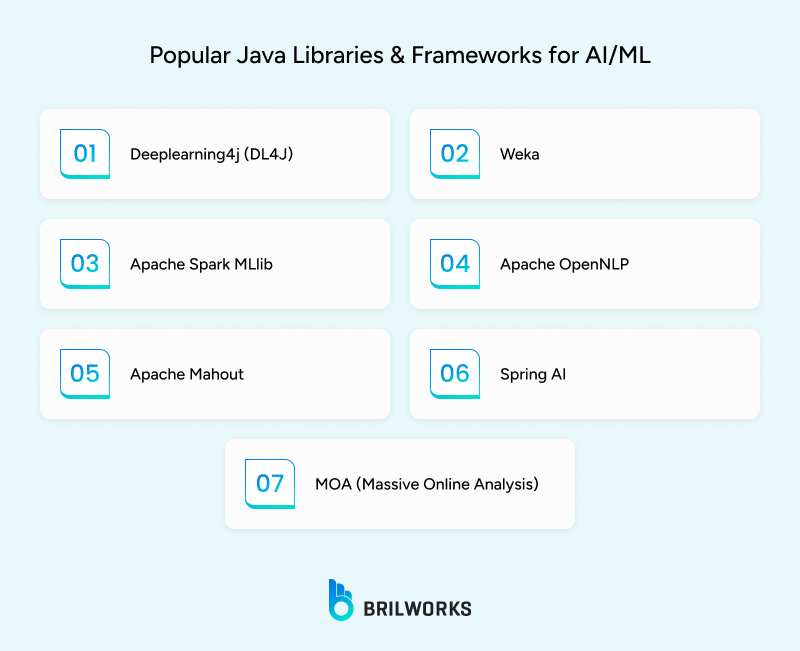

While Java might not offer as many machine learning libraries as Python, it features a focused and production-oriented ecosystem that’s trusted in enterprise settings. Some standout tools include:

Deeplearning4j – Deep learning framework for the JVM with support for GPUs and integration with big data platforms like Spark and Hadoop.

ND4J – A high-performance, N-dimensional array library (similar to NumPy for Python), used for numerical computing and powering DL4J's operations.

MOA (Massive Online Analysis) – A framework specialized in real-time learning and data stream mining, ideal for continuous model updates.

Weka – A long-established and easy-to-use machine learning toolkit that supports classification, clustering, regression, and more through a user-friendly interface and API.

These libraries aren't experimental; they’re actively used in real-world applications and supported by engaged communities, making Java a solid choice for scalable and reliable machine learning projects.

Java's development ecosystem is mature, stable, and well supported. IDEs like IntelliJ IDEA and Eclipse offer strong support for AI projects: smart auto-complete for ML libraries, visual debuggers, and breakpoints that let you inspect variables inside a running training loop.

When multiple developers work on different parts of the same model, code readability and structure matter. Java's strong typing and clear interfaces help teams understand and build on each other's work without confusion.

The strength of Java in AI and machine learning development lies not just in the language itself, but in the mature and evolving ecosystem that surrounds it. Java supports a wide range of use cases through a diverse set of tools. Here are some of the most impactful libraries and frameworks shaping the Java AI and ML landscape.

Deeplearning4j (DL4J) is one of the few deep learning frameworks built natively for the Java ecosystem. It supports training and deploying neural networks using Java or Kotlin, and builds on companion libraries such as ND4J (for n-dimensional arrays) and SameDiff (for defining computational graphs).

Good at: Building deep learning models like convolutional or recurrent neural networks using Java. It supports distributed training via Apache Spark and runs on CPUs or GPUs with CUDA support. There's also a small model zoo for common use cases like image classification and sentiment analysis.

Where it struggles: The community is small, and updates have slowed. Many newer AI breakthroughs (transformers, diffusion models, etc.) are either missing or poorly supported. The syntax is more verbose than what you’d find in PyTorch or Keras.

Use it when: You’re deploying in enterprise environments where Java is standard, need JVM compatibility, and don’t want to rework infrastructure just to run models. It’s also a fit when you're already using Spark for data pipelines.

Weka has been around since the 1990s and is widely used for teaching and research. It provides a collection of classic machine learning algorithms with a GUI for experimenting.

Good at: Quickly testing out algorithms like decision trees, k-NN, Naive Bayes, and logistic regression. It also includes tools for data prep, feature selection, and evaluation. The GUI makes it accessible to non-programmers or for quick comparisons.

Where it struggles: Most of its algorithms expect the entire dataset to fit in memory (only some classifiers support incremental updates), so it breaks down with larger datasets. It doesn't support modern deep learning, and its model deployment story is weak, especially for production environments.

Use it when: You’re prototyping, teaching, or doing research with small to medium-sized datasets. It’s also a good sandbox for testing preprocessing steps or evaluating model accuracy before committing to something more robust.
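To make the algorithm list concrete, here is a plain-Java sketch of k-nearest neighbours, one of the classic methods Weka offers (as its IBk classifier). This is not Weka's API; it is a self-contained illustration of the algorithm under simple assumptions (numeric features, Euclidean distance, majority vote), and it also shows why such methods keep the full training set in memory.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java k-nearest-neighbours classifier (illustrative; not Weka's API).
final class KnnClassifier {
    record Example(double[] features, String label) {}

    private final List<Example> training = new ArrayList<>();
    private final int k;

    KnnClassifier(int k) {
        if (k < 1) throw new IllegalArgumentException("k must be at least 1");
        this.k = k;
    }

    // "Training" just stores the example: k-NN keeps the whole dataset in memory.
    void add(double[] features, String label) {
        training.add(new Example(features, label));
    }

    // Predicts by majority vote among the k stored examples closest to the query.
    String classify(double[] query) {
        List<Example> nearest = training.stream()
                .sorted(Comparator.comparingDouble((Example e) -> distance(e.features(), query)))
                .limit(k)
                .toList();
        Map<String, Integer> votes = new HashMap<>();
        String best = null;
        int bestVotes = 0;
        // Neighbours are visited nearest-first, so on a tie the label that reached
        // the winning count first (i.e. with closer neighbours) is kept.
        for (Example e : nearest) {
            int v = votes.merge(e.label(), 1, Integer::sum);
            if (v > bestVotes) {
                best = e.label();
                bestVotes = v;
            }
        }
        return best; // null only if no training data has been added
    }

    private static double distance(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }
}
```

Every prediction scans all stored examples, which is fine for the small to medium datasets Weka targets and is exactly what stops scaling once data outgrows memory.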

MLlib is the machine learning library in Apache Spark. While Spark is written in Scala, MLlib has solid Java bindings and is often used in enterprise settings that already rely on Spark for data processing.

Good at: Building end-to-end machine learning pipelines for very large datasets. It supports classification, regression, clustering, recommendation systems, and model evaluation. MLlib is also useful when combining structured data with real-time streaming sources via Spark Structured Streaming.

Where it struggles: Flexibility is limited. It’s not ideal for complex or custom ML architectures. Training deep learning models is outside its scope unless paired with external tools. And Spark’s distributed overhead can be unnecessary for smaller jobs.

Use it when: You already use Spark for your data pipelines and want to embed ML into that flow. It’s especially useful for batch processing on huge datasets, recommendation systems, and stream-based inference at scale.

OpenNLP is a basic Java library for natural language processing. It focuses on core language tasks, not cutting-edge language models.

Good at: Handling standard NLP tasks like tokenization, sentence splitting, part-of-speech tagging, named entity recognition, and parsing. It works out of the box and can be retrained with your own data if needed.

Where it struggles: Its core models are classic statistical ones (maximum entropy and perceptron), not transformers, embeddings, or other contextual models. Accuracy is decent for simple tasks but falls short of modern deep learning NLP libraries.

Use it when: You need basic NLP functionality inside a Java application—such as tagging text fields, parsing sentences, or extracting names—and don’t want to depend on external services or Python bridges.
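For a sense of what sentence splitting and tokenization involve, the JDK itself ships a rule-based segmenter, java.text.BreakIterator. The sketch below uses it; this is not the OpenNLP API, and unlike OpenNLP's trained models it applies fixed boundary rules, so it can mis-split text around abbreviations.

```java
import java.text.BreakIterator;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// JDK-only sentence splitting and word tokenization (illustrative; not OpenNLP).
final class SimpleTokenizer {
    static List<String> sentences(String text) {
        BreakIterator it = BreakIterator.getSentenceInstance(Locale.ENGLISH);
        it.setText(text);
        List<String> out = new ArrayList<>();
        // Walk consecutive boundary pairs; each [start, end) span is one sentence.
        for (int start = it.first(), end = it.next(); end != BreakIterator.DONE; start = end, end = it.next()) {
            String s = text.substring(start, end).trim();
            if (!s.isEmpty()) out.add(s);
        }
        return out;
    }

    static List<String> words(String text) {
        BreakIterator it = BreakIterator.getWordInstance(Locale.ENGLISH);
        it.setText(text);
        List<String> out = new ArrayList<>();
        for (int start = it.first(), end = it.next(); end != BreakIterator.DONE; start = end, end = it.next()) {
            String token = text.substring(start, end);
            // Word segments start with a letter or digit; skip whitespace and punctuation.
            if (Character.isLetterOrDigit(token.codePointAt(0))) out.add(token);
        }
        return out;
    }
}
```

Swapping this for OpenNLP's trained sentence detector and tokenizer is mainly a matter of accuracy on messy text; the calling code's shape (text in, list of spans out) stays similar.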

Mahout was one of the early Java ML libraries focused on scalable algorithms, especially in the Hadoop era. It’s still useful in specific big-data workflows but has lost ground to Spark MLlib.

Good at: Performing large-scale recommendation, classification, and clustering tasks across distributed systems. It has a math environment for linear algebra and can integrate with Apache Flink or Spark for scalability.

Where it struggles: The project has slowed down, and documentation is hit-or-miss. Compared to more modern libraries, it feels heavy and less flexible. Also, many features are now better supported in Spark MLlib.

Use it when: You’re working in a Hadoop-based stack or maintaining legacy systems that already use Mahout. It’s also viable when building large-scale recommender systems in Java.

Spring AI is a newer project from the Spring ecosystem aimed at integrating AI features into modern Java applications—especially LLMs and generative AI.

Good at: Connecting to external AI services like OpenAI, Azure OpenAI, and Google AI. It handles prompts, embeddings, and vector store queries, with support for tools like PostgreSQL, Redis, and Pinecone.

Where it struggles: It’s not meant for training models. You’re essentially building wrappers around hosted APIs, so performance, latency, and model behavior depend on external providers.


Use it when: You want to integrate LLM-based features (like summarization, Q&A, chat interfaces) into a Spring Boot app without switching to Node.js or Python. It’s a practical fit for internal tools, chatbots, or hybrid AI + enterprise applications.

MOA is built for data stream mining—i.e., learning in real time from continuous data, where traditional batch models don’t work well.

Good at: Building online learning models for classification, regression, clustering, and anomaly detection. It supports concept drift handling and pairs well with real-time monitoring or transactional systems.

Where it struggles: Not designed for deep learning or complex feature engineering. It assumes the data arrives in a stream and can’t be revisited, which limits some types of processing and tuning.

Use it when: You need machine learning that adapts continuously—like fraud detection in payment systems, predictive maintenance, or monitoring high-frequency data streams.
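The core idea behind stream learners like MOA's can be sketched in a few lines: a model that updates on each example as it arrives and is evaluated "test-then-train" (prequentially), predicting each item before learning from it. This is a plain-Java online logistic regression, not MOA's API or one of its algorithms (MOA itself offers learners such as Hoeffding trees). The learning rate and the synthetic stream, whose labelling rule flips halfway through to stand in for concept drift, are illustrative assumptions.

```java
import java.util.Random;

// Online logistic regression trained by per-example SGD (illustrative; not MOA's API).
final class OnlineLogistic {
    private final double[] w;
    private double b;
    private final double learningRate;

    OnlineLogistic(int dims, double learningRate) {
        this.w = new double[dims];
        this.learningRate = learningRate;
    }

    double probability(double[] x) {
        double z = b;
        for (int i = 0; i < w.length; i++) z += w[i] * x[i];
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // One SGD step on the log-loss for a single example (label 0 or 1); the example is
    // not stored. A constant learning rate keeps the model able to follow drift.
    void update(double[] x, int label) {
        double error = probability(x) - label; // d(log-loss)/dz
        for (int i = 0; i < w.length; i++) w[i] -= learningRate * error * x[i];
        b -= learningRate * error;
    }

    // Prequential pass: predict each item, score the prediction, then train on it.
    // Returns accuracy over the last `window` items of the stream.
    static double prequential(OnlineLogistic model, double[][] xs, int[] ys, int window) {
        int correct = 0, counted = 0;
        for (int t = 0; t < xs.length; t++) {
            int predicted = model.probability(xs[t]) >= 0.5 ? 1 : 0;
            if (t >= xs.length - window) {
                counted++;
                if (predicted == ys[t]) correct++;
            }
            model.update(xs[t], ys[t]);
        }
        return counted == 0 ? 0.0 : (double) correct / counted;
    }

    public static void main(String[] args) {
        Random rnd = new Random(7);
        OnlineLogistic model = new OnlineLogistic(2, 0.5);
        for (int phase = 0; phase < 2; phase++) {
            double[][] xs = new double[2000][];
            int[] ys = new int[2000];
            for (int t = 0; t < xs.length; t++) {
                xs[t] = new double[] {rnd.nextDouble() * 2 - 1, rnd.nextDouble() * 2 - 1};
                boolean aboveLine = xs[t][0] + xs[t][1] > 0;
                // Phase 1 simulates concept drift: the labelling rule is inverted.
                ys[t] = (phase == 0) == aboveLine ? 1 : 0;
            }
            System.out.printf("phase %d: accuracy on last 500 items = %.3f%n",
                    phase, prequential(model, xs, ys, 500));
        }
    }
}
```

Because the model never revisits old data and keeps a fixed-size state, memory use stays constant no matter how long the stream runs, which is the property stream-mining frameworks are built around.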

Java’s ecosystem is both deep and diverse. As organizations seek to align AI initiatives with existing enterprise infrastructure, these tools make a compelling case for using Java for ML in production-grade systems.

Today's AI systems are more distributed, cloud-native, and designed for continuous deployment and scaling. Java's enterprise heritage makes it well suited to these modern architectural patterns.

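Java AI applications run well in containerized environments, where consistency and portability are paramount. Since JDK 10, the JVM has been container-aware: it sizes its default heap from the container's memory limit and reports the container's CPU limit to the application, which keeps resource usage predictable inside Docker containers. Startup time, traditionally a JVM weak spot, is one of the things frameworks like Quarkus set out to improve.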

Moreover, Kubernetes orchestration works seamlessly with Java AI services. Companies like Netflix deploy thousands of Java-based recommendation microservices across Kubernetes clusters to handle traffic during peak viewing hours. Serverless AI deployment has also become increasingly viable with Java through frameworks like Quarkus and Spring Boot optimized for cloud functions.

Java's strength in enterprise CI/CD pipelines extends naturally to MLOps workflows. Development teams can integrate AI model training, validation, and deployment into existing Jenkins or GitLab pipelines without learning new toolchains.

Furthermore, model versioning and deployment strategies benefit from Java's mature artifact management. Teams use Maven repositories to version and distribute trained models alongside application code, enabling synchronized deployments where model updates and application logic remain consistent across environments.
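One way this can look in code, sketched under assumptions: the trained model file lives under src/main/resources/models/ in a Maven module (Maven's standard resources directory, whose contents are copied into the jar), so it is versioned and published together with the code, and the application reads it from the classpath at startup. The resource path below is hypothetical, and turning the bytes into a usable model depends on the ML library in use.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.Optional;

final class ModelLoader {
    // Hypothetical location of a model file packaged in the application jar.
    static final String MODEL_PATH = "/models/fraud-model.bin";

    // Reads a classpath resource; empty if the resource is not packaged.
    static Optional<byte[]> loadModelBytes(String resourcePath) {
        // A leading "/" makes the path absolute from the classpath root.
        try (InputStream in = ModelLoader.class.getResourceAsStream(resourcePath)) {
            if (in == null) return Optional.empty(); // close() is skipped for a null resource
            return Optional.of(in.readAllBytes());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        loadModelBytes(MODEL_PATH).ifPresentOrElse(
                bytes -> System.out.println("Loaded model: " + bytes.length + " bytes"),
                () -> System.out.println("No model packaged at " + MODEL_PATH));
    }
}
```

Because the model ships inside the same versioned artifact as the code that reads it, a given application version always loads the model it was built and tested with.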

Modern AI systems increasingly rely on real-time data streams for both inference and continuous learning. Java's integration with Apache Kafka enables sophisticated event-driven architectures where AI models process and respond to data in real-time.

Streaming ML pipelines built with Kafka Streams and Apache Flink allow organizations to implement continuous learning systems. E-commerce platforms use this pattern to update recommendation models in real-time as users browse and purchase, ensuring that AI predictions remain current with changing user behavior.
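The event-driven pattern can be sketched without a broker: below, an in-memory BlockingQueue stands in for a Kafka topic, a consumer thread updates a simple model on every event, and queries see the model as of the events processed so far. This illustrates the pattern only; it is not the Kafka Streams or Flink API, and the click events and the popularity "model" are hypothetical simplifications of a real recommender.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

final class StreamingPopularity {
    record ClickEvent(String userId, String itemId) {}

    // Sentinel instance that marks the end of the stream (compared by identity).
    private static final ClickEvent END_OF_STREAM = new ClickEvent("", "");

    // The "model": running click counts per item, updated incrementally per event.
    private final Map<String, Long> counts = new ConcurrentHashMap<>();

    // Consumer loop: apply each event as soon as it arrives.
    private void consume(BlockingQueue<ClickEvent> topic) throws InterruptedException {
        for (ClickEvent e = topic.take(); e != END_OF_STREAM; e = topic.take()) {
            counts.merge(e.itemId(), 1L, Long::sum);
        }
    }

    // Publishes events from the calling thread while a consumer thread processes them.
    void process(List<ClickEvent> events) {
        BlockingQueue<ClickEvent> topic = new LinkedBlockingQueue<>();
        Thread consumer = new Thread(() -> {
            try {
                consume(topic);
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            for (ClickEvent e : events) topic.put(e);
            topic.put(END_OF_STREAM);
            consumer.join();
        } catch (InterruptedException ex) {
            consumer.interrupt();
            Thread.currentThread().interrupt();
            throw new IllegalStateException(ex);
        }
    }

    // Current best guess for "what to recommend next": the most-clicked item so far.
    Optional<String> mostPopular() {
        return counts.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }
}
```

In a real deployment the queue would be a Kafka topic and the counts would live in a fault-tolerant state store, but the shape is the same: events flow in, state updates immediately, and predictions read the latest state.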

Java's microservices ecosystem aligns perfectly with AI-as-a-Service architectural patterns. Individual AI models are deployed as independent services that can be scaled, updated, and maintained separately from the broader application ecosystem.

API gateway patterns enable organizations to expose AI capabilities through standardized REST APIs while abstracting the underlying implementation complexity. Healthcare organizations use this approach to provide AI diagnostic services to multiple client applications while maintaining strict access controls and audit trails.
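A minimal sketch of one such independently deployable model service, using only the JDK's built-in com.sun.net.httpserver package so it runs without a framework (a production service would more likely use Spring Boot or Quarkus behind the gateway). The /predict path, the amount query parameter, and the threshold rule are hypothetical; authentication and audit logging, which the gateway or the service would add, are left out.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

// A model exposed as its own small HTTP service (illustrative).
final class PredictService {
    // Stand-in model: flags amounts above a fixed, hypothetical threshold.
    static String predict(double amount) {
        return amount > 5_000 ? "review" : "ok";
    }

    // Starts the service; pass port 0 to let the OS pick a free port.
    static HttpServer start(int port) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
            server.createContext("/predict", exchange -> {
                // Expects a query such as ?amount=1234.5; anything else gets a 400.
                String query = exchange.getRequestURI().getQuery();
                int status;
                String body;
                try {
                    double amount = Double.parseDouble(query.replaceFirst("^amount=", ""));
                    status = 200;
                    body = "{\"label\":\"" + predict(amount) + "\"}";
                } catch (RuntimeException e) { // null query or unparsable number
                    status = 400;
                    body = "{\"error\":\"expected ?amount=<number>\"}";
                }
                byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
                exchange.getResponseHeaders().set("Content-Type", "application/json");
                exchange.sendResponseHeaders(status, bytes.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(bytes);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        start(8080);
        System.out.println("Listening; try http://localhost:8080/predict?amount=7500");
    }
}
```

Keeping the model behind a narrow HTTP contract is what lets the gateway route, throttle, and audit calls without knowing how the model is implemented or which version is deployed.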

Java's platform independence becomes particularly valuable in hybrid cloud architectures where AI models need to run consistently across on-premises data centers, public clouds, and edge devices.

Multi-cloud strategies benefit from Java's consistent runtime behavior across different cloud providers. Organizations avoid vendor lock-in by deploying the same Java AI applications across AWS, Azure, and Google Cloud, using cloud-native services for scaling and management while maintaining application portability.

The theoretical benefits of Java for AI development become most compelling when demonstrated through real-world implementations. So, let's take a look at how enterprises are putting Java AI into practice.

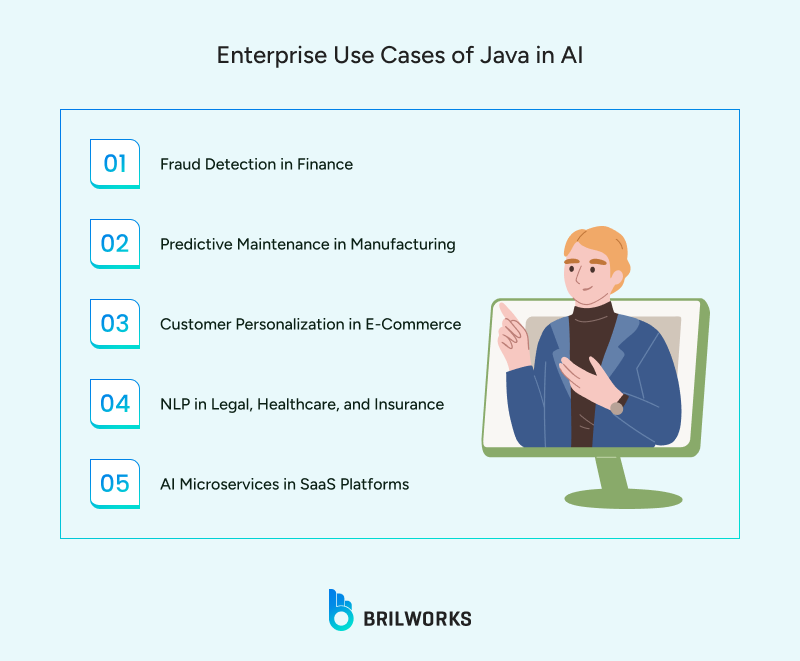

Banks and fintech companies use Java AI systems to detect suspicious patterns in real time. Libraries like Deeplearning4j integrate with big data tools (Kafka, Spark) to build scalable fraud detection pipelines.
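As a hedged illustration of the real-time side of such a pipeline, here is a per-account detector that keeps a running mean and variance (Welford's online algorithm) and flags amounts far from the account's history. It is a simple statistical stand-in, not how Deeplearning4j works; the 3-standard-deviation threshold and the warm-up length are assumptions for the example.

```java
// Streaming z-score detector using Welford's online mean/variance (illustrative).
final class AmountAnomalyDetector {
    private final double threshold; // flag when |z| exceeds this many standard deviations
    private final int warmUp;       // observations to collect before flagging anything
    private long n;
    private double mean;
    private double m2;              // running sum of squared deviations from the mean

    AmountAnomalyDetector(double threshold, int warmUp) {
        if (warmUp < 2) throw new IllegalArgumentException("warmUp must be at least 2");
        this.threshold = threshold;
        this.warmUp = warmUp;
    }

    // Scores the amount against the history so far, then adds it to the history.
    // Design choice: anomalies are folded in too; a real system might exclude them.
    boolean observe(double amount) {
        boolean anomalous = false;
        if (n >= warmUp) {
            double std = Math.sqrt(m2 / (n - 1)); // sample standard deviation
            anomalous = std > 0 && Math.abs(amount - mean) / std > threshold;
        }
        n++;
        double delta = amount - mean;
        mean += delta / n;
        m2 += delta * (amount - mean); // Welford update uses the old and the new mean
        return anomalous;
    }
}
```

Each update is constant time and constant memory per account, so one detector per account can sit directly in a Kafka or Spark consumer without storing transaction history.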


Java works well with IoT sensor data and streaming platforms to predict equipment failure. Tools like MOA (for real-time stream learning) and Mahout (for large-scale batch analysis) help companies avoid costly downtime with machine learning models built in Java.

Java is used to power recommendation engines and dynamic pricing models, especially where performance and scale matter. Spark MLlib and Mahout are often used for collaborative filtering at scale.
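Collaborative filtering itself is easy to sketch. The example below is item-based filtering with cosine similarity over a small in-memory rating map: two items are similar when the same users rated them, and a user is recommended the unrated item most similar to what they already rated. It is a plain-Java illustration, not the Spark MLlib or Mahout API (MLlib's built-in recommender uses ALS matrix factorization instead); the nested-map data layout is an assumption.

```java
import java.util.Map;
import java.util.Optional;
import java.util.Set;
import java.util.TreeSet;

// Item-based collaborative filtering with cosine similarity (illustrative).
// ratings: userId -> (itemId -> rating); a missing entry means "not rated".
final class ItemBasedRecommender {
    // Cosine similarity between two items' rating vectors across all users.
    static double cosine(Map<String, Map<String, Double>> ratings, String itemA, String itemB) {
        double dot = 0, normA = 0, normB = 0;
        for (Map<String, Double> userRatings : ratings.values()) {
            Double a = userRatings.get(itemA);
            Double b = userRatings.get(itemB);
            if (a != null) normA += a * a;
            if (b != null) normB += b * b;
            if (a != null && b != null) dot += a * b;
        }
        return (normA == 0 || normB == 0) ? 0 : dot / Math.sqrt(normA * normB);
    }

    // Scores each item the user has not rated by its similarity to the user's rated
    // items, weighted by the user's ratings, and returns the best positive score.
    static Optional<String> recommend(Map<String, Map<String, Double>> ratings, String user) {
        Map<String, Double> mine = ratings.getOrDefault(user, Map.of());
        Set<String> allItems = new TreeSet<>(); // sorted, so ties resolve deterministically
        ratings.values().forEach(r -> allItems.addAll(r.keySet()));
        String best = null;
        double bestScore = 0;
        for (String candidate : allItems) {
            if (mine.containsKey(candidate)) continue;
            double score = 0;
            for (Map.Entry<String, Double> rated : mine.entrySet()) {
                score += rated.getValue() * cosine(ratings, rated.getKey(), candidate);
            }
            if (score > bestScore) {
                best = candidate;
                bestScore = score;
            }
        }
        return Optional.ofNullable(best);
    }
}
```

This brute-force version recomputes similarities on every call; at the scale the text describes, libraries like Spark MLlib distribute the work or, as with ALS, learn compact factor vectors instead.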

With libraries like Apache OpenNLP, enterprises use Java in machine learning pipelines to process contracts, patient records, and claims at scale, extracting key entities and structuring text for downstream systems.

Enterprise SaaS products integrate Java-based ML models into microservices for lead scoring, sentiment analysis, or smart document processing. Spring AI is increasingly popular for connecting Java apps with foundation models like GPT.

AI and machine learning continue to reshape business operations. As more tasks are automated with AI, development needs to become more reliable and robust. That means choosing a tech stack that aligns with your goals and vision. Python often dominates the AI conversation, especially for experimentation, but when it comes to production-ready AI deployment, Java can be the stronger choice.

Java's resilience lies not in having the newest AI algorithms first, but in making AI accessible to enterprises. As AI technology evolves, implementing AI effectively within existing business processes gives a greater competitive advantage than having the most advanced algorithms.

Here's a checklist for decision makers on whether they should use Java for AI projects.

Java is likely the right choice if:

You need production-ready systems that integrate with existing Java infrastructure

Security, compliance, and auditability are non-negotiable requirements

You're building AI systems that must scale to enterprise levels

Your team has existing Java expertise and enterprise development processes

Long-term maintainability and vendor support matter more than cutting-edge features

Python or another stack may be a better fit if:

You're primarily doing research or experimental AI development

Rapid prototyping and algorithm experimentation are your main priorities

Your team lacks Java expertise and you're starting from scratch

Looking to build smart, scalable AI solutions in Java? Now might be the right time to hire Java developers who understand both the language’s legacy and its modern potential in AI and machine learning.

Java plays a significant role in artificial intelligence by offering a reliable, scalable foundation for building AI applications. Its strong performance, rich set of libraries like Deeplearning4j and Weka, and seamless integration with enterprise systems make Java in AI development a preferred choice for many large-scale and production-grade solutions.

The future of Java AI looks promising, especially in enterprise environments. While Python leads in research, Java continues to evolve with new frameworks like Spring AI and better support for modern AI architectures. With its strong ecosystem and growing compatibility with cloud and big data tools, Java’s presence in AI and machine learning is expected to expand further.

Java is widely used in machine learning. It powers many ML applications through libraries such as Apache Spark MLlib, Weka, and Mahout. These tools make Java a robust choice for building scalable machine learning models, especially in environments that demand performance, maintainability, and enterprise-grade security.

Java offers several advantages for AI and machine learning, including high performance, strong memory management, and excellent support for multithreading. It’s also well-suited for integrating AI into existing enterprise systems, which makes Java in machine learning projects a practical and scalable option compared to scripting languages like Python.

Java can be used to build a wide range of AI applications, including fraud detection systems, natural language processing tools, recommendation engines, predictive analytics platforms, and real-time decision-making systems. Its compatibility with big data technologies makes it ideal for large-scale, data-intensive AI solutions.
