Mobile app development is no longer something exclusive to a few elite organizations. Today, there are platforms for everyone, and with AI in the picture, apps can often be built without writing much code at all. In fact, at Meta's recent LlamaCon event, Microsoft CEO Satya Nadella said that AI already writes roughly 20–30% of the code in Microsoft's repositories. Google has made a similar claim about its own code. It's clear the tech world is entering a new phase.

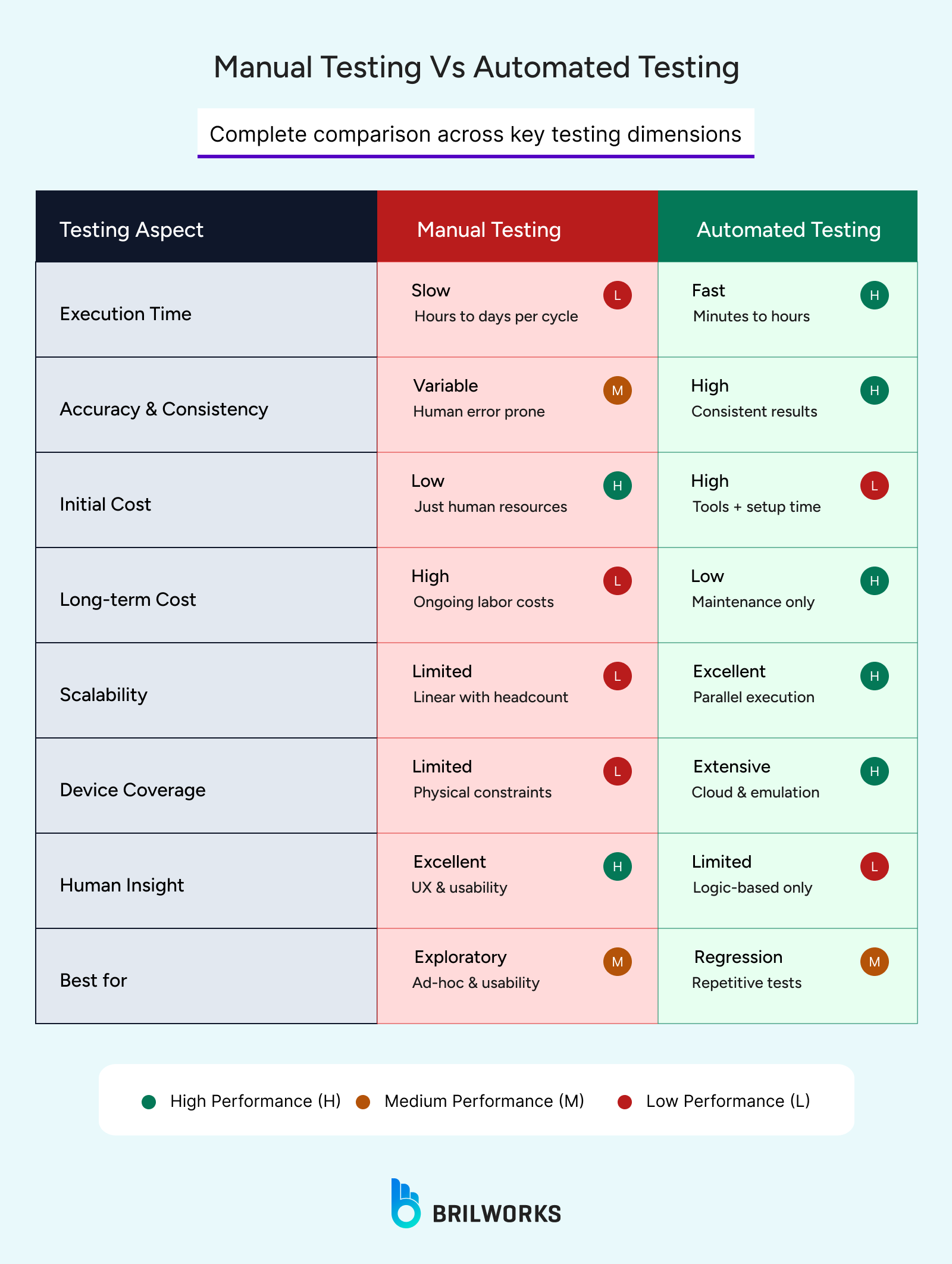

Just as AI-driven tools are reshaping mobile app development, they are reshaping testing too. For developers, it's now crucial to understand how manual and automated testing differ and which one is more effective and reliable in a given situation. Without automation, it's hard to ship regular updates on time. But relying entirely on machine-driven, rule-based checks doesn't always make sense either.

So, let's understand how these two testing methods differ and figure out which one is better suited to your needs. We'll start with the basics of mobile testing and then move on to the differences between manual and automated testing.

There are two main approaches to mobile app testing. The first is the traditional one, where real people test the app on real devices. The second relies on the many tools and platforms that have been developed over time to make testing faster and smoother. This approach is known as automated testing.

In this approach, test scripts run in virtual environments (emulators and simulators) or on real devices to cover a range of devices and conditions, completing long and complex test runs in much less time.

That said, neither method is foolproof. Manual testing can miss certain issues, while automated tools also have their limitations and may not always be effective. The reality is that human involvement is still necessary. That's why many big companies now use a blend of both manual and automated testing. This combination makes the overall process more efficient and reliable.

It's a lot like using AI tools to generate a first draft and then refining it by hand to improve the final result. This hybrid approach can significantly boost productivity.

Manual testing is carried out by people. Testers can be software developers or even end customers. They may test on emulators, simulators, or real devices. Often, paid testers simply install the app on their own devices and use its features as a regular user would.

The technical aspects, however, are checked by professional quality assurance experts to make sure security and other features work properly. Automated tools play only a small role in this process. Manual testing is often considered more reliable, but it is also time-consuming.

Manual testers check how the app behaves: how navigation works, whether buttons respond correctly to taps, and how different functions operate. End users often install the app on their own phones and give developers valuable feedback. At the same time, QA (quality assurance) experts use real devices, automation tools, simulators, emulators, or cloud-based environments to perform testing.

Even though tools and platforms are still used in this process, human testers focus mainly on analyzing behavior and reviewing code by hand. Manual testing is the traditional method, and although automated testing has become more common, manual testing remains important in certain areas. It can't be eliminated entirely, because code that passes automated tests can still fail in a live production environment.

Automated testing relies heavily on testing tools and software: QA professionals use a variety of tools and services to carry out tests. It is becoming increasingly popular for large-scale testing.

Although human involvement is still important, automation speeds up the overall process, which would otherwise be quite time-consuming and tedious.

In automation, testing is typically split into layers, each with its own tooling:

UI testing is done separately.

API testing requires specific tools.

Unit testing uses yet another set of tools.

With the right tools, automated testing can be highly effective. Many practitioners believe that mastering these tools, and choosing the right ones, can bring automated testing close to manual testing in terms of quality.

Large companies commonly use automated testing for large-scale apps, which helps them launch successful products more efficiently.

However, it's true that the initial cost of automation can be quite high compared to manual testing.

One major advantage is that automated tests can be run repeatedly, even when testers aren't available, and can continue running for long periods, which is beyond human capability.

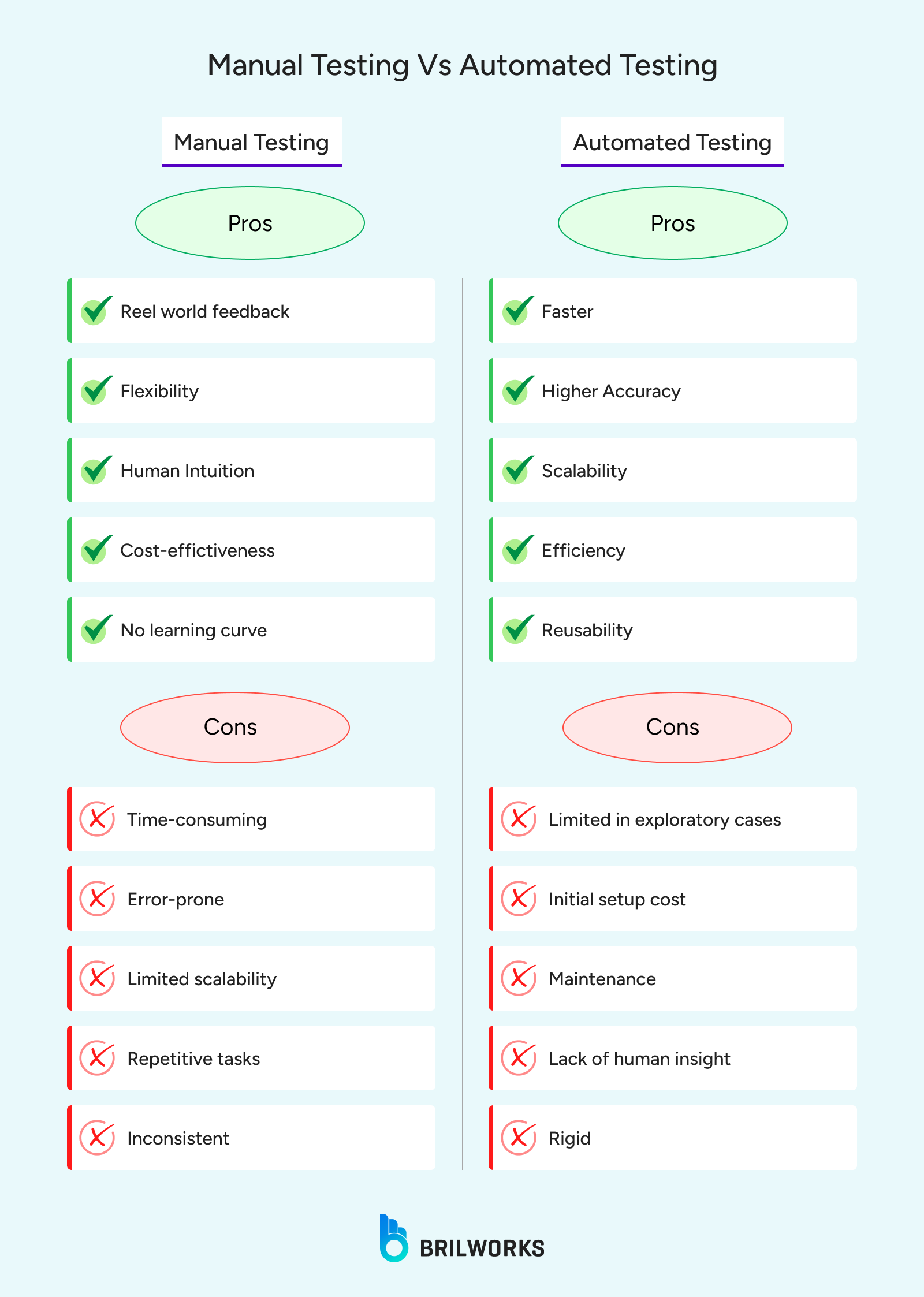

With automation taking center stage in software testing conversations, it's easy to assume manual testing is outdated. But here's the thing: many businesses still choose manual testing for valid, strategic reasons.

No matter how fast or reliable automation becomes, it lacks the curiosity, empathy, and intuition of a human tester. Manual testers notice weird UI behavior, awkward user flows, or inconsistencies that an automated script won't flag because it's not "wrong" in the code—it's just not right for a real user. That makes manual testing crucial for user experience and exploratory testing.

When product features are still shifting, test cases change frequently. Manual testing lets teams adapt on the fly without the overhead of reworking scripts. For early-stage products, rapid iterations, and discovery phases, this adaptability is invaluable.

Some apps don't have long life cycles or frequent releases. For these, investing in automation tools, scripting, and maintenance doesn't pay off. Manual testing is cost-effective and gets the job done without overengineering the QA process.

Manual testing doesn't require weeks of tool setup or specialized skills. For many QA professionals, it's a known process that can be executed quickly and effectively, especially for simple functionality or smoke tests. Sometimes, speed isn't about how fast a script runs but how fast a tester can start.

Testers doing manual QA often develop a stronger sense of product logic and edge cases. They're not just running checks—they're thinking critically about how the system behaves. This level of engagement often leads to catching nuanced bugs and providing better feedback to devs and designers.

Manual testing has earned its place. It's how many teams built their QA processes, and in the right hands, it still delivers insight no tool can. But as products scale and release cycles shrink, more companies are beginning to ask—is manual testing enough on its own?

Manual testing is thorough, yes, but it's also time-bound and effort-heavy. As your product grows, repeating the same tests with every update starts slowing things down. Automation steps in not to replace human insight but to handle what's repetitive and predictable, freeing teams up for deeper thinking.

Even the most experienced tester can miss a step. Automation ensures that the same test is run the same way every time. That kind of consistency builds confidence, especially during regression testing or continuous delivery.

The shift to automation doesn't mean abandoning manual testing. It means automating the parts that are slowing you down—things like login flows, form validations, and API calls. These are predictable, stable, and run hundreds of times. Automating them helps QA teams focus on edge cases, design issues, and overall experience.
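As a rough illustration of automating one of these predictable checks, the Python sketch below tests a sign-up form's validation rules. The `validate_signup` function, its error codes, and the rules themselves are hypothetical stand-ins for whatever your app actually enforces. The test cases live in a plain table, so new inputs can be added without touching the test logic.

```python
import re
from typing import List

# Hypothetical sign-up rules; a real app's validation will differ.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MIN_PASSWORD_LENGTH = 8


def validate_signup(email: str, password: str) -> List[str]:
    """Return a list of error codes; an empty list means the form is valid."""
    errors = []
    if not EMAIL_PATTERN.match(email):
        errors.append("invalid_email")
    if len(password) < MIN_PASSWORD_LENGTH:
        errors.append("password_too_short")
    return errors


# Test data kept apart from test logic: (email, password, expected errors).
SIGNUP_CASES = [
    ("ana@example.com", "s3cretpass", []),
    ("ana@example", "s3cretpass", ["invalid_email"]),
    ("bo b@example.com", "longenough", ["invalid_email"]),
    ("", "short", ["invalid_email", "password_too_short"]),
]


def test_signup_validation():
    # The same checks run identically on every build, which is what
    # makes them good candidates for automation.
    for email, password, expected in SIGNUP_CASES:
        assert validate_signup(email, password) == expected, (email, password)
```

Run it with pytest, or call `test_signup_validation()` directly. pytest's `@pytest.mark.parametrize` decorator would report each row as a separate test, which helps once the table grows.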

Modern tools are becoming more user-friendly. You no longer need to be a full-stack engineer to create useful test scripts. AI-powered tools can even help build and maintain tests with less effort. The barrier to entry has dropped, and the ROI shows up quickly when you're running dozens of tests daily.

Testing isn't a binary choice anymore. As products scale, timelines shrink, and expectations rise, relying entirely on either manual or automated testing starts to show cracks. A hybrid model—smartly combining both—brings structure, speed, and insight to every phase of development.

In the earliest phase, developers move fast. Automated tests like unit tests and static analysis keep the codebase stable, while manual reviews and debugging offer a sanity check on early decisions.

Automated: Unit tests (TDD/BDD), static code analysis, build verification, code coverage reports

Manual: Developer validation, code reviews, debugging, feature and edge-case validation

Why it matters: Automation catches regressions early. Manual input adds critical judgment and context that tools can't.

In the integration phase, isolated components come together. Integration and API validations ensure that systems talk to each other properly. Automation handles consistency across environments, while manual testers look at integration logic, third-party quirks, and data flows.

Automated: Integration test suites, CI/CD pipeline tests, API contract and smoke tests

Manual: API integration, database testing, third-party service validation

Why it matters: Automation scales the checks. Manual testing digs into what automation can't predict.
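An API contract check can be as small as comparing a response's fields and types against an agreed shape. The minimal Python sketch below assumes a hypothetical user-profile response; the field names and `USER_CONTRACT` are illustrative only. In practice, teams often use JSON Schema validators or consumer-driven contract tools such as Pact rather than hand-rolled checks.

```python
import json
from typing import Dict, List

# Hypothetical contract for a user-profile response: field name -> expected type.
USER_CONTRACT: Dict[str, type] = {"id": int, "name": str, "email": str, "is_active": bool}


def contract_violations(payload: dict, contract: Dict[str, type]) -> List[str]:
    """Describe every missing field or wrongly typed value in `payload`."""
    problems = []
    for field, expected in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        # Exact type check on purpose: bool is a subclass of int in Python,
        # so isinstance(True, int) would let a boolean "id" slip through.
        elif type(payload[field]) is not expected:
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(payload[field]).__name__}"
            )
    return problems


def test_user_response_matches_contract():
    # In a real suite, this body would come from the API under test.
    body = '{"id": 42, "name": "Ana", "email": "ana@example.com", "is_active": true}'
    assert contract_violations(json.loads(body), USER_CONTRACT) == []


def test_contract_flags_drift():
    body = '{"id": "42", "name": "Ana", "is_active": true}'
    assert contract_violations(json.loads(body), USER_CONTRACT) == [
        "id: expected int, got str",
        "missing field: email",
    ]
```

Because the check only reads the decoded payload, the same function can run as a fast smoke test in a CI/CD pipeline against every environment.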

Next, the focus shifts to simulating real-world usage. Automation ensures coverage across browsers, devices, and regression points, while manual testing takes over where user behavior, aesthetics, and unpredictability matter most.

Automated: E2E flows, regression, performance, cross-browser testing

Manual: UI/UX validation, exploratory testing, accessibility, ad-hoc flows

Why it matters: Automation checks if everything works. Manual testing checks if it feels right.

Once live, quality assurance doesn't end. Automated monitoring handles uptime, errors, and response times. Manual testing supports user feedback and ensures new features align with business goals.

Automated: Synthetic and health monitoring, continuous regression, performance monitoring

Manual: UAT, business process testing, production validation, beta feedback

Why it matters: Automation keeps systems healthy. Manual input ensures business value and user trust.
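Synthetic monitoring boils down to running a scripted request on a schedule and classifying the result. This Python sketch assumes a hypothetical `probe` callable that performs one request (for example, an HTTP GET against a health endpoint) and returns the status code. The latency budget and the reason strings are illustrative choices, not a standard.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class HealthResult:
    healthy: bool
    status_code: Optional[int]
    latency_ms: float
    reason: str


def run_synthetic_check(probe: Callable[[], int], max_latency_ms: float) -> HealthResult:
    """Run one scripted probe and classify it as healthy or not."""
    start = time.perf_counter()
    try:
        status = probe()
    except Exception as exc:  # connection errors, timeouts, DNS failures, etc.
        elapsed_ms = (time.perf_counter() - start) * 1000
        return HealthResult(False, None, elapsed_ms, f"error: {exc}")
    elapsed_ms = (time.perf_counter() - start) * 1000
    if not 200 <= status < 300:
        return HealthResult(False, status, elapsed_ms, "unexpected status")
    if elapsed_ms > max_latency_ms:
        return HealthResult(False, status, elapsed_ms, "too slow")
    return HealthResult(True, status, elapsed_ms, "ok")


# Example probes standing in for real requests.
def fast_ok() -> int:
    return 200


def slow_ok() -> int:
    time.sleep(0.05)  # simulate a 50 ms response
    return 200


print(run_synthetic_check(fast_ok, max_latency_ms=500))
print(run_synthetic_check(slow_ok, max_latency_ms=10))
```

A scheduler (cron, a CI job, or a monitoring service) would call this every few minutes and alert when `healthy` is false, ideally only after several consecutive failures so a single blip doesn't page anyone.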

Manual testing faces its own set of challenges. Modern software systems, such as microservices architectures or AI-driven applications, involve complex interactions and edge cases, and manual testers often struggle to maintain mental models of them.

With rapid release cycles (e.g., daily deployments in CI/CD pipelines), manual testing cannot keep pace. Testers face pressure to validate features across diverse environments (cloud, on-premises, hybrid) and configurations.

While exploratory testing is valuable for uncovering unanticipated issues, it introduces variability due to testers' differing expertise and biases. For instance, a tester's focus on UI aesthetics might overshadow critical backend logic flaws. Standardizing exploratory testing outcomes without stifling creativity remains a persistent challenge.

The proliferation of devices, operating systems, and browser versions (e.g., iOS 18, Android 15, Chrome 130) creates a combinatorial explosion of test scenarios. Manual testing struggles to cover this matrix efficiently, especially for responsive web applications or IoT ecosystems, where real-world usage patterns are hard to simulate manually.
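A quick calculation shows how fast the matrix grows. One common mitigation is pairwise (all-pairs) selection: choose configurations so that every pair of values from any two dimensions appears at least once. The Python sketch below uses a small, hypothetical matrix and a simple greedy heuristic. It is not guaranteed to find the smallest suite, and dedicated tools such as Microsoft's PICT handle this more thoroughly.

```python
from itertools import combinations, product
from typing import Dict, List, Set, Tuple

# Hypothetical test dimensions; real matrices usually have more values per axis.
MATRIX: Dict[str, List[str]] = {
    "platform": ["iOS", "Android"],
    "screen": ["small", "medium", "large", "tablet"],
    "network": ["wifi", "4g", "3g"],
    "locale": ["en", "de", "ja"],
    "orientation": ["portrait", "landscape"],
}


def all_pairs(config: Tuple[str, ...]) -> Set[tuple]:
    """Every (axis_i, value_i, axis_j, value_j) combination one configuration exercises."""
    return {
        (i, config[i], j, config[j])
        for i, j in combinations(range(len(config)), 2)
    }


def greedy_pairwise(matrix: Dict[str, List[str]]) -> List[Tuple[str, ...]]:
    """Pick configurations until every cross-axis value pair is covered."""
    candidates = list(product(*matrix.values()))
    uncovered = set().union(*(all_pairs(c) for c in candidates))
    chosen = []
    while uncovered:
        # Take the configuration that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(all_pairs(c) & uncovered))
        chosen.append(best)
        uncovered -= all_pairs(best)
    return chosen


full_matrix = list(product(*MATRIX.values()))  # 2 * 4 * 3 * 3 * 2 = 144
pairwise_suite = greedy_pairwise(MATRIX)
print(f"{len(full_matrix)} exhaustive configurations vs {len(pairwise_suite)} pairwise")
```

Pairwise coverage catches defects triggered by the interaction of two settings. It does not guarantee coverage of three-way interactions, so high-risk combinations may still deserve explicit tests.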

Manual regression testing is prone to oversight, particularly in large applications with frequent updates. Testers may skip or misinterpret test cases due to fatigue or miscommunication, leading to escaped defects. This is compounded in projects with poor test case documentation or high team turnover.

Automated testing has pain points of its own. Automated tests often fail intermittently (so-called flaky tests) because of timing issues, network latency, or dependencies on external services (e.g., APIs, cloud infrastructure). Tests for microservices, for example, may pass in a staging environment but fail in production because of subtle configuration differences, requiring sophisticated debugging and maintenance.
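A frequent cause of the intermittent, timing-related failures described above is a fixed `sleep` that is occasionally too short. A common remedy is to poll for the condition you actually need, with a deadline. The Python helper below is a generic sketch. Selenium's `WebDriverWait` and the explicit-wait utilities in other frameworks follow the same idea.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout_s: float = 5.0,
               interval_s: float = 0.1) -> None:
    """Poll `condition` until it returns True, or raise TimeoutError.

    Unlike a fixed sleep, this returns as soon as the app is ready and only
    fails after a generous deadline, removing a common source of flakiness.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        time.sleep(interval_s)


if __name__ == "__main__":
    # Simulated background job that finishes about 0.3 s from now.
    finished_at = time.monotonic() + 0.3
    wait_until(lambda: time.monotonic() >= finished_at, timeout_s=2.0)
    print("job finished; safe to assert on the result")
```

Polling does not fix flakiness caused by real environment differences, but it removes the class of failures where the test simply checked too early.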

Building robust automation frameworks (e.g., using Selenium, Cypress, or Appium) demands significant upfront effort in architecture, tooling, and training. Poorly designed frameworks lead to brittle tests that break with minor code changes, especially in applications with frequent UI updates or legacy codebases.

Automated testing excels at functional validation but struggles with non-functional aspects like usability, accessibility, or performance under real-world conditions. For instance, automating tests for WCAG 2.2 compliance or user experience on low-bandwidth networks often requires manual intervention or specialized tools, increasing complexity.

Automated tests require consistent, realistic test data across environments. Generating and maintaining such data (e.g., synthetic datasets for GDPR-compliant testing) is challenging, particularly for systems with complex data models or regulatory constraints. Data drift between test and production environments further undermines test reliability.
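One practical way to get consistent test data without copying production records is to generate it from a fixed seed, so every environment and every run sees the same fake users. The Python sketch below illustrates that idea under simple assumptions. The fields and value pools are hypothetical, and real projects often use a library such as Faker for richer data.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical value pools; none of these map to real people.
FIRST_NAMES = ["Ana", "Bo", "Chen", "Dara", "Eli", "Femi"]
COUNTRIES = ["DE", "FR", "IN", "JP", "US"]


@dataclass(frozen=True)
class SyntheticUser:
    user_id: int
    name: str
    email: str
    country: str
    age: int


def make_users(count: int, seed: int = 1234) -> List[SyntheticUser]:
    """Generate fake users; on a given Python version, the same seed yields the same users."""
    rng = random.Random(seed)  # local generator, independent of global random state
    users = []
    for user_id in range(1, count + 1):
        name = rng.choice(FIRST_NAMES)
        users.append(SyntheticUser(
            user_id=user_id,
            name=name,
            # example.com is reserved for documentation (RFC 2606), so no real inbox is used
            email=f"{name.lower()}.{user_id}@example.com",
            country=rng.choice(COUNTRIES),
            age=rng.randint(18, 90),
        ))
    return users


for user in make_users(3):
    print(user)
```

Committing the seed alongside the tests means a failing run can be reproduced exactly, which helps when chasing data drift between environments.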

Teams may prioritize automation for high-coverage areas, neglecting edge cases or exploratory scenarios that require human intuition. For example, automated tests for a payment gateway might verify happy paths but miss subtle fraud-related behaviors that a manual tester could identify through contextual analysis.

Manual and automated testing aren't rivals; they're partners. A hybrid approach delivers faster releases without sacrificing quality, pairing the precision of automation with the context only humans can provide. If you're building apps that need to scale and perform under real-world conditions, it's essential to hire mobile app developers who can integrate both seamlessly into your workflow.

To keep automated tests maintainable, focus on creating modular test frameworks with page object models and on data-driven testing approaches that separate test logic from test data. Both make scripts more resilient to UI changes.
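To make both ideas concrete, here is a minimal Python sketch of a page object driven by a data table. `FakeDriver` is a hypothetical in-memory stand-in for a real UI driver such as Appium or Selenium, whose actual APIs differ; the locators and credentials are made up as well. What matters is the structure: tests talk only to `LoginPage`, so if a locator changes, only that class needs editing.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDriver:
    """Hypothetical stand-in for a real UI driver; not Appium's or Selenium's API."""
    fields: dict = field(default_factory=dict)
    screen: str = "login"

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def tap(self, locator: str) -> None:
        # Simulated app behavior: login succeeds only for one known account.
        if locator == "login_button":
            ok = (self.fields.get("username_input") == "demo"
                  and self.fields.get("password_input") == "demo-pass")
            self.screen = "home" if ok else "login_error"


class LoginPage:
    """Page object: the only place that knows the login screen's locators."""
    USERNAME = "username_input"
    PASSWORD = "password_input"
    SUBMIT = "login_button"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username: str, password: str) -> str:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.tap(self.SUBMIT)
        return self.driver.screen


# Test data, separate from test logic: (username, password, expected screen).
LOGIN_CASES = [
    ("demo", "demo-pass", "home"),
    ("demo", "wrong", "login_error"),
    ("", "", "login_error"),
]


def test_login_cases():
    for username, password, expected in LOGIN_CASES:
        page = LoginPage(FakeDriver())  # fresh session for each case
        assert page.log_in(username, password) == expected, username
```

Swapping `FakeDriver` for a real driver adapter would leave `LOGIN_CASES` and `test_login_cases` unchanged, which is the resilience the page object pattern aims for.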

Effective mobile test automation requires programming knowledge (typically Java, JavaScript, or Python), understanding of testing frameworks, familiarity with mobile platforms, and knowledge of CI/CD integration practices.

AI-powered testing tools can identify optimal test cases for automation, help maintain scripts through self-healing mechanisms, and augment manual testing by identifying visual inconsistencies that traditional automated tests might miss.

Get In Touch

Contact us for your software development requirements