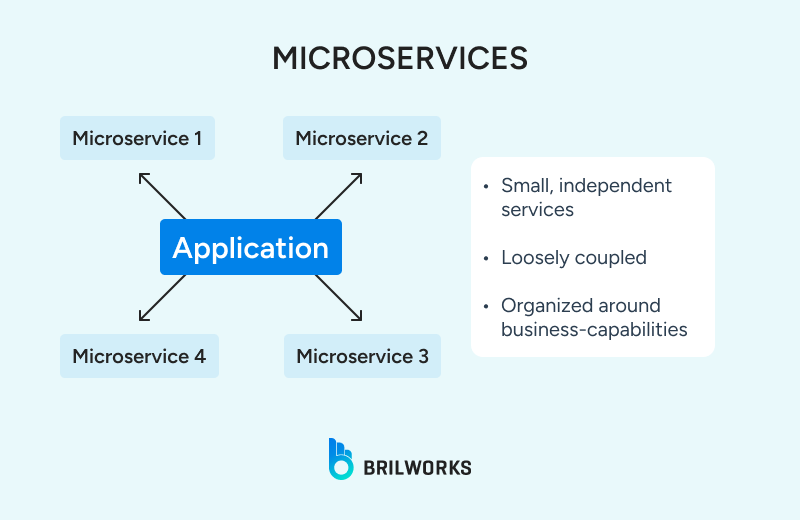

Microservices-based architecture is one of the most popular architectural styles used in modern application development. The application is broken down into small and independent services, and each service can be scaled individually, providing flexibility in resource allocation and deployment.

Solo.io's survey reveals that around 85% of companies plan to adopt microservices-based architectures as part of their modernization strategy.

Flexibility is the major benefit microservices provide. Individual services can be built with legacy-friendly technologies such as Java, while modern options such as Node.js are a popular choice for lightweight applications.

Microservices-based architecture heavily relies on communication between different services, such as API calls, data interaction, and I/O operations. Node.js naturally fits into this setup with a non-blocking I/O model and an event-driven architecture.

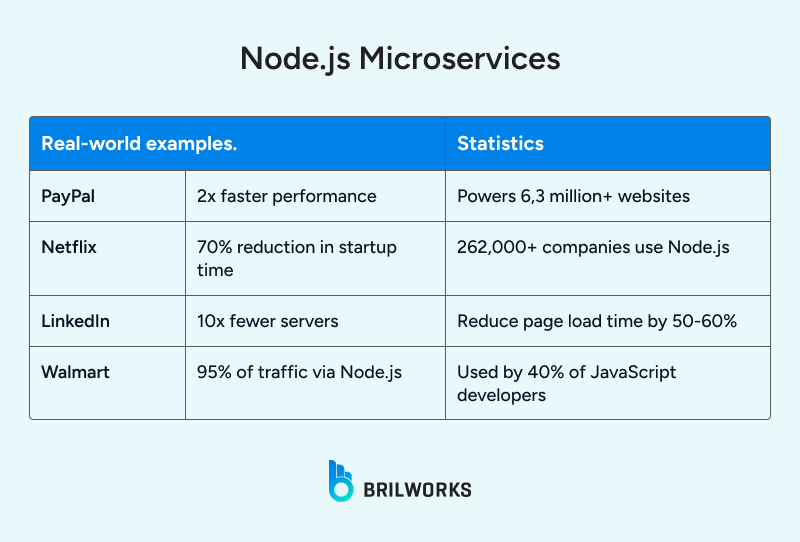

Well-known companies like PayPal, Netflix, and LinkedIn have used Node.js to enhance the performance of their platforms. Is it the fastest option? No. Go will typically outperform it in raw benchmarks, and Java is still a favorite for enterprise-grade applications. But Node.js gives you velocity. You can ship faster and iterate more often, and in a microservices development environment, speed often matters more than perfection.

This perspective is also backed by the survey conducted by Solo.io, which highlights that around 56% of organizations with at least half of their applications on a microservices architecture report more frequent release cycles.

You have decided to break down your monolithic architecture into separate, independent services. That's great, but those services don't scale automatically. You still need to ensure the microservices themselves are set up to scale correctly.

It may sound paradoxical, but the very services meant to improve scalability can hinder it if they are not set up in a standardized way. Let's look at what makes Node.js microservices scalable.

Each microservice should be responsible for one specific business capability. In practice, that means a Node.js service for user authentication shouldn't also handle product inventory. Keeping responsibilities isolated makes services easier to reason about, test, and evolve without unintended side effects.

Microservices should be independently deployable and scalable. In a typical Node.js microservices setup, you should be able to update or scale the payment service without touching the cart or user services.

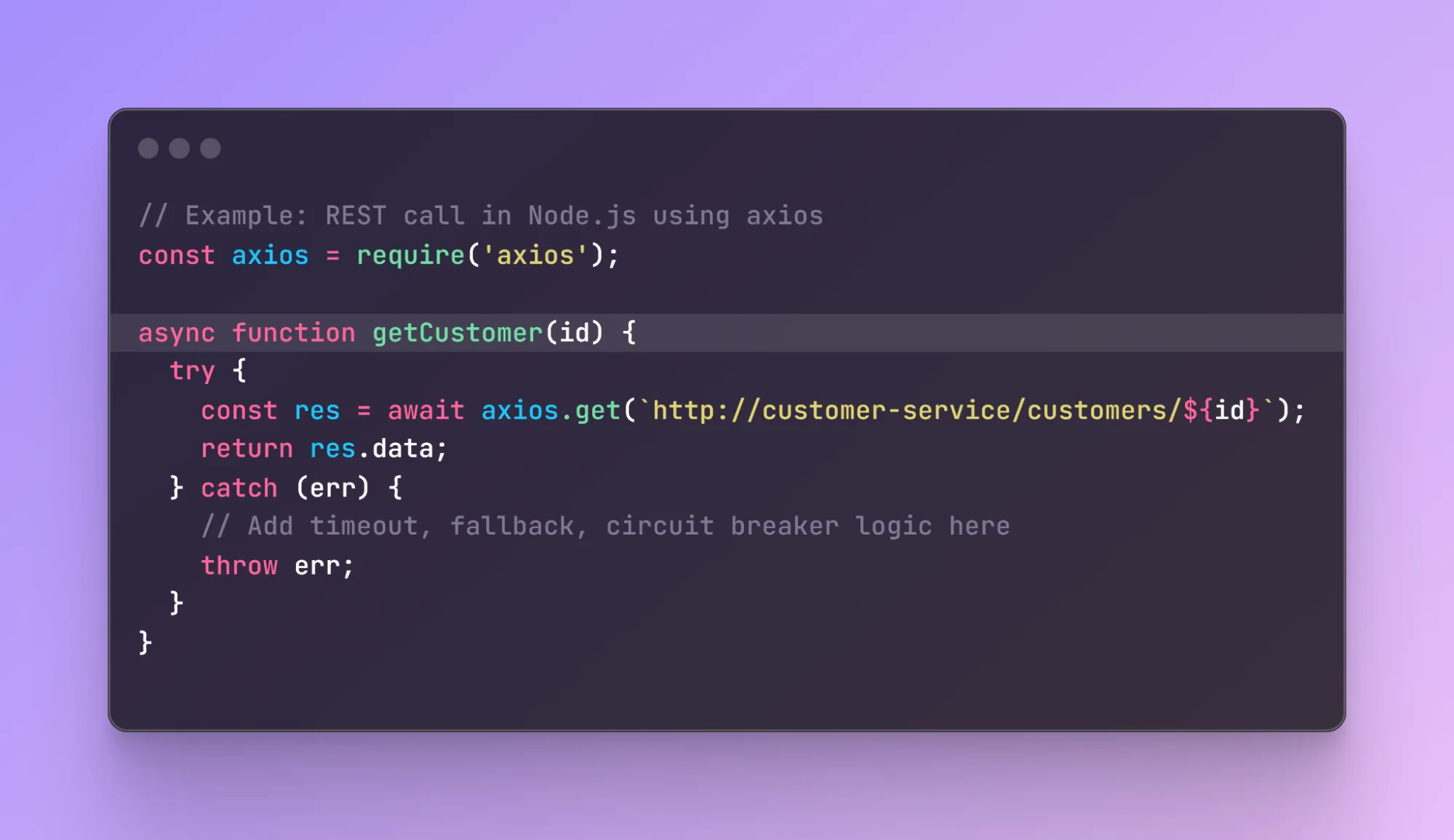

Distributed systems fail; it's a matter of when, not if. Microservices built with Node.js should incorporate resilience patterns like retries, circuit breakers, and graceful fallbacks. Node's asynchronous nature helps with non-blocking operations, but developers still need to handle edge cases where dependencies are slow or unresponsive.
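As a rough illustration of one of these resilience patterns, here is a minimal circuit breaker sketch. The class name, option names, and thresholds are assumptions made for this example, not part of any particular library; production systems usually rely on a maintained package rather than hand-rolled code.

```javascript
// Minimal circuit breaker sketch. All names and defaults are illustrative.
class CircuitBreaker {
  constructor(action, { failureThreshold = 3, resetTimeoutMs = 10000, now = Date.now } = {}) {
    this.action = action;               // async function that calls the dependency
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.now = now;                     // injectable clock, handy for tests
    this.failures = 0;
    this.openedAt = null;               // null means the circuit is closed
  }

  get state() {
    if (this.openedAt === null) return 'closed';
    return this.now() - this.openedAt >= this.resetTimeoutMs ? 'half-open' : 'open';
  }

  async call(...args) {
    if (this.state === 'open') {
      // Fail fast instead of piling more requests onto a struggling dependency.
      throw new Error('circuit open: call rejected without contacting the dependency');
    }
    try {
      const result = await this.action(...args);
      this.failures = 0;
      this.openedAt = null;             // a success closes the circuit again
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial call in half-open state, or too many failures, (re)opens the circuit.
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}

// Graceful fallback: return a default value when the call fails or is rejected.
async function withFallback(breaker, fallbackValue, ...args) {
  try {
    return await breaker.call(...args);
  } catch {
    return fallbackValue;
  }
}
```

Wrapping a call to a slow dependency in `withFallback(breaker, cachedValue)` lets the caller keep responding with degraded data while the dependency recovers.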

Services rarely work in isolation, so communication patterns play a central role. Synchronous calls (such as REST or gRPC) are common in microservices Node.js applications, but they can also lead to tight coupling. Therefore, it is advisable to use asynchronous messaging for better handling of traffic spikes.

You can't build microservices at scale with Node.js alone. You will need the right frameworks, libraries, and runtime tools. Making the right picks early can save hours of refactoring later.

So, how do you decide what to use? Here's a brief comparison of the most common Node.js frameworks, covering their strengths and the use cases they fit best.

Express is still the default for many teams. It is simple and unopinionated and doesn't impose much structure.

When we use it

Fastify, as the name indicates, is known for its impressive performance. It provides a schema-based approach that helps with both validation and speed.

Best for

Inspired by Angular, NestJS is becoming popular among large development teams for building complex services. It is opinionated in a way that's helpful for onboarding and testing.

Best for

Koa was created by the same people behind Express, but it aims to be more minimal. It uses async/await natively and doesn't bundle middleware, giving you full transparency over request handling.

Best for

Inspired by Ruby on Rails, Sails.js emphasizes convention over configuration and ships with an ORM (Waterline), auto-generated REST routes, and WebSocket support.

When to use it:

If you're setting up your system from scratch, it's worth reviewing these Node.js architecture best practices to avoid foundational issues down the line.

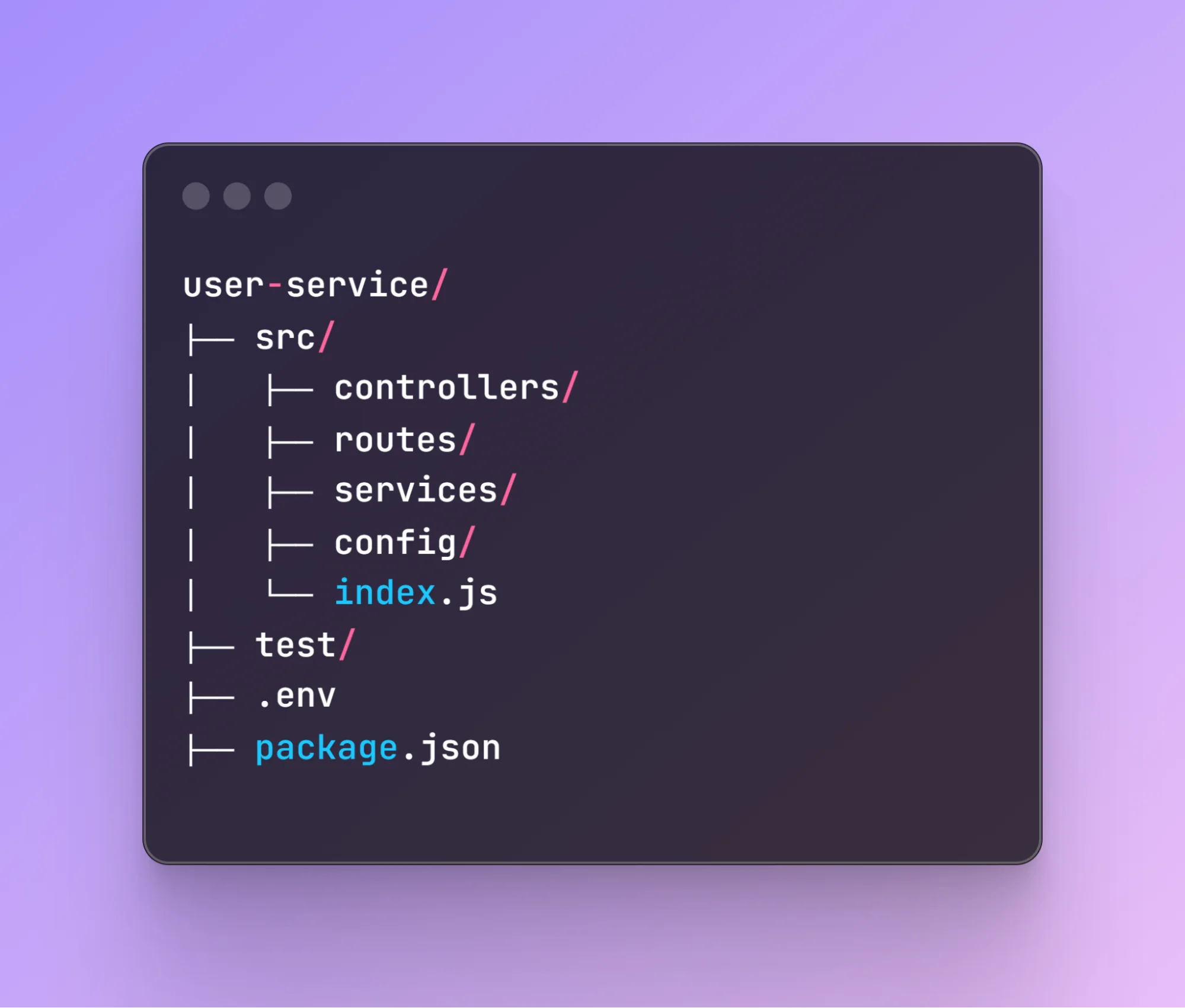

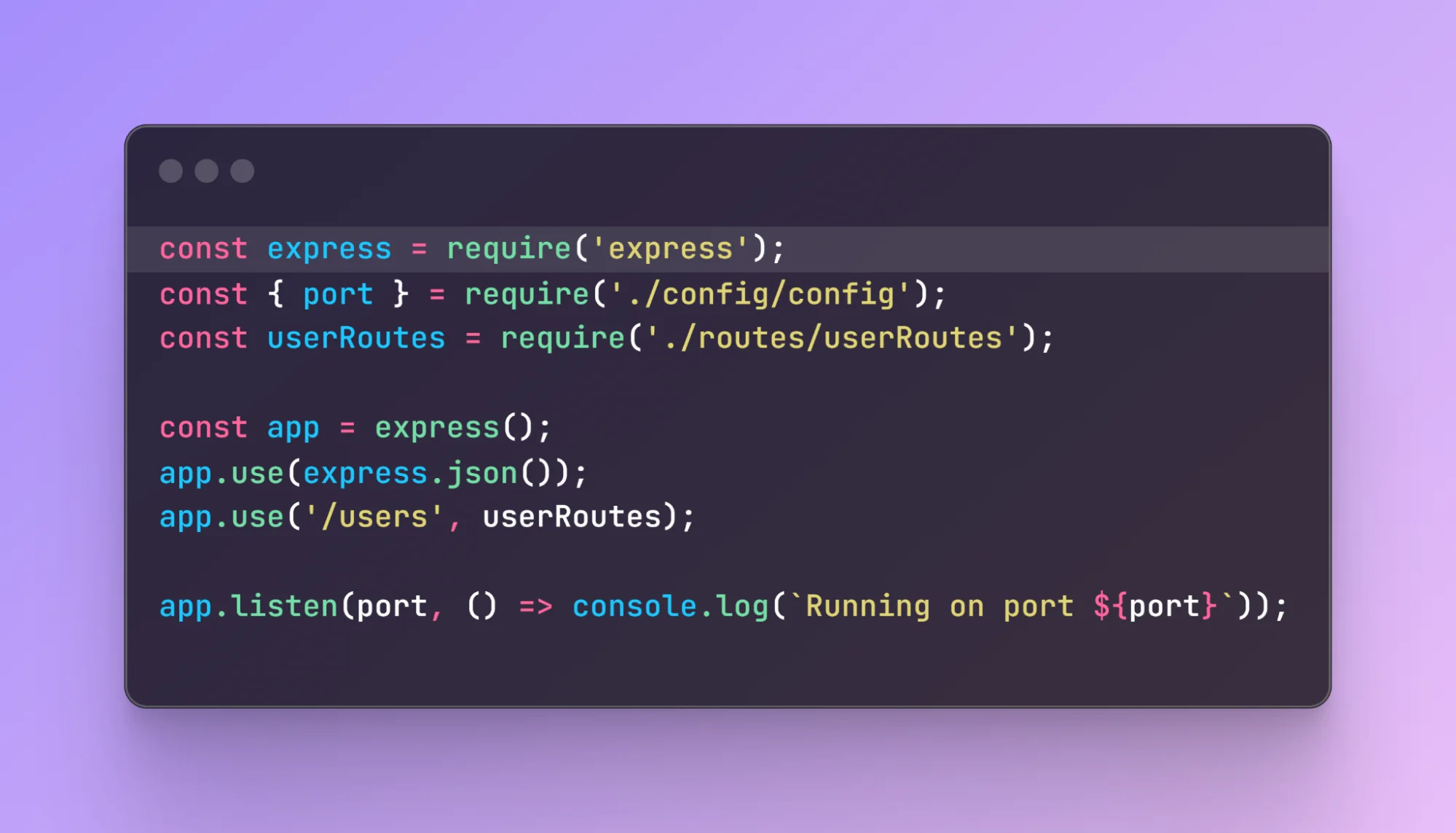

If you're building a microservice in Node.js, start with a simple structure.

Let's say we're building a user-service. Begin with the basics:

And a simple package.json might include:

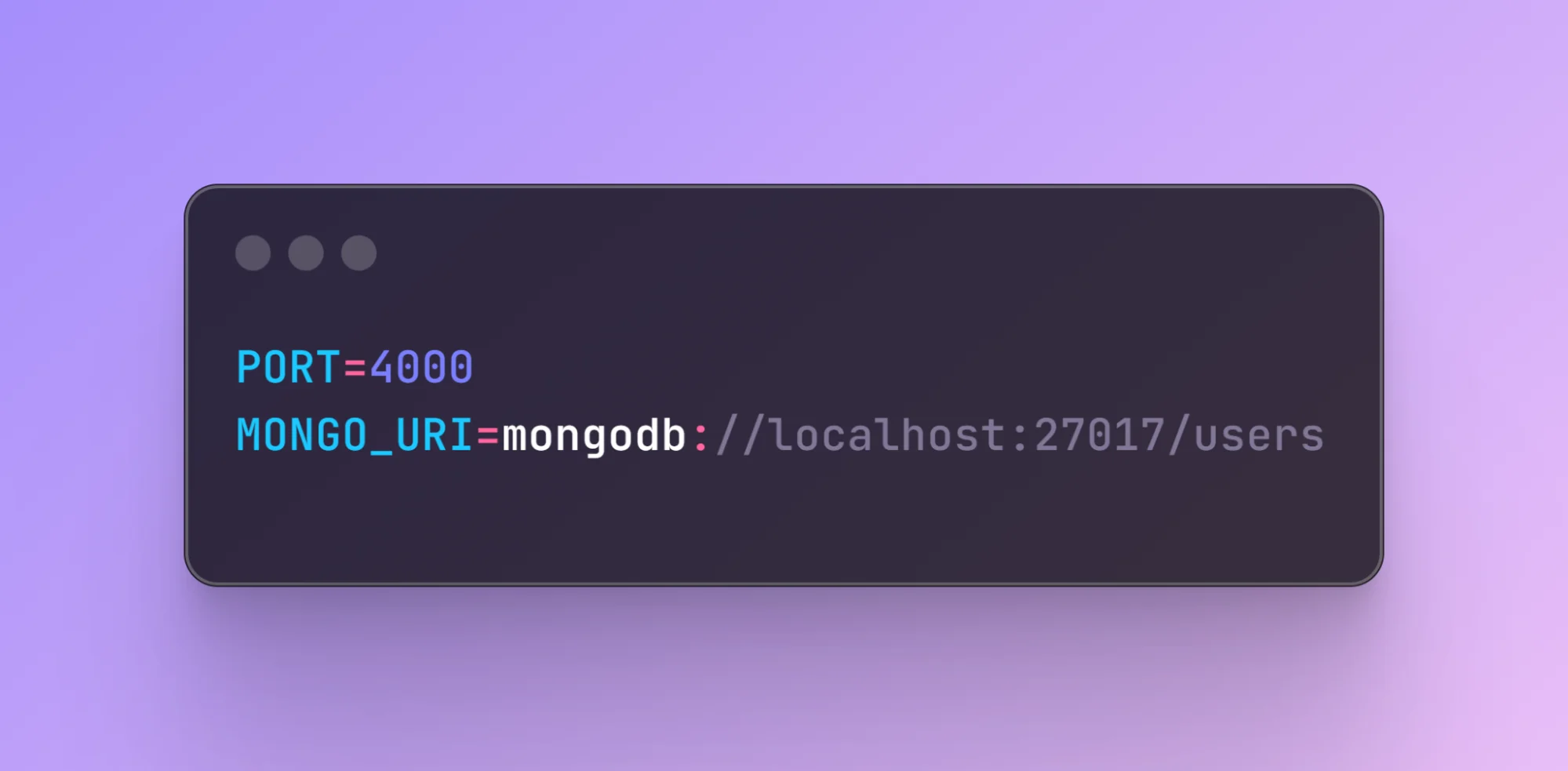

Use .env files to manage configs across dev, test, and prod. Example:

Then load that in your app with:

Now, your config values stay out of version control and can vary per environment.
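Once the variables are available in `process.env` (for example via the `dotenv` package or Node's `--env-file` flag), it can help to validate them in one place. The sketch below assumes three hypothetical variable names, `PORT`, `NODE_ENV`, and `DB_URL`; adapt them to whatever your service actually needs.

```javascript
// Build a validated, read-only config object from environment variables.
// PORT, NODE_ENV and DB_URL are assumed names used only for illustration.
function loadConfig(env = process.env) {
  const port = Number.parseInt(env.PORT ?? '3000', 10);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT: ${env.PORT}`);
  }
  if (!env.DB_URL) {
    // Fail at startup rather than at the first database call.
    throw new Error('Missing required environment variable: DB_URL');
  }
  return Object.freeze({
    port,
    nodeEnv: env.NODE_ENV ?? 'development',
    dbUrl: env.DB_URL,
  });
}
```

Calling `loadConfig()` once at startup means a misconfigured environment stops the service immediately instead of surfacing as a confusing runtime error later.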

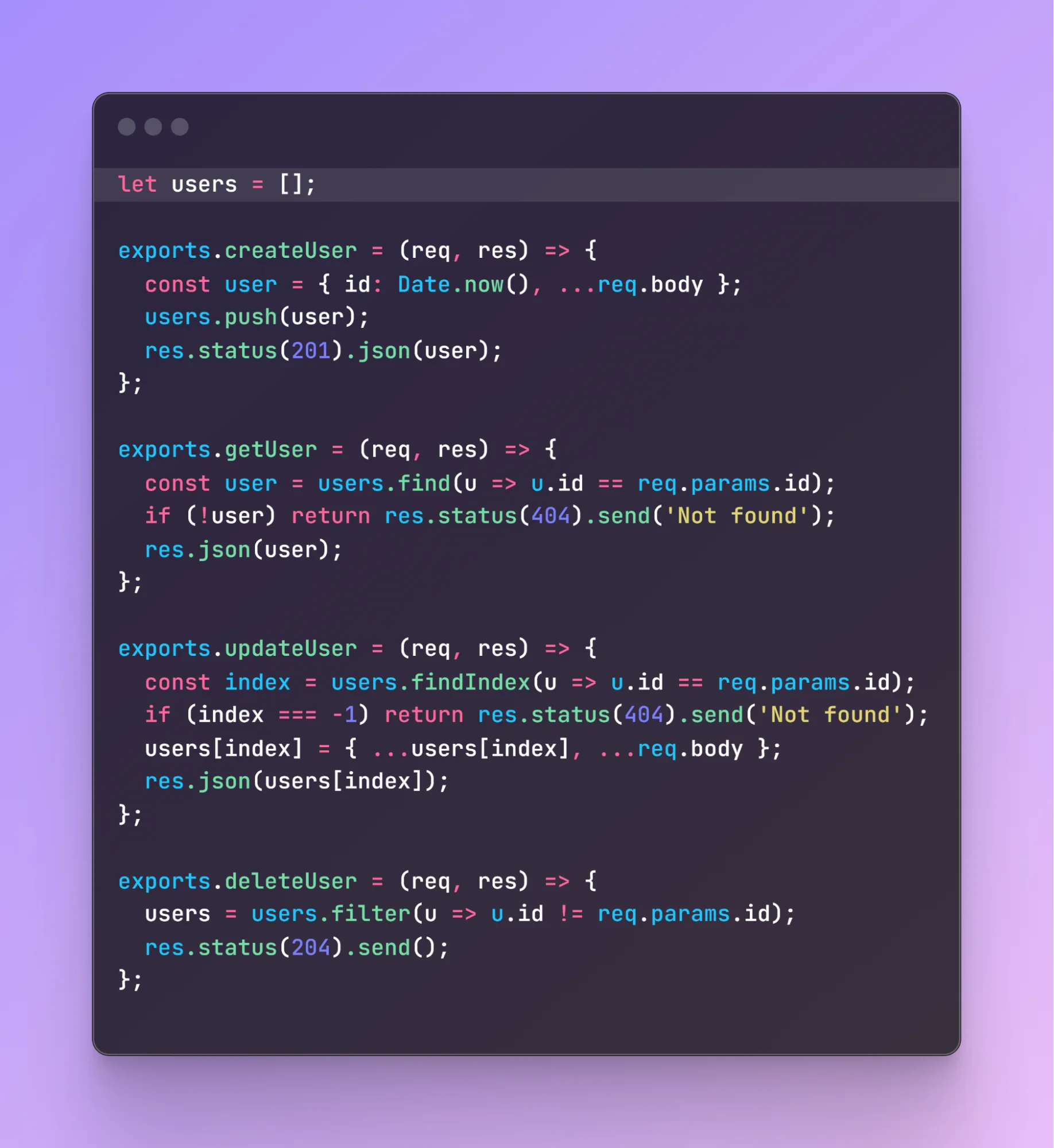

Here's a simple in-memory example to handle user data.
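A minimal sketch of such an in-memory store might look like the following. The class name, the `name` and `email` fields, and the method names are assumptions for illustration; a real service would replace the `Map` with a database client.

```javascript
const { randomUUID } = require('node:crypto');

// In-memory user store. Data is lost when the process exits,
// so this is only suitable for demos and tests.
class InMemoryUserStore {
  constructor() {
    this.users = new Map();
  }

  create({ name, email }) {
    if (!name || !email) throw new Error('name and email are required');
    const user = { id: randomUUID(), name, email };
    this.users.set(user.id, user);
    return user;
  }

  findById(id) {
    return this.users.get(id) ?? null;
  }

  list() {
    return [...this.users.values()];
  }

  remove(id) {
    return this.users.delete(id); // true if a user was removed
  }
}
```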

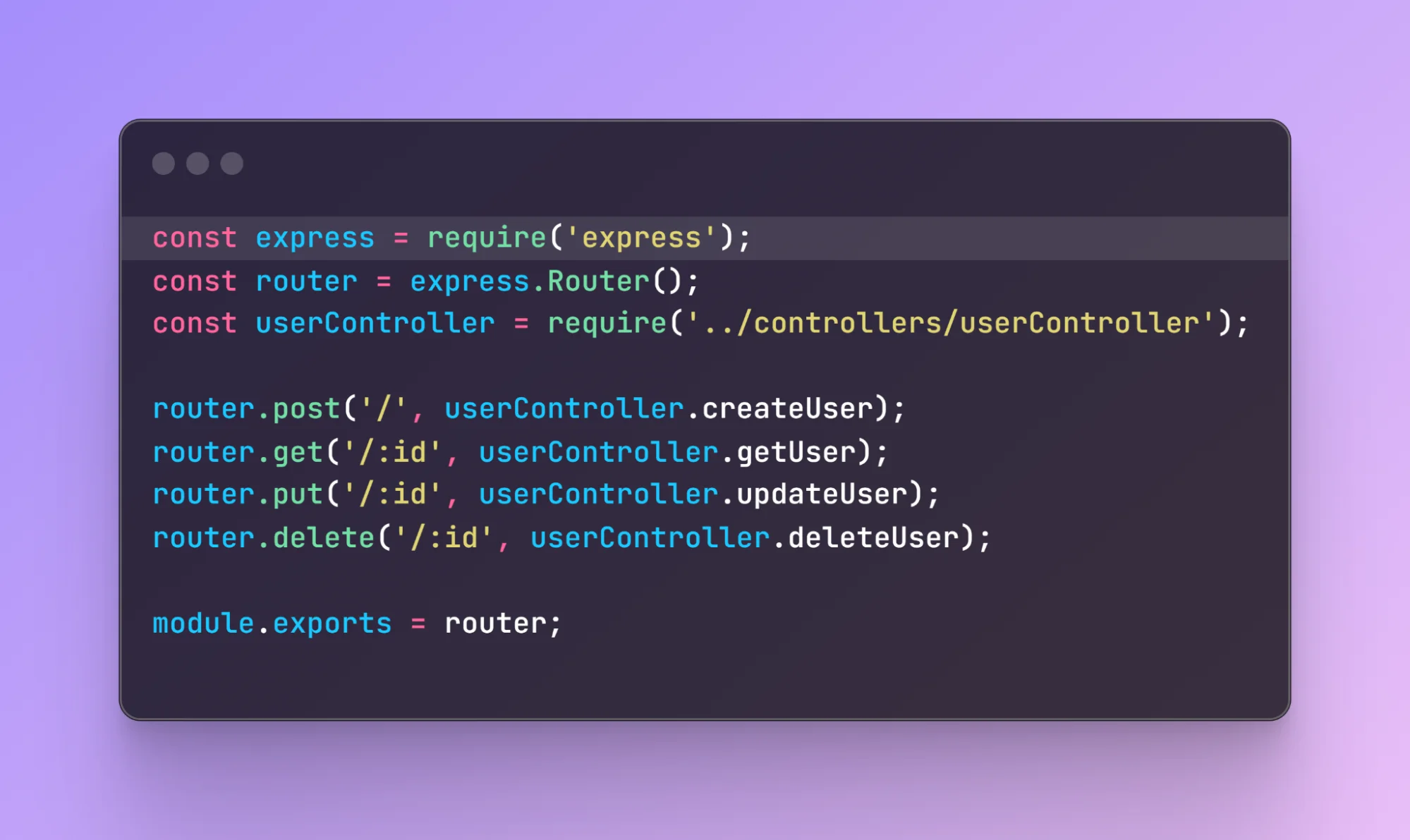

src/routes/userRoutes.js:

src/controllers/userController.js:
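As a hedged sketch, a controller for this service could be written as a small factory that receives its data store. It assumes Express-style `req` and `res` objects (`req.params`, `req.body`, `res.status().json()`) and a hypothetical store exposing `findById` and `create`; none of these names come from a specific codebase.

```javascript
// Controller factory: the store is injected so the handlers stay easy to test.
function createUserController(store) {
  return {
    getUser(req, res) {
      const user = store.findById(req.params.id);
      if (!user) {
        return res.status(404).json({ error: 'User not found' });
      }
      return res.status(200).json(user);
    },

    createUser(req, res) {
      const { name, email } = req.body ?? {};
      if (!name || !email) {
        return res.status(400).json({ error: 'name and email are required' });
      }
      return res.status(201).json(store.create({ name, email }));
    },
  };
}
```

With Express, these handlers would typically be wired up in the routes file, for example `router.get('/users/:id', controller.getUser)`.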

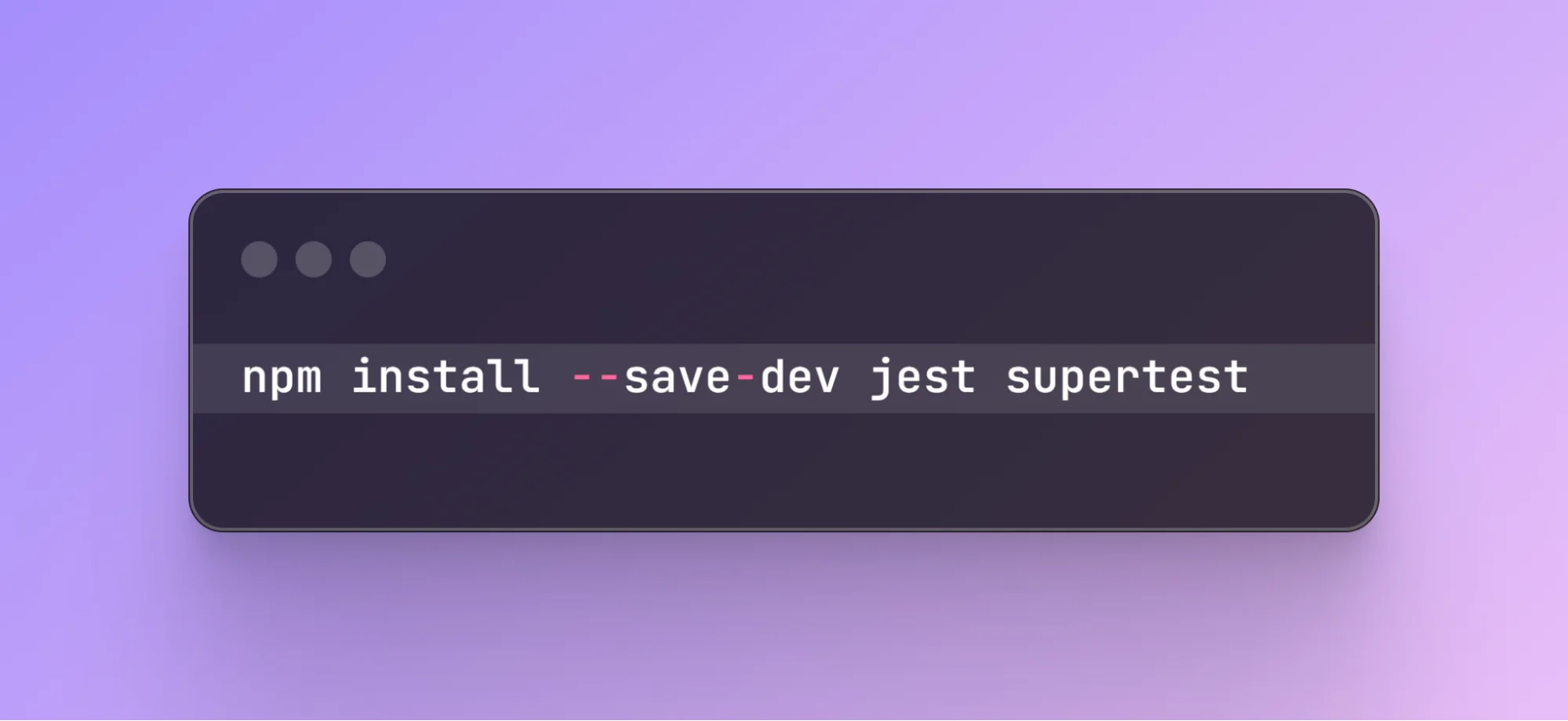

Set up test dependencies:

Write a simple test in test/user.test.js:

Run tests with:

Code coverage tools for Node.js can also help you maintain high-quality code by showing which paths your tests never exercise.

In a microservices architecture, distributing services also means distributing data ownership. Services have to coordinate across the system while each owns its data independently, which is challenging. Here's how you can approach it.

The idea of "a database per service" isn't new, but it's what keeps microservices from becoming just distributed monoliths. Each service owns its data. That's the boundary.

In practice, this means the orders-service writes to its own Postgres instance. The payments-service might use DynamoDB or even a key-value store like Redis for low-latency access. Why? Because the shape and speed of data access differ between teams, and that's the whole point.

At Shopify, they adopted this pattern early on to avoid the classic trap: a central database with too many hands. It helped them scale teams, not just systems.

But the tradeoff is obvious. You lose the ability to make joins across services. So, instead, teams expose just enough through APIs or event streams to make others aware of relevant data changes. It's slower, yes, but safer. And safer wins at scale.

There's a moment in every microservices setup where a business flow touches multiple services. Order created. Payment charged. Inventory updated. You could try to coordinate it all with distributed locks and two-phase commits. Most teams don't.

Instead, they use Saga patterns, which break the work into steps. Each step completes locally, and either triggers the next or triggers a rollback.

Take an e-commerce flow:
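One way to picture such a flow is an orchestrated saga built from the three steps mentioned earlier: create the order, charge the payment, update the inventory. The sketch below is a simplified, in-process illustration; the step names, the compensation names, and the `failAt` switch are hypothetical, and in a real system each step would call a different service.

```javascript
// Runs steps in order. If one fails, the compensations of the steps that
// already completed are run in reverse order.
async function runSaga(steps, context) {
  const completed = [];
  for (const step of steps) {
    try {
      await step.execute(context);
      completed.push(step);
    } catch (err) {
      for (const done of completed.reverse()) {
        await done.compensate(context);
      }
      return { status: 'rolled_back', failedStep: step.name, reason: err.message };
    }
  }
  return { status: 'completed' };
}

// Hypothetical e-commerce steps. `log` records what happened and
// `failAt` simulates a failure in the named step.
function orderSteps(log, failAt = null) {
  const step = (name, undo) => ({
    name,
    async execute() {
      if (name === failAt) throw new Error(`${name} failed`);
      log.push(name);
    },
    async compensate() {
      log.push(undo);
    },
  });
  return [
    step('createOrder', 'cancelOrder'),
    step('chargePayment', 'refundPayment'),
    step('updateInventory', 'restoreInventory'),
  ];
}
```

If `updateInventory` fails, the payment is refunded and the order cancelled, in that order, so no service is left holding a half-finished transaction.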

Once services are isolated and doing their jobs, the next layer is how they talk to each other. Some need fast, direct responses. Others just want to send a signal and move on.

REST APIs are often the first thing teams reach for, and for good reason. They're familiar, flexible, and easy to debug. If order-service needs to fetch customer data, a simple GET /customers/:id does the job.

The benefit is predictability. You call a service, you get a response. That makes sense when services need to validate something immediately, such as authentication checks, inventory lookups, or pricing rules.

But that tight loop also means coupling. If user-service is down, any service depending on it might hang unless you've built in timeouts and fallbacks. It's fast, but brittle under pressure.
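A timeout plus a fallback can be sketched in a few lines. This example assumes Node.js 18 or later for the global `fetch`; the `http://user-service` hostname, the fallback shape, and the `fetchImpl` option (which exists only to make the function testable) are illustrative assumptions.

```javascript
// Abort the request if the upstream service takes longer than timeoutMs.
async function fetchWithTimeout(url, { timeoutMs = 2000, fetchImpl = fetch } = {}) {
  const controller = new AbortController();
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    const res = await fetchImpl(url, { signal: controller.signal });
    if (!res.ok) throw new Error(`Upstream responded with ${res.status}`);
    return await res.json();
  } finally {
    clearTimeout(timer); // always clear the timer so it cannot keep the process alive
  }
}

// Falls back to a degraded placeholder when user-service is slow or down.
// A real service would also log the error before swallowing it.
async function getCustomer(id, options = {}) {
  try {
    return await fetchWithTimeout(`http://user-service/customers/${id}`, options);
  } catch {
    return { id, name: 'Unknown customer', degraded: true };
  }
}
```

The caller gets an answer within a bounded time either way, which keeps one unresponsive dependency from stalling every request that touches it.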

If your services don't need to wait for each other, messaging makes the system more fault-tolerant. Instead of calling another service directly, a message gets published to a queue. The receiving service can handle it on its own time. That's the core of event-driven architecture. Tools like RabbitMQ and Kafka let you decouple services.

A typical pattern might look like this:

This pattern reduces dependencies, but you give up immediacy. There's no guarantee about when (or even if) something gets processed unless you add delivery guarantees (like the outbox pattern) and retries.
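The publish-and-move-on flow can be illustrated with an in-process stand-in for a broker. This sketch uses Node's built-in `EventEmitter` purely to show the shape of the interaction; it is not a replacement for RabbitMQ or Kafka, offers no persistence or delivery guarantees, and the `order.created` topic name is an assumption.

```javascript
const { EventEmitter } = require('node:events');

// In-process stand-in for a message broker such as RabbitMQ or Kafka.
class InMemoryBroker {
  constructor() {
    this.emitter = new EventEmitter();
  }

  // Delivery is deferred so the publisher never waits for consumers.
  publish(topic, message) {
    setImmediate(() => this.emitter.emit(topic, message));
  }

  subscribe(topic, handler) {
    this.emitter.on(topic, handler);
  }
}

// Hypothetical wiring: a notification consumer reacts to orders it never calls directly.
function wireNotifications(broker, sentEmails) {
  broker.subscribe('order.created', (order) => {
    sentEmails.push(`Order ${order.id} confirmed for ${order.email}`);
  });
}
```

The publisher returns as soon as `publish` is called; the consumer runs later, which is exactly the loss of immediacy described above.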

Here's how they compare at a high level:

| Feature | REST (Sync) | Messaging (Async) |
|---|---|---|
| Speed | Immediate | Eventual |
| Coupling | Tight | Loose |
| Failure handling | Needs retries/timeouts | Built-in retry logic |
| Observability | Easier with tracing | Needs external tools |
| Use cases | Auth, lookups, writes | Notifications, workflows, billing |

In real systems, you'll likely use both. REST where you need quick answers. Messaging where you want flexibility and scale.

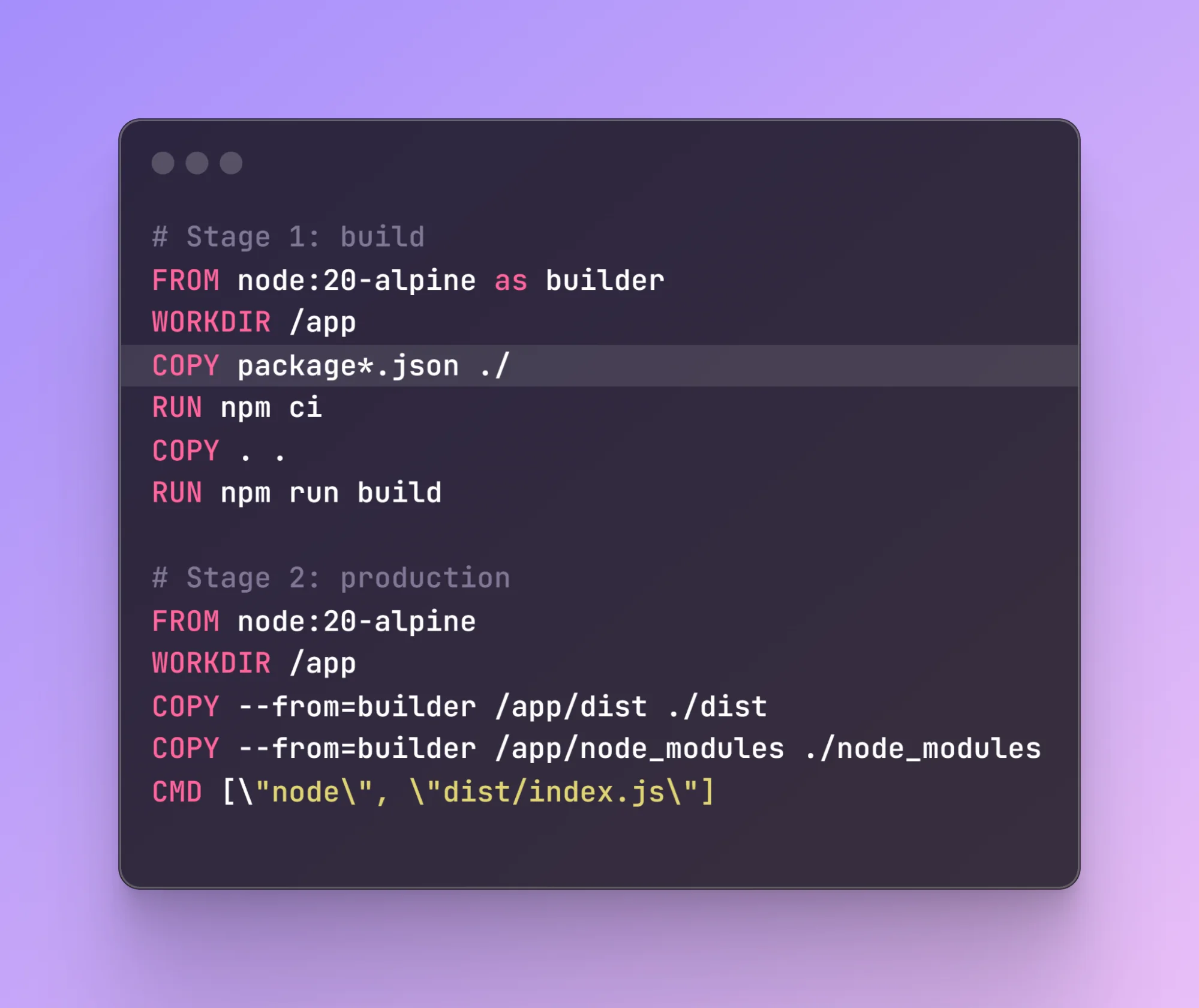

Writing services is one thing; getting them to run reliably in production is another. Once the services are ready, you need a way to package them, deploy them, and keep them running. Containers and orchestration tools handle exactly that.

Containerization gives you consistency. A service that runs locally should behave the same in staging, production, or CI. Docker makes this practical by wrapping your code, dependencies, and environment into one self-contained unit.

You might also include environment variables or health checks, depending on how your service is exposed. The important part is this: each Node.js microservice should be deployable independently. Docker helps make that separation real.
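On the application side, a health check is usually just a lightweight HTTP endpoint that a container runtime or orchestrator can poll. The sketch below uses only Node's built-in `http` module; the `/healthz` path and the response fields are assumed conventions, not requirements.

```javascript
const http = require('node:http');

// Responds on GET /healthz so a container runtime or orchestrator can probe the service.
function healthHandler(req, res) {
  if (req.method === 'GET' && req.url === '/healthz') {
    res.writeHead(200, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify({ status: 'ok', uptimeSeconds: Math.round(process.uptime()) }));
    return;
  }
  res.writeHead(404, { 'Content-Type': 'application/json' });
  res.end(JSON.stringify({ error: 'Not found' }));
}

// The caller decides when and where to listen, e.g. createServer().listen(3000).
function createServer() {
  return http.createServer(healthHandler);
}
```

A deeper check might also verify database or broker connectivity, at the cost of making the probe slower and more likely to flap.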

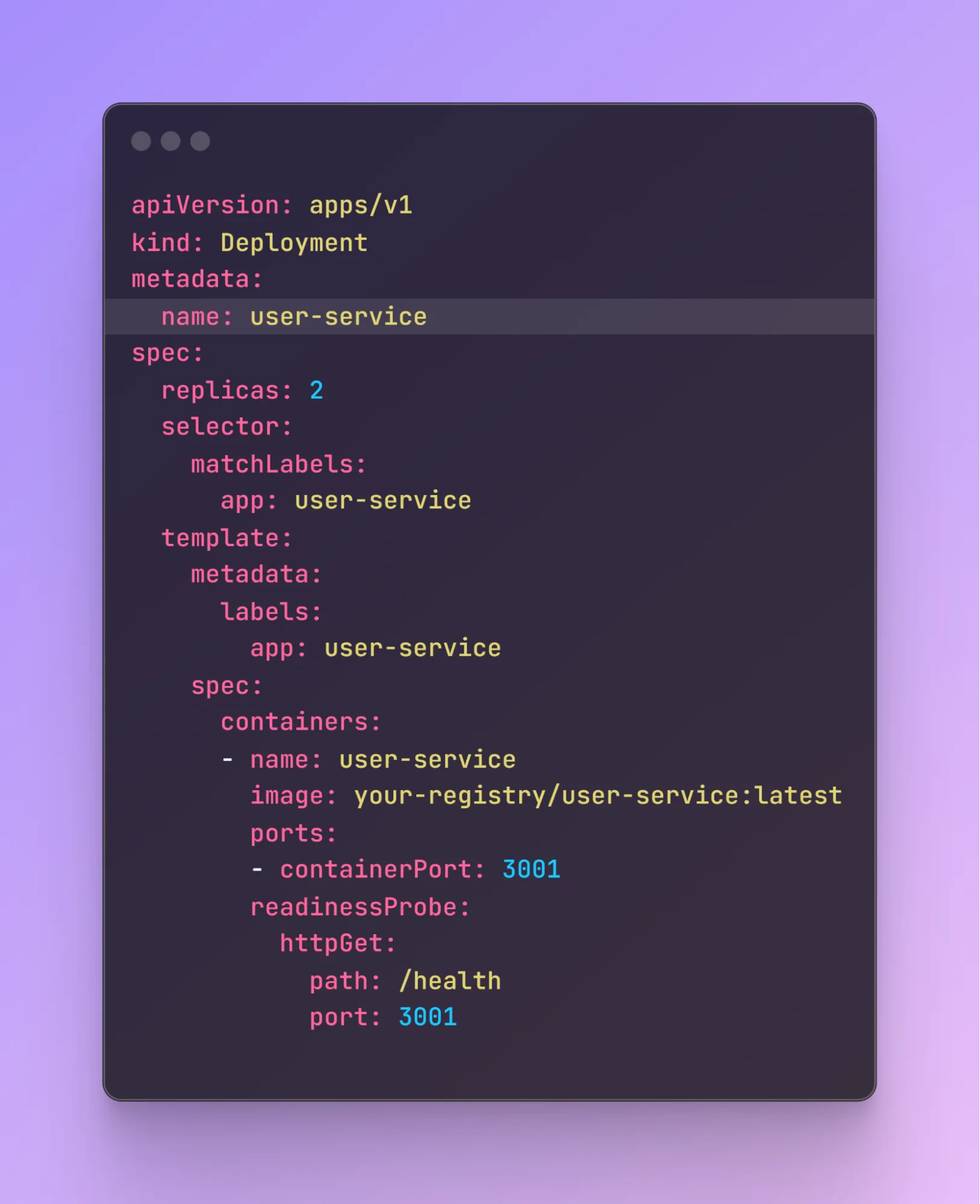

Once services are containerized, orchestration handles the rest: scaling, recovery, and discovery. Kubernetes (K8s) is the go-to option for most teams running microservices in production. With Node.js microservices, the same principles apply: one container per service, each described with a deployment spec. Here's a simple example for a user-service:

Kubernetes gives you rolling updates, autoscaling, and service discovery out of the box. And when something crashes, it restarts it for you.

Most setups include a CI/CD pipeline that builds your Docker images, pushes them to a registry, and triggers K8s deployments.

Some teams layer in tools like Helm (for templating configs), Argo CD (for GitOps-style rollouts), or Skaffold (for local K8s dev). But those are optimizations. At its core, deploying Node.js microservices is about owning how each one gets built, run, and recovered. Containers make that repeatable. Kubernetes makes it scalable.

Once Node.js microservices are live and serving traffic, the attention shifts from "does it work" to "how do we know when it breaks, and who's trying to break it?"

Distributed systems generate logs from every corner. If those logs live only inside the container, they're lost when the container is restarted or replaced, which is often exactly when you need them.

Best practices for logging:
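As one illustration, a common approach is to write structured JSON lines to stdout, so a log collector outside the container can pick them up, and to tag each line with a correlation ID that follows a request across services. The sketch below is a minimal hand-rolled version; the field names and the `x-correlation-id` header are assumed conventions, and most teams use an established logging library instead.

```javascript
const { randomUUID } = require('node:crypto');

// Creates a logger that emits one JSON object per line.
// `write` defaults to stdout and can be swapped out in tests.
function createLogger(service, write = (line) => process.stdout.write(line + '\n')) {
  const log = (level, message, fields = {}) => {
    write(JSON.stringify({
      timestamp: new Date().toISOString(),
      level,
      service,
      message,
      ...fields,
    }));
  };
  return {
    info: (message, fields) => log('info', message, fields),
    error: (message, fields) => log('error', message, fields),
  };
}

// Reuse the caller's correlation ID if present, otherwise start a new one.
function correlationIdFrom(headers = {}) {
  return headers['x-correlation-id'] ?? randomUUID();
}
```

Passing the same correlation ID to downstream calls lets you search the aggregated logs for a single request, regardless of which service produced each line.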

In a Node.js microservice setup, authentication and authorization often happen via JWTs passed between services or through OAuth providers.

Here's how JWT might work in practice:
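To make the mechanics concrete, the sketch below signs and verifies an HS256 token using only Node's built-in `crypto` module. It assumes a shared secret between services and is meant to show what happens under the hood; the function names and the five-minute default lifetime are illustrative, and production code would normally use a maintained library such as `jsonwebtoken` or `jose`.

```javascript
const { createHmac, timingSafeEqual } = require('node:crypto');

const b64url = (value) => Buffer.from(JSON.stringify(value)).toString('base64url');

function hmac(data, secret) {
  return createHmac('sha256', secret).update(data).digest('base64url');
}

// Issues a short-lived token: header.payload.signature
function signToken(payload, secret, ttlSeconds = 300, nowSeconds = Math.floor(Date.now() / 1000)) {
  const header = b64url({ alg: 'HS256', typ: 'JWT' });
  const body = b64url({ ...payload, iat: nowSeconds, exp: nowSeconds + ttlSeconds });
  return `${header}.${body}.${hmac(`${header}.${body}`, secret)}`;
}

// Verifies the signature and expiry, then returns the claims.
function verifyToken(token, secret, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = String(token).split('.');
  if (parts.length !== 3) throw new Error('Malformed token');
  const [header, body, signature] = parts;
  const expected = Buffer.from(hmac(`${header}.${body}`, secret));
  const actual = Buffer.from(signature);
  // Constant-time comparison avoids leaking signature bytes through timing.
  if (expected.length !== actual.length || !timingSafeEqual(expected, actual)) {
    throw new Error('Invalid signature');
  }
  const claims = JSON.parse(Buffer.from(body, 'base64url').toString('utf8'));
  if (claims.exp <= nowSeconds) throw new Error('Token expired');
  return claims;
}
```

A gateway or auth service would call `signToken` after login, and each downstream service would call `verifyToken` on the incoming `Authorization` header before handling the request.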

If you're using OAuth, tools like Auth0 or AWS Cognito can simplify this process. For internal services, mutual TLS or signed service tokens are also common in enterprise setups.

Many teams running microservices with Node.js choose AWS because of its flexibility and vast ecosystem. There are multiple ways to deploy services, depending on the trade-offs:

Teams also lean on tools like CloudWatch, X-Ray, and Datadog to monitor their services.

As systems grow, workflows stretch across services and teams. Direct HTTP calls don't scale well for that. Instead, teams shift to event-driven coordination using CQRS (Command Query Responsibility Segregation) and Event Sourcing patterns.

With CQRS, services separate reads from writes. One handles commands like "place order," another exposes read-optimized views like "get order history." This improves performance and isolates responsibilities.
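The split can be sketched in-process as two small classes: one that accepts commands and one that maintains a read-optimized view. The class and method names are assumptions for illustration, and the direct callback between them stands in for what would normally be an event stream or message broker.

```javascript
// Write side: validates and applies commands, then notifies listeners of the change.
class OrderCommands {
  constructor(onChange) {
    this.orders = new Map();
    this.onChange = onChange;
  }

  placeOrder({ orderId, customerId, total }) {
    if (this.orders.has(orderId)) throw new Error(`Order ${orderId} already exists`);
    const order = { orderId, customerId, total, status: 'placed' };
    this.orders.set(orderId, order);
    this.onChange(order);
  }
}

// Read side: keeps a view shaped for the "get order history" query.
class OrderHistoryView {
  constructor() {
    this.byCustomer = new Map();
  }

  apply(order) {
    const history = this.byCustomer.get(order.customerId) ?? [];
    history.push({ orderId: order.orderId, total: order.total });
    this.byCustomer.set(order.customerId, history);
  }

  getOrderHistory(customerId) {
    return this.byCustomer.get(customerId) ?? [];
  }
}
```

Because the view is updated from change notifications rather than queried from the write model, each side can be stored, indexed, and scaled independently.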

Event Sourcing takes it further: state changes aren't stored directly, but as a sequence of events:
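A small sketch shows the idea: the current state of an order is never stored, only derived by replaying its events. The event types (`OrderPlaced`, `PaymentCaptured`, `OrderShipped`) and their fields are hypothetical names chosen for this example.

```javascript
// Pure function: given the previous state and one event, return the next state.
function applyEvent(state, event) {
  switch (event.type) {
    case 'OrderPlaced':
      return { id: event.orderId, status: 'placed', items: event.items, total: event.total };
    case 'PaymentCaptured':
      return { ...state, status: 'paid' };
    case 'OrderShipped':
      return { ...state, status: 'shipped', trackingId: event.trackingId };
    default:
      return state; // unknown events are ignored
  }
}

// Rebuild the current state by folding over the full event history.
function replay(events) {
  return events.reduce((state, event) => applyEvent(state, event), null);
}
```

Replaying only a prefix of the history yields the state at that point in time, which is what makes event-sourced systems useful for auditing and debugging.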

Microservices bring clarity and scale, but only when the foundations are solid. With Node.js, teams get the speed and modularity needed to move quickly, but that flexibility comes with architectural trade-offs. From data boundaries to deployment, the decisions made early shape long-term performance and maintainability.

If you're building or scaling microservices with Node.js and want to avoid common pitfalls, the right expertise makes a difference. Whether it's designing communication flows, securing services, or setting up CI/CD pipelines, working with developers who've done it before matters.

Looking to hire Node.js developers with hands-on microservices experience? We've helped engineering teams go from scattered services to clean, scalable systems. Let's connect and explore how we can assist you.

Deploying Node.js microservices in a multi-cloud setup involves running redundant instances across cloud vendors (e.g., AWS, GCP, Azure) behind a global load balancer like Cloudflare or AWS Global Accelerator. Use service discovery, replicated databases, and automated failover to ensure traffic is rerouted instantly during provider outages.

Tools like Jaeger, Zipkin, and OpenTelemetry help trace requests across services by capturing latency data, request IDs, and context propagation. These tools provide visual trace maps and help pinpoint bottlenecks or failures in complex, distributed Node.js environments.

Node.js excels in I/O-bound microservices due to its non-blocking event loop, allowing it to handle many concurrent requests with fewer resources than thread-based models like Java or Python. However, it’s less suited for CPU-bound workloads compared to languages like Go or Rust.

A common pattern is the “you build it, you run it” model, where small cross-functional teams own services end-to-end—design, development, deployment, and monitoring. This approach promotes accountability, faster iteration, and clearer service boundaries.

Start by identifying self-contained modules (e.g., auth, payments) and extracting them behind API gateways while keeping the monolith operational. Over time, shift traffic, isolate databases, and retire monolith code incrementally, testing each service independently as it’s introduced.

Get In Touch

Contact us for your software development requirements
