
Serverless computing is quickly moving from a specialized cloud feature to a major tech trend for 2025. If you work in technology, you’ve probably heard the term.

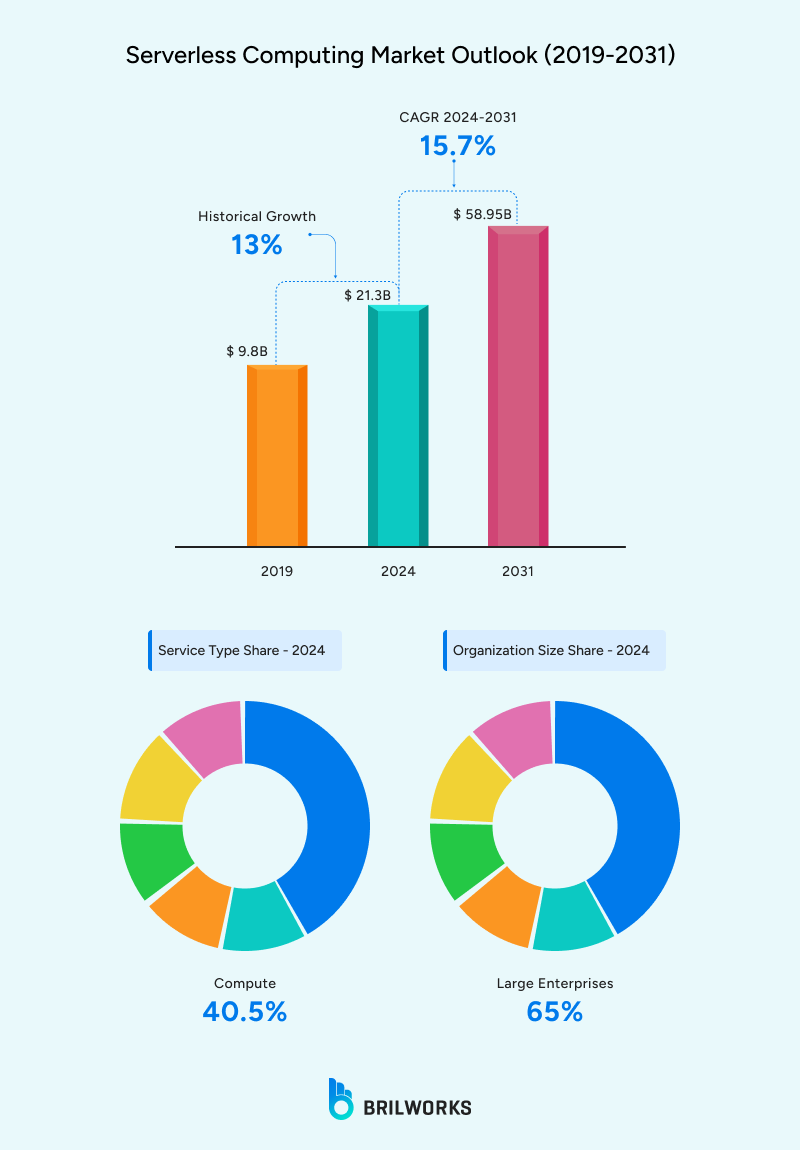

Don't just take our word for it. The data speaks for itself. Google Trends shows that interest in "serverless" is steadily rising, and analysts expect the serverless market to grow by about 22.5% each year, from a few billion dollars today to around USD 34.5 billion by 2031.

The main driver behind the surge in serverless adoption is the industry’s move toward cloud-native technologies. As businesses move away from on-premises data centers and adopt the public cloud, they want greater efficiency. The journey has gone from physical servers to virtual machines, then to containers, and now to serverless.

Industries such as finance, healthcare, and e-commerce are adopting serverless computing. Their goal is simple: greater flexibility. This growth is further driven by advances in cloud technology, supportive government policies, and a digital transformation that is reaching every part of the economy.

It’s not only large companies like Netflix or Coca-Cola making the move. Many small and medium-sized businesses are switching to serverless, too. Serverless allows them to build and run scalable applications without the stress of managing infrastructure.

In this article, we’ll explore why agile development teams are choosing serverless computing in 2025 and how it fundamentally changes their entire development process for the better.

Let's clear this up first. The name "serverless" is a bit of a misnomer. Of course, there are still servers. They're just not your servers. You don't see them, you don't manage them, and you definitely don't have to patch them.

Serverless is a cloud computing model where you write your application code as individual functions. You upload these functions to a cloud service like AWS Lambda, Google Cloud Functions, or Azure Functions, and they simply... wait. They run automatically, only when they are needed, in response to specific events.

Functions are triggered on demand. An "event" can be anything: a user uploading a photo, an API request from a mobile app, a new row being added to a database, or even just a scheduled time (like running a report every Friday at 5 PM).
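To illustrate the event-driven idea, here is a minimal sketch of a Python function in the AWS Lambda handler style (`lambda_handler(event, context)`) that recognizes which kind of trigger invoked it. The fields it checks (`Records[].eventSource` of `aws:s3` or `aws:dynamodb`, `source`/`detail-type` for EventBridge schedules, `httpMethod` for API Gateway REST proxy requests) reflect common AWS event shapes, but the routing logic and return values are hypothetical, and real payloads carry many more fields.

```python
from typing import Any


def classify_event(event: dict[str, Any]) -> str:
    """Guess which kind of trigger produced an AWS-style event payload."""
    records = event.get("Records") or []
    source = records[0].get("eventSource") if records else None
    if source == "aws:s3":
        return "file_upload"        # e.g. a user uploaded a photo
    if source == "aws:dynamodb":
        return "database_change"    # e.g. a new row arrived via a DynamoDB stream
    if event.get("source") == "aws.events" and event.get("detail-type") == "Scheduled Event":
        return "schedule"           # e.g. "every Friday at 5 PM"
    if "httpMethod" in event:
        return "api_request"        # API Gateway REST proxy request
    return "unknown"


def lambda_handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Entry point the platform calls once per event; it never runs otherwise."""
    kind = classify_event(event)
    # Hypothetical reaction: a real function would resize the image,
    # build the report, or answer the API call here.
    return {"handled": kind}


if __name__ == "__main__":
    upload = {"Records": [{"eventSource": "aws:s3", "s3": {"object": {"key": "cat.jpg"}}}]}
    print(lambda_handler(upload))  # {'handled': 'file_upload'}
```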

The cloud provider handles everything else. All the infrastructure, scaling, and maintenance are managed by AWS, Google, or Microsoft. If one person triggers your function, it runs once. If 10,000 people trigger it in the same second, the provider spins up as many copies as needed and runs them in parallel, up to your account's concurrency limit (which can usually be raised on request).
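How many copies actually run at once depends on how long each request takes, not just how many arrive. A standard back-of-the-envelope estimate (Little's law) is concurrency = requests per second × average duration. The sketch below applies it; the 1,000-instance limit in the usage lines is a hypothetical example, so check your own account's quota.

```python
import math


def estimate_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Little's law: instances busy at once = arrival rate x time each request takes."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def fits_limit(requests_per_second: float, avg_duration_ms: float, concurrency_limit: int) -> bool:
    """True if steady-state demand stays within the account's concurrency limit."""
    return estimate_concurrency(requests_per_second, avg_duration_ms) <= concurrency_limit


if __name__ == "__main__":
    # 10,000 requests per second, each taking 200 ms of execution time
    print(estimate_concurrency(10_000, 200))   # 2000
    # 1,000 is a hypothetical limit here; check your own account's quota
    print(fits_limit(10_000, 200, 1_000))      # False
```

Shorter functions need fewer simultaneous instances for the same traffic, which is one more reason to keep each function small and fast.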

You pay only for actual execution time. This is the most crucial part. You are billed per request plus execution time, in increments as small as a single millisecond. If your code isn't running, your compute cost is zero.
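The billing model boils down to simple arithmetic: a small charge per request plus a charge per GB-second (memory in GB × execution time in seconds). Here is a minimal estimator; the default prices are assumptions that roughly match AWS Lambda's published x86 on-demand rates in us-east-1 at the time of writing, and the free tier and other services (storage, data transfer) are ignored.

```python
def monthly_cost_usd(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float = 0.20,   # assumption: check current pricing
    price_per_gb_second: float = 0.0000166667,  # assumption: check current pricing
) -> float:
    """Estimate pay-per-use compute cost; free tier and other services are ignored."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    compute_charge = gb_seconds * price_per_gb_second
    return request_charge + compute_charge


if __name__ == "__main__":
    # 3 million requests a month, 120 ms each, 512 MB of memory
    print(round(monthly_cost_usd(3_000_000, 120, 512), 2))  # 3.6
    # No requests means no compute bill
    print(monthly_cost_usd(0, 120, 512))  # 0.0
```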

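Each function runs independently. Every invocation runs in an isolated, secure execution environment that does its single job. The provider may keep that environment warm for the next request, and it shuts the environment down once it sits idle.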

This model is a profound shift from traditional hosting, where you rent a virtual server (like an EC2 instance or a DigitalOcean Droplet) and pay for it 24/7/365, whether anyone is using your application or not.

To understand why teams are switching, you need to see the "before and after." The traditional model involves capacity planning, a stressful guessing game about how much server power you'll need for the next month or year.

Imagine the traditional workflow:

Provision: You rent a server. You pick its CPU, RAM, and storage.

Configure: You SSH in, install the operating system (like Linux), update security patches, install a web server (like Nginx), and set up your database.

Deploy: You upload your code.

Manage: You monitor it 24/7. You set up alerts. If traffic spikes, you either manually log in to upgrade the server (vertical scaling) or have complex "auto-scaling groups" that slowly add more servers (horizontal scaling), which can take several minutes.

Pay: You pay for that server (or servers) every single hour, even at 3 AM when no one is online.

Now, here's the serverless workflow:

Write: You write a single function (e.g., processPayment(paymentInfo)).

Upload: You upload that 100-line code file.

Done.

That's it. When a payment comes in, the function runs. The rest of the time, it costs you nothing.
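To make the "write one function" step concrete, here is a minimal sketch of a `processPayment`-style function in Python for an HTTP trigger, assuming an API Gateway proxy-style event whose `body` is a JSON string. The field names (`amount_cents`, `currency`) and the `charge_card` stub are hypothetical; a real function would call a payment provider's SDK.

```python
import json
from typing import Any


def charge_card(amount_cents: int, currency: str) -> str:
    """Hypothetical stand-in for a payment provider call; returns a fake charge ID."""
    return f"ch_test_{amount_cents}_{currency.lower()}"


def process_payment(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Handle one payment request; the platform runs this only when a request arrives."""
    try:
        payment = json.loads(event.get("body") or "{}")
        amount = int(payment["amount_cents"])
        currency = str(payment["currency"])
    except (ValueError, KeyError, TypeError):
        return {"statusCode": 400, "body": json.dumps({"error": "invalid payment data"})}

    if amount <= 0:
        return {"statusCode": 400, "body": json.dumps({"error": "amount must be positive"})}

    charge_id = charge_card(amount, currency)
    return {"statusCode": 200, "body": json.dumps({"charge_id": charge_id})}


if __name__ == "__main__":
    request = {"body": json.dumps({"amount_cents": 1999, "currency": "USD"})}
    print(process_payment(request))
```

Everything outside this function (servers, TLS, scaling, patching) is the provider's job, which is why the deployable unit can stay this small.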

Here’s a head-to-head comparison:

| Aspect | Serverless | Traditional hosting |
|---|---|---|
| Scaling | Automatic, instant (from 0 to 1M+ requests) | Manual or slow (minutes to boot new servers) |
| Cost | Pay-per-execution (billed per millisecond) | Fixed monthly/hourly fees (pay for idle) |
| Maintenance | Zero (provider-managed) | Continuous OS updates, patches, security |
| Deployment | Near-instant (upload a zip file or code) | Slow (days or weeks for full CI/CD) |
| Idle costs | None | Pay 24/7 regardless of traffic |

Serverless isn't a magic bullet for 100% of workloads. Traditional servers (or their modern containerized equivalent, orchestrated with Kubernetes) are still the right choice for a few specific scenarios:

Long-running processes: Most serverless functions have a maximum timeout (e.g., 15 minutes on AWS Lambda). If you have a job that needs to crunch data for 8 hours straight, a dedicated server is a better fit.

Applications needing sub-10ms response times: Serverless can sometimes suffer from a "cold start." If a function hasn't been used in a while, it's "asleep." The first request has to "wake it up" (load the code, start the container), which can add a few hundred milliseconds of latency. For high-frequency trading or real-time gaming, this is unacceptable. (Note: Providers are aggressively solving this with features like "Provisioned Concurrency.")
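Besides provisioned concurrency, a commonly recommended way to soften cold starts is to do expensive setup once, at module load, rather than inside the handler. Only the first request in a fresh environment pays that cost; warm requests reuse the result. The sketch assumes a runtime that reuses warm environments (as AWS Lambda does); `load_model` and its 0.2-second delay are hypothetical placeholders for work such as opening database connections or loading an ML model.

```python
import time
from typing import Any

INIT_CALLS = 0  # counts how often the expensive setup actually ran


def load_model() -> dict[str, Any]:
    """Hypothetical expensive setup (e.g. loading a model or opening connections)."""
    global INIT_CALLS
    INIT_CALLS += 1
    time.sleep(0.2)  # simulated setup cost paid during a cold start
    return {"ready": True}


# Runs once per execution environment (at cold start), not once per request.
MODEL = load_model()


def lambda_handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Per-request work only; reuses MODEL while the environment stays warm."""
    return {"ready": MODEL["ready"], "setup_runs": INIT_CALLS}


if __name__ == "__main__":
    for _ in range(3):
        print(lambda_handler({}))  # setup_runs stays 1 across warm invocations
```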

Complex legacy systems: If you have an old monolithic application with tight dependencies on a specific OS version or stateful behavior (where the app expects the server to always be on), it can be incredibly difficult to "lift and shift" to serverless.

For the vast majority of modern applications, the benefits of serverless are overwhelming. It's not just a technical win; it's a massive business and financial win.

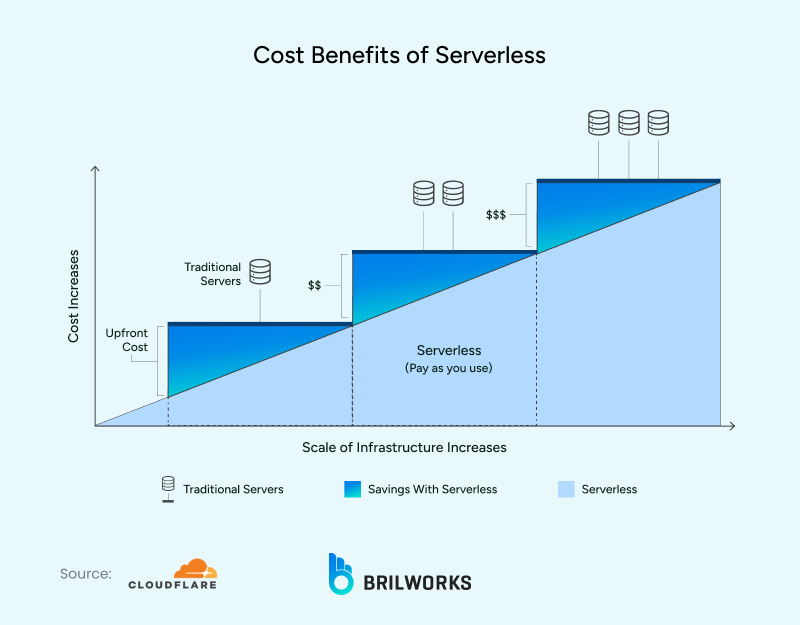

Traditional hosting forces you to pay for peak capacity, 24/7. You have to provision servers for your busiest day (like Black Friday), and that hardware sits 95% idle for the rest of the year, burning money.

With serverless, you pay only when your code is triggered. The Total Cost of Ownership (TCO) plummets.

If your app is featured on the front page of the App Store or a blog post goes viral, a traditional server will melt. Your site goes down, and you lose all that opportunity.

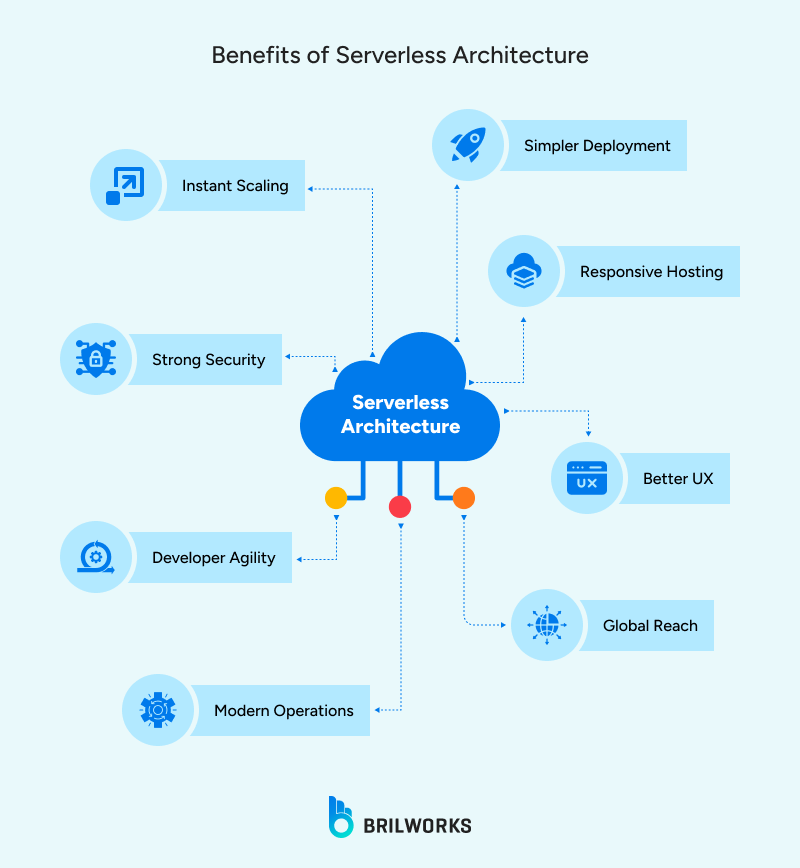

With serverless, your application adjusts automatically. It works whether you have 10 users or 10 million. The cloud provider's global infrastructure is available to you, scaling up to meet demand in milliseconds and, just as importantly, scaling back down to zero when traffic drops.

This is arguably the most important benefit. When developers stop managing infrastructure, they ship features faster. Serverless architectures also encourage teams to use a microservices approach, breaking applications into small, independent functions.

This means a small team can "own" a few functions (like user-signup and password-reset). They can update, test, and deploy those functions dozens of times a day with far less risk of breaking the rest of the application.

Want your application to be fast for users in Singapore, São Paulo, and Stockholm? With traditional hosting, you'd have to manually set up, configure, and manage data centers in Asia, South America, and Europe. This is a complex undertaking.

With serverless, you can deploy functions to edge locations around the world with a simple setup (using services like AWS Lambda@Edge or Cloudflare Workers).

Cloud providers invest billions of dollars per year in security infrastructure. When you go serverless, all of that becomes your security infrastructure, automatically.

This includes:

DDoS protection

Automatic OS and security patching

Data encryption at rest and in transit

Compliance programs covering standards like SOC 2, HIPAA, and GDPR (a massive win for finance and healthcare)

Your security responsibility is reduced. The provider secures the "cloud," and you just have to secure your "code" (e.g., writing secure code and managing function permissions). This significantly reduces your application's attack surface.
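"Managing function permissions" mostly means granting each function only what it needs. Here is a minimal Python sketch that builds a least-privilege IAM policy document for a hypothetical thumbnail function, plus a simple check for wildcard actions. The bucket names are placeholders, and a real function would also need permission to write its logs (for example via the AWS-managed `AWSLambdaBasicExecutionRole` policy).

```python
import json


def thumbnail_function_policy(source_bucket: str, dest_bucket: str) -> dict:
    """Least-privilege IAM policy: read originals from one bucket, write thumbnails to another."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{source_bucket}/*"],
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{dest_bucket}/*"],
            },
        ],
    }


def has_wildcard_action(policy: dict) -> bool:
    """Flag statements that grant every action (e.g. "s3:*" or "*"), a common over-permission."""
    for statement in policy["Statement"]:
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if any(a == "*" or a.endswith(":*") for a in actions):
            return True
    return False


if __name__ == "__main__":
    policy = thumbnail_function_policy("example-uploads", "example-thumbnails")
    print(json.dumps(policy, indent=2))
    print(has_wildcard_action(policy))  # False
```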

The market is dominated by a few key players, each with its own strengths.

AWS Lambda: Best for enterprises, complex workflows, and teams already on AWS. As the first major FaaS (Functions as a Service) provider, it's the most mature, has the largest community, and boasts the deepest ecosystem, with 200+ native service integrations. If you need your function to talk to other AWS services (S3, DynamoDB, SQS), it's a seamless, first-class experience.

Pricing: Has a generous free tier (first 1 million requests free every month, forever).

Google Cloud Functions (GCF): Best for startups, modern dev teams, and AI/data-heavy apps. GCF is known for its clean API and for being fast to get started with. Its key differentiator is its native integration with the Google ecosystem, especially Firebase, BigQuery, and Google's AI/ML platforms. If you're building a data pipeline or a smart mobile app, it's a natural fit.

Pricing: Also has a very generous free tier (first 2 million requests free monthly).

Azure Functions: Best for Microsoft-focused organizations and enterprise .NET shops. If your company runs on Windows Server, SQL Server, and Active Directory, Azure Functions is the path of least resistance, with seamless integration with these enterprise tools. Its killer feature is "Durable Functions," an extension that makes it much easier to build complex, stateful workflows (e.g., a multi-step order approval process) in a serverless way.

Pricing: Strong free tier, integrated with the wider Azure ecosystem.

Cloudflare Workers: Best for global applications needing ultra-low latency and edge logic. This one is different. It doesn't run in a single "region" (like us-east-1); it runs in all 300+ of Cloudflare's edge locations around the world. It's built on a different technology (V8 isolates rather than full containers), which gives it near-zero cold-start times. It's well suited to tasks that need to be fast everywhere, like validating API requests, modifying content at the CDN level, or running A/B tests.

Don't pick in a vacuum. The decision almost always comes down to your existing cloud investments (where does your data live?), your team's expertise (are you a .NET shop or a Python/Node.js shop?), and your specific integration needs.

You don't need to rewrite your entire company's software overnight. In fact, you shouldn't. The best approach is a gradual, strategic migration.

Look at your current infrastructure and, more importantly, your costs. What services are costing you the most to run?

Find the "low-hanging fruit." The ideal first candidates are stateless, non-critical, and event-driven.

Examples: The backend for your "Contact Us" form, an image processor that creates thumbnails when a user uploads a photo, a scheduled job (cron) that cleans up a database, or a webhook receiver that gets notifications from Stripe or Slack.
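As an example of such a first candidate, here is a minimal sketch of a webhook receiver for an HTTP trigger, assuming an API Gateway proxy-style event with a plain-text (not base64-encoded) body. The signature check follows the scheme Stripe documents for its `Stripe-Signature` header (a timestamp `t` plus `v1` HMAC-SHA256 signatures of `"{t}.{payload}"`, checked within a tolerance window); verify the details against Stripe's current documentation, and in production prefer the official library's `stripe.Webhook.construct_event` helper. `WEBHOOK_SECRET` is a hypothetical placeholder.

```python
import hashlib
import hmac
import time
from typing import Any, Optional

WEBHOOK_SECRET = "whsec_example"  # hypothetical placeholder; load from a secrets store in practice


def verify_signature(payload: str, header: str, secret: str,
                     tolerance_s: int = 300, now: Optional[int] = None) -> bool:
    """Check a Stripe-style 't=...,v1=...' header against HMAC-SHA256 of 't.payload'."""
    pairs = [p.strip().split("=", 1) for p in header.split(",") if "=" in p]
    timestamps = [value for key, value in pairs if key == "t"]
    signatures = [value for key, value in pairs if key == "v1"]
    if len(timestamps) != 1 or not signatures:
        return False
    try:
        sent_at = int(timestamps[0])
    except ValueError:
        return False
    current = int(time.time()) if now is None else now
    if abs(current - sent_at) > tolerance_s:
        return False  # reject stale or replayed deliveries
    signed_payload = f"{timestamps[0]}.{payload}".encode()
    expected = hmac.new(secret.encode(), signed_payload, hashlib.sha256).hexdigest()
    # compare_digest is designed to resist timing attacks on the comparison
    return any(hmac.compare_digest(expected.encode(), sig.encode()) for sig in signatures)


def lambda_handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Reject unsigned or tampered webhook deliveries; acknowledge valid ones quickly."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    body = event.get("body") or ""
    if not verify_signature(body, headers.get("stripe-signature", ""), WEBHOOK_SECRET):
        return {"statusCode": 400, "body": "invalid signature"}
    # Hypothetical next step: hand the event to a queue or another function.
    return {"statusCode": 200, "body": "ok"}


if __name__ == "__main__":
    body = '{"type": "payment_intent.succeeded"}'
    ts = str(int(time.time()))
    sig = hmac.new(WEBHOOK_SECRET.encode(), f"{ts}.{body}".encode(), hashlib.sha256).hexdigest()
    event = {"headers": {"Stripe-Signature": f"t={ts},v1={sig}"}, "body": body}
    print(lambda_handler(event)["statusCode"])  # 200
```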

Pick one low-risk, high-impact function from your list.

Build it using a tool like the Serverless Framework or AWS SAM to manage your configuration. Deploy it to a development/staging environment.

Load test it. Try to break it. Set up your monitoring before you go live (e.g., using Amazon CloudWatch or Datadog).

Armed with data from your successful pilot, start migrating adjacent services.

Now is the time to build your "serverless playbook."

Look at your monitoring data. Are "cold starts" a problem? If so, consider "provisioned concurrency" to keep high-priority functions "warm."

On AWS Lambda, for example, CPU power is allocated in proportion to the memory you configure. Giving a function more RAM also gives it more CPU, which can make it run faster (and sometimes cheaper, since it runs for less time). Tweak these settings to find the cost/performance sweet spot.
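Because compute is billed in GB-seconds, the sweet spot can be found with simple arithmetic once you have measured durations at a few memory sizes. The sketch below compares configurations; the measured durations are hypothetical, and the per-request charge is left out because it is the same at every memory size.

```python
def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Billed compute for one invocation: memory in GB times duration in seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def cheapest_config(measurements: dict[int, float]) -> int:
    """Return the memory size (MB) whose measured duration gives the fewest GB-seconds."""
    return min(measurements, key=lambda mb: gb_seconds(mb, measurements[mb]))


if __name__ == "__main__":
    # Hypothetical measured average durations (ms) for the same function
    measured = {512: 800, 1024: 350, 2048: 200}
    for mb, ms in measured.items():
        print(mb, "MB:", round(gb_seconds(mb, ms), 3), "GB-s")
    print("cheapest:", cheapest_config(measured), "MB")  # cheapest: 1024 MB
```

In this made-up data, doubling memory from 512 MB to 1024 MB more than halves the runtime and lowers the bill, while doubling again to 2048 MB is faster but no longer cheaper.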

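Start exploring patterns like Event Sourcing (storing a log of events instead of just the final state) or CQRS (Command Query Responsibility Segregation, which separates the write model from the read model), both of which are a natural fit for serverless.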
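The core idea of Event Sourcing fits in a few lines: state is never stored directly but rebuilt by replaying an append-only log. In a serverless system the log would typically live in a stream or table, with functions appending events and others reacting to them; the in-memory sketch below, with hypothetical `deposited`/`withdrawn` account events, shows only the replay step.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    """One immutable fact appended to the log (hypothetical account events)."""
    kind: str    # "deposited" or "withdrawn"
    amount: int  # in cents


def apply(balance: int, event: Event) -> int:
    """Return the new state after a single event."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: Iterable[Event]) -> int:
    """Rebuild current state by folding over the full event log."""
    balance = 0
    for event in events:
        balance = apply(balance, event)
    return balance


if __name__ == "__main__":
    log = [Event("deposited", 5000), Event("withdrawn", 1200), Event("deposited", 300)]
    print(replay(log))      # 4100
    print(replay(log[:2]))  # 3800: the state as of an earlier point in time
```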

Start small, measure everything, and never try to migrate a complex, monolithic application all at once.

This trend is still accelerating, and the next few years look even more exciting, with three developments in particular: AI integration at scale, edge computing everywhere, and serverless-native databases.

By one longer-range forecast, the serverless market, already a multi-billion-dollar industry, could reach $124.52 billion by 2034, reflecting its deep and widespread adoption across enterprise sectors.

Serverless architecture is more than just a way to save money; it's a fundamental shift in how we build and deliver software. It removes the infrastructure barriers, such as cost, complexity, and risk, that have historically limited innovation and scalability.

In 2025, it is easier than ever to launch an idea with a global audience, scale it instantly to millions of users, and save 50-70% on infrastructure costs while doing it.

Today’s leading companies aren’t just using serverless to cut costs. They are redesigning their systems to be event-driven, resilient, and ready to scale for any challenge. Most importantly, they are shifting their developers’ time away from server management and toward creating value for customers.

Find one non-critical service. It could be an image optimizer, an email notification handler, or a scheduled reporting job that runs at 2 AM. Deploy it as a serverless function this week. Measure the results.

Then, expand strategically. Every month you delay is a month your competitors are pulling ahead with faster development, lower costs, and better scalability. The serverless revolution is here, and it's time to join.

Get In Touch

Contact us for your software development requirements
