
The landscape of software outsourcing doesn't change overnight, but when you look back over eighteen months, the shifts become impossible to ignore. Teams that were experimenting with AI coding assistants in early 2024 are now running entire sprints where every developer has standardized tooling.

Regions that commanded premium rates two years ago are seeing pricing pressure. And the conversations happening in procurement meetings have fundamentally changed—from "How much per hour?" to "How do we measure actual delivery efficiency?"

These aren't superficial adjustments. Something deeper is reorganizing how companies source development talent, structure engagements, and evaluate what "good" actually looks like when you're building software with distributed teams.

If you're planning technology investments for the next 12-18 months, understanding these underlying currents matters more than knowing this quarter's going rates.

Eighteen months ago, AI in software development meant a few adventurous developers using ChatGPT to debug code or generate boilerplate. By mid-2025, that's no longer the story.

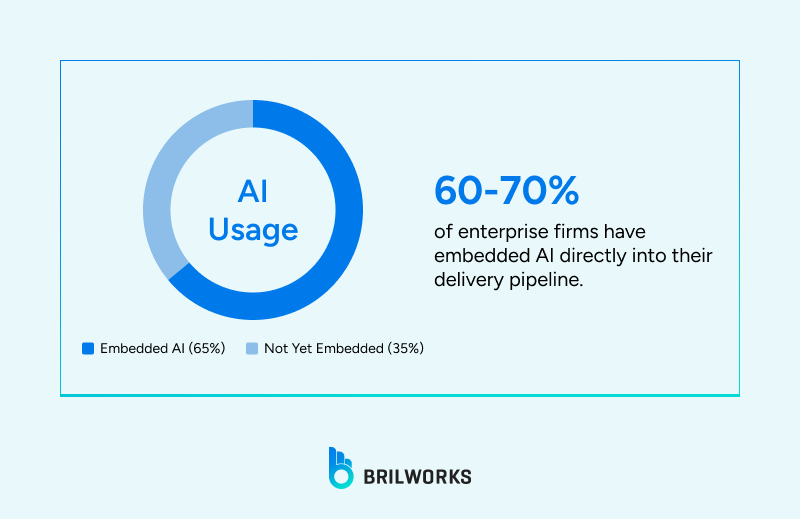

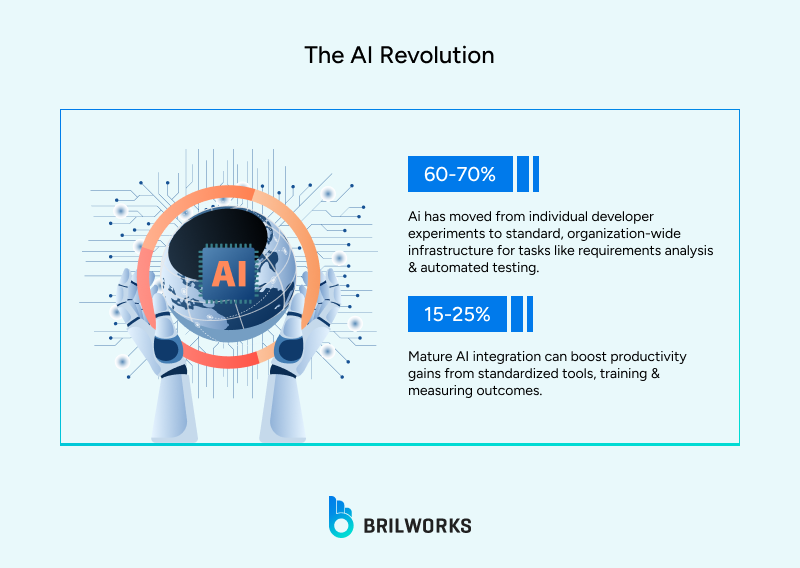

Data from enterprise software firms shows that 60-70% of them now have AI embedded across their delivery pipeline, not as a side experiment but as standard infrastructure.

What's changed is the scope of adoption. Teams aren't just using Copilot for code completion anymore. They're running AI-assisted requirements analysis, automated test generation, documentation creation, and even project tracking.

One firm we spoke with described their shift this way: "We used to have engineers experiment individually. Now we have organization-wide standards for which tools are approved, how they're used, and what governance applies."

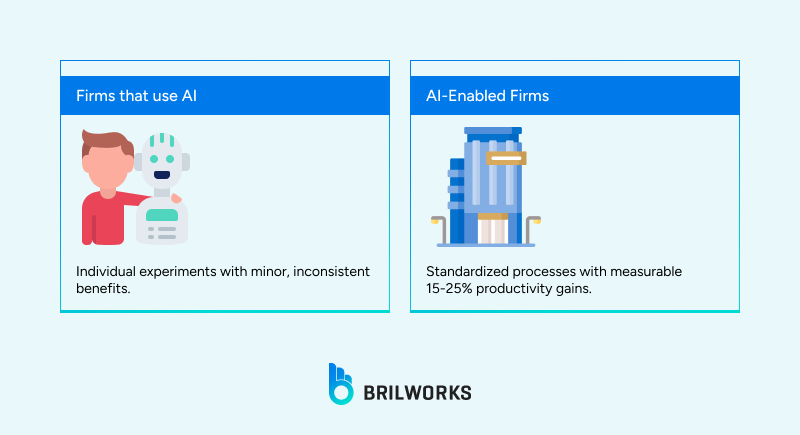

The interesting split is between firms that talk about AI adoption and firms that can actually demonstrate measurable impact. The ones seeing real productivity gains (typically in the 15-25% range) have done the unglamorous work of standardizing tools, training teams, building guardrails, and measuring outcomes.

They track metrics like code quality, defect density, and cycle time before and after AI implementation. The ones still treating it as a loose collection of individual tools report much smaller benefits and considerably more anxiety about IP protection and code quality.

This matters for outsourcing decisions because the maturity gap between "we use AI" and "we have AI-enabled delivery processes" translates directly into project outcomes. A vendor using AI without governance is introducing risk. A vendor with mature AI integration is potentially delivering 20% more throughput with the same team size, which changes the cost equation even if their nominal rates haven't budged.
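To make that cost equation concrete, here is a minimal sketch of how a throughput gain changes the effective price of a unit of delivered work. The function name and the two vendor rates are hypothetical; only the 20% throughput figure comes from the discussion above.

```python
def effective_rate(hourly_rate: float, throughput_gain: float) -> float:
    """Cost per hour of baseline-equivalent output.

    throughput_gain is a fraction: 0.20 means the team delivers 20% more
    output per billed hour than a baseline team.
    """
    if throughput_gain <= -1.0:
        raise ValueError("throughput_gain must be greater than -1.0")
    return hourly_rate / (1.0 + throughput_gain)


# Hypothetical vendors: A charges more but has mature AI-enabled delivery,
# B charges less and delivers at baseline throughput.
vendor_a = effective_rate(hourly_rate=50.0, throughput_gain=0.20)
vendor_b = effective_rate(hourly_rate=45.0, throughput_gain=0.0)

print(f"Vendor A effective rate: {vendor_a:.2f}")
print(f"Vendor B effective rate: {vendor_b:.2f}")
```

With these illustrative inputs, the vendor quoting $50 per hour works out to about $41.67 per hour of baseline-equivalent output, which undercuts the vendor quoting $45.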

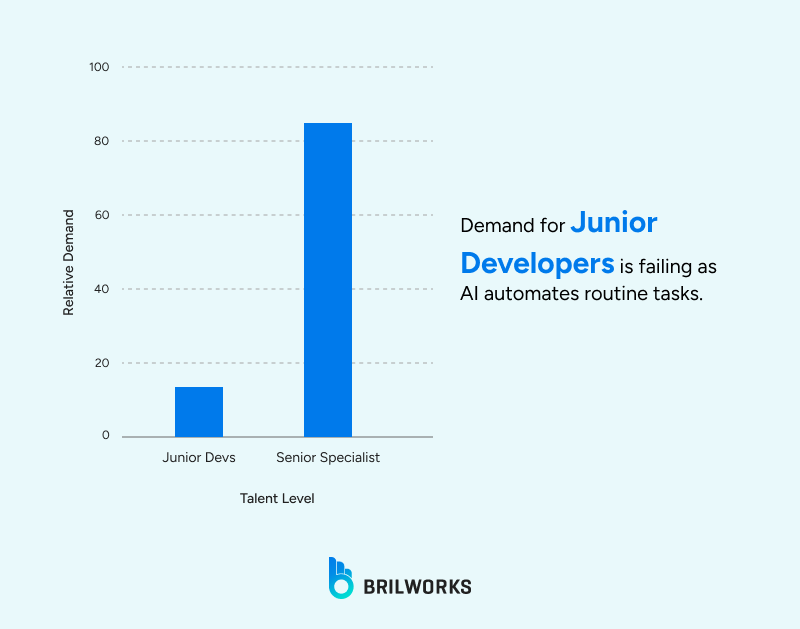

The job market for software talent is experiencing something unusual: simultaneous contraction at the junior level and acute scarcity at the senior specialist level.

Multiple firms across regions report they're hiring fewer entry-level engineers than they did two years ago, largely because AI has automated many of the tasks that traditionally went to junior developers.

At the same time, demand for senior engineers with specific expertise is intensifying. The roles seeing the fastest growth aren't traditional developer positions; they're hybrid roles that blend technical depth with AI fluency or domain specialization.

Prompt Engineer, LLM Integration Specialist, and AI Governance Lead weren't real job titles 24 months ago. Now they're showing up in hiring plans across Latin America, Eastern Europe, and South Asia.

What's driving this isn't just AI hype. It's the practical reality that as development teams adopt more sophisticated tooling, the complexity shifts from writing code to architecting systems, ensuring compliance, and making good decisions about what to build and how.

For companies evaluating outsourcing partners, this creates a new evaluation dimension. The question isn't just "Do you have developers?" It's "Do you have developers with the specific mix of domain expertise, AI proficiency, and architectural judgment our project actually needs?" And increasingly, the answer is no, unless the vendor has been actively upskilling their team and hiring for these hybrid roles.

On the surface, global outsourcing rates look stable or slightly declining. Latin America's median rate dropped about 7% year-over-year, Asia's fell roughly 8%, and Europe's declined around 4%. That sounds like good news for buyers: more capacity at lower prices.

The reality underneath those numbers is more textured. In Latin America, the rate decline reflects a specific dynamic: minimum wage increases across several countries pushed entry-level costs up, while senior developer salaries cooled after years of rapid growth.

The result is margin compression for vendors, and smart buyers are watching how firms respond. Are they cutting corners on quality? Reducing training budgets? Shifting to more junior teams to preserve margins? Or are they maintaining senior talent by becoming more selective about the projects they take?

Asia's rate decline tells a different story. The region, particularly South Asia, has always competed on price: massive talent pools, aggressive competition, and maturing remote work infrastructure keep rates low. But there's increasing bifurcation between commodity development shops and firms investing in premium capabilities.

The vendors competing purely on price are seeing rates compress further. The vendors building specialized expertise in AI, cloud architecture, or regulated industries are maintaining or even increasing rates because they're offering something that pure arbitrage can't deliver.

Europe's smaller rate decline masks significant regional variation. Western Europe remains expensive, but Central and Eastern European (CEE) hubs such as Poland, Romania, and the Czech Republic continue to offer strong value for teams that need process maturity and regulatory fluency.

The complication is geopolitical. The ongoing uncertainty around Ukraine and broader European security concerns is making some buyers hesitant about CEE vendors, despite their technical capabilities and reasonable pricing.

The broader implication is that regional averages are becoming less meaningful. The range within each region, between commodity vendors and specialized firms, is widening.

Choosing a partner based on regional stereotypes (Asia is cheap, and Europe is expensive) misses the real value question: What capabilities do you actually need, and which vendors can deliver them regardless of where they're located?

There's a concept that's been gaining traction in outsourcing evaluation: total cost of development. It's distinct from project cost or hourly rate, and it's become the lens through which sophisticated buyers assess vendors.

Total cost of development accounts for everything that actually drives expense: requirements quality, team experience, process maturity, communication efficiency, rework rates, defect density, and opportunity cost from delays.

When you model these factors properly, something counterintuitive emerges: the cheapest hourly rate almost never produces the lowest total cost.

The reason is simple. Software development is complex work, and complexity punishes immature processes ruthlessly. A team with weak requirements discipline will build features that get scrapped.

A team with poor testing automation will ship bugs that take weeks to fix.

A team with inadequate communication structure will spend days waiting for answers to simple questions.

All of this burns hours that appear on the invoice but generate no value.

Recent analysis of hundreds of software projects shows that vendors in the lowest rate quartile consistently require 40-60% more total hours to deliver the same scope as higher-rate vendors with mature processes.

Factor in the rework, the delays, the opportunity cost of missed market windows, and the "bargain" rate produces total costs 2-3x higher than the expensive alternative.
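The arithmetic behind that claim can be sketched in a few lines. This is an illustrative model, not the methodology behind the analysis cited above: the `VendorScenario` fields, the rates, the rework fractions, and the weekly delay cost are all hypothetical inputs chosen to show how the ordering can flip.

```python
from dataclasses import dataclass


@dataclass
class VendorScenario:
    name: str
    hourly_rate: float       # quoted rate in dollars per hour
    hours_multiplier: float  # 1.0 = baseline hours; 1.5 = 50% more hours for the same scope
    rework_fraction: float   # share of billed hours that must be redone
    delay_weeks: float       # schedule slip relative to plan


def total_cost(v: VendorScenario, baseline_hours: float, weekly_delay_cost: float) -> float:
    """Labor cost including rework, plus the opportunity cost of delay."""
    billed_hours = baseline_hours * v.hours_multiplier
    rework_hours = billed_hours * v.rework_fraction
    labor_cost = (billed_hours + rework_hours) * v.hourly_rate
    delay_cost = v.delay_weeks * weekly_delay_cost
    return labor_cost + delay_cost


# All figures below are made up for illustration.
low_rate = VendorScenario("low-rate vendor", 30.0, 1.5, 0.25, 8.0)
mature = VendorScenario("mature vendor", 55.0, 1.0, 0.05, 0.0)

for vendor in (low_rate, mature):
    cost = total_cost(vendor, baseline_hours=2000, weekly_delay_cost=15000)
    print(f"{vendor.name}: {cost:,.0f}")
```

With these inputs the low-rate vendor ends up at roughly twice the total cost of the mature vendor, even though its quoted rate is a little over half as much. Note that on billed hours alone the two are close; it is the delay term that opens the gap, which is why the delay cost assumption deserves the most scrutiny in any real comparison.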

The shift happening now is that more buyers are asking vendors to demonstrate process maturity, not just quote rates.

They want to see ISO certifications, CMMI assessments, CI/CD pipeline automation, defect tracking data, and rework percentages. The vendors who can't provide this data are getting filtered out earlier in the evaluation process, regardless of how attractive their hourly rates look.

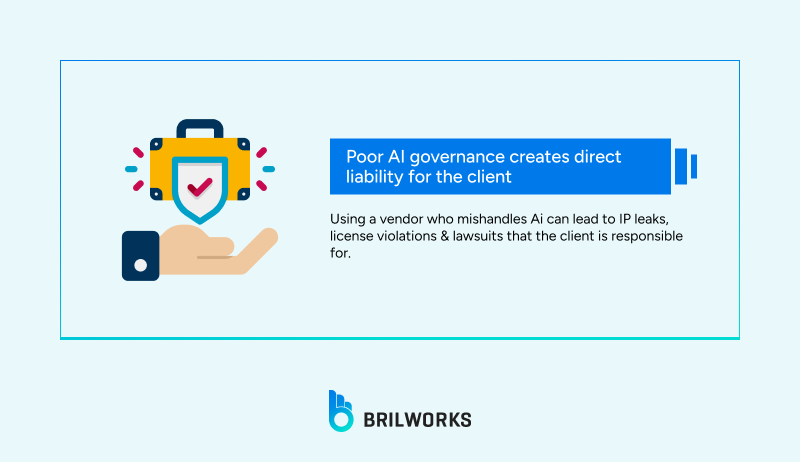

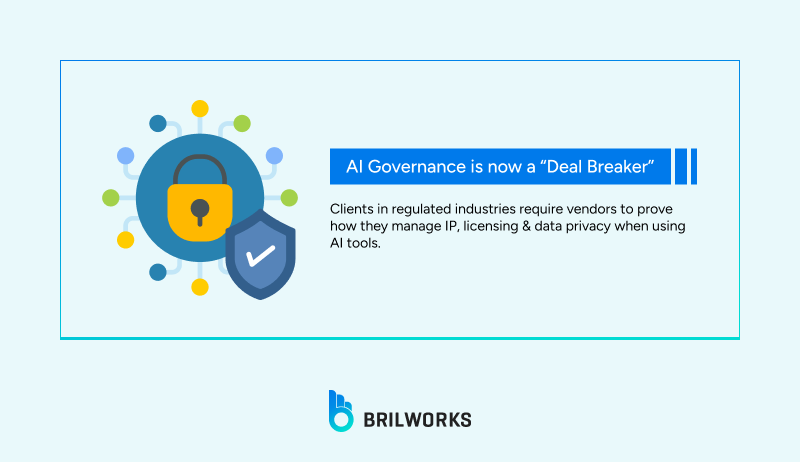

As AI adoption accelerates, governance has moved from "nice to have" to "deal breaker." Firms building AI-enabled products or using AI in their delivery process are facing tough questions from clients and regulators: Where does your training data come from?

How do you ensure AI-generated code doesn't violate licenses? What happens to sensitive IP when it passes through AI tools? How do you audit AI decisions for bias or errors?

The vendors ahead of this curve have built comprehensive governance frameworks. They enforce code provenance, tracking where every line of code originates. They run automated license scanning in CI/CD pipelines to catch violations before deployment.

They use private or contractually protected AI environments for sensitive client work, never feeding proprietary code into public models. They document everything, conduct regular bias and risk assessments, and maintain transparency with clients about what AI is doing and what humans are reviewing.
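As a rough idea of what an automated license check might look like, the sketch below compares a dependency-to-license mapping against an allowlist. Everything here is hypothetical: the allowlist, the package names, and the function are illustrative, and a real pipeline would typically rely on a dedicated scanning tool and a legal-approved policy rather than a hand-rolled script.

```python
# Hypothetical policy: licenses this project is allowed to depend on.
ALLOWED_LICENSES = frozenset({"MIT", "Apache-2.0", "BSD-3-Clause"})


def find_license_violations(
    dependencies: dict[str, str],
    allowed: frozenset[str] = ALLOWED_LICENSES,
) -> list[str]:
    """Return the names of dependencies whose license is not on the allowlist."""
    return sorted(
        name for name, license_id in dependencies.items()
        if license_id not in allowed
    )


# Made-up dependency manifest mapping package name to SPDX-style license ID.
manifest = {
    "example-http-client": "Apache-2.0",
    "example-date-utils": "MIT",
    "example-copyleft-lib": "GPL-3.0-only",
}

violations = find_license_violations(manifest)
if violations:
    print("License policy violations:", ", ".join(violations))
else:
    print("All dependencies comply with the license policy.")
```

In a CI job, a non-empty result would normally fail the build so the violation is caught before deployment.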

The vendors behind the curve are winging it. They've given developers access to AI tools without clear policies about what's permitted, how to handle sensitive data, or how to validate AI output. Some don't even realize they're creating compliance risk. They genuinely believe that if the AI wrote it, it's automatically safe to ship.

This governance gap is becoming a vendor selection filter. Companies in regulated industries are explicitly asking about AI governance in RFPs. They're requiring vendors to demonstrate compliance with frameworks like GDPR, HIPAA, and emerging AI-specific regulations. Vendors without clear answers are getting disqualified regardless of technical capabilities or pricing.

Even companies in non-regulated industries are paying attention, because the IP risks are real. If your vendor uses AI carelessly and ships code that violates someone else's license, you own that problem.

If they expose your proprietary algorithms to a public AI model that uses them for training, your competitive advantage just got leaked. The lawsuits are already happening, and the pattern is clear: poor AI governance creates liability that flows upward to the client.

One of the quieter but more consequential trends in outsourcing is the growing sophistication around estimation. The best vendors are no longer just quoting hours based on a brief requirements doc; they're using structured methodologies that account for solution complexity, ecosystem integration, team experience, and built-in contingency.

Good estimation matters because software projects live or die based on whether expectations align with reality. Underestimate by 30%, and you're either going back to ask for more budget (which damages trust) or cutting scope and quality to hit the number (which damages the product). Overestimate significantly, and you've misallocated capital that could have gone to other priorities.

The vendors doing this well use quantitative models that incorporate hard data: team composition, time zone overlap, technology stack risk, integration complexity, and historical productivity metrics.

They also factor in qualitative assessments: how well-defined are the requirements, how experienced is the client team, how mature is the collaboration process. Then they model contingency (typically 15-20% for well-scoped projects, rising to 30-35% for ambiguous or high-risk initiatives).
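The contingency step is simple arithmetic, and a small sketch shows how the bands above turn a base estimate into a range. The band names, the function, and the 1,000-hour base estimate are hypothetical; the percentages are the ones quoted above.

```python
# Contingency bands from the text, expressed as (low, high) fractions.
CONTINGENCY_BANDS = {
    "well_scoped": (0.15, 0.20),
    "high_risk": (0.30, 0.35),
}


def estimate_with_contingency(base_hours: float, risk_profile: str) -> tuple[float, float]:
    """Return the (low, high) effort range after applying contingency."""
    if risk_profile not in CONTINGENCY_BANDS:
        raise ValueError(f"unknown risk profile: {risk_profile!r}")
    low, high = CONTINGENCY_BANDS[risk_profile]
    return base_hours * (1.0 + low), base_hours * (1.0 + high)


# Hypothetical base estimate of 1,000 hours before contingency.
for profile in CONTINGENCY_BANDS:
    low_hours, high_hours = estimate_with_contingency(1000, profile)
    print(f"{profile}: {low_hours:.0f} to {high_hours:.0f} hours")
```

For a 1,000-hour base estimate, that gives roughly 1,150 to 1,200 hours for a well-scoped project and 1,300 to 1,350 hours for an ambiguous or high-risk one. A quote that sits well below the relevant range is worth questioning.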

What separates this approach from traditional estimation is that it produces apples-to-apples comparisons. You can benchmark an internal team against an offshore vendor, or compare two vendors in different regions, and see not just the nominal cost difference but the total cost including all the hidden inefficiencies.

This level of transparency is uncomfortable for vendors who've relied on lowball estimates to win deals, but it's enormously valuable for buyers trying to make rational decisions.

The broader pattern is that as AI and automation make the actual coding faster, the premium shifts to the things that can't be automated: clear thinking about requirements, smart architectural decisions, realistic planning, and effective collaboration.

Vendors who've invested in estimation discipline are winning deals even when they're not the cheapest option, because buyers are learning, often the hard way, that cheap estimates are usually wrong estimates.

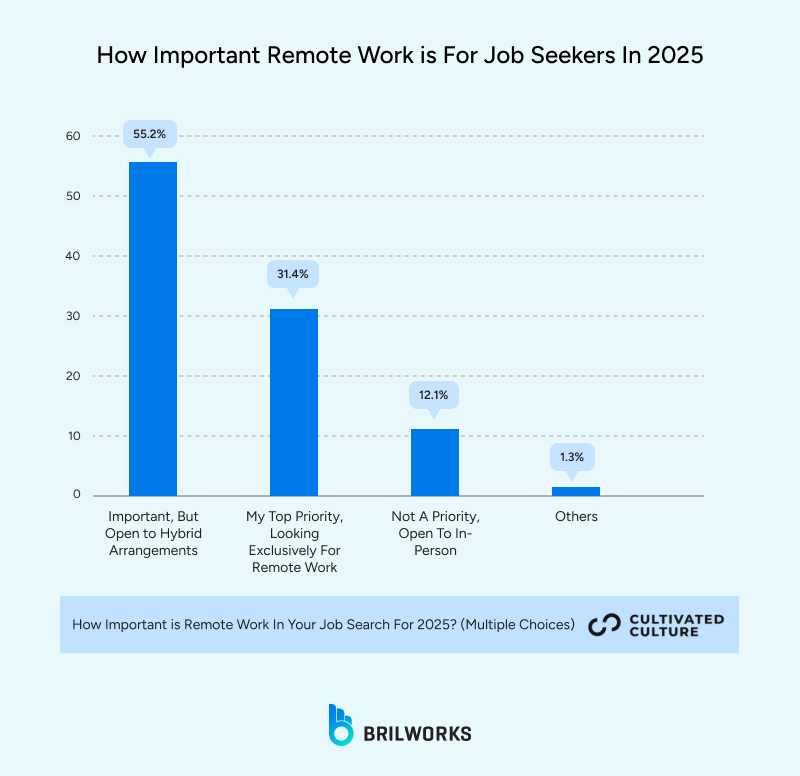

The arbitrage model that built the outsourcing industry is still alive, but it's under pressure from multiple directions. The biggest disruptor is that remote work has gone from experimental to default, and that's flattened access to talent in ways that weren't possible pre-pandemic.

If you're a company in San Francisco, you no longer need an outsourcing vendor to access developers in Mexico City or Krakow or Bangalore. You can hire them directly as remote employees. The platforms facilitating this—Deel, Remote, Oyster—have removed most of the legal and payroll friction that used to make direct hiring impractical. As a result, the pure labor arbitrage play is less compelling than it was five years ago.

This doesn't mean outsourcing is dead; far from it. What it means is that outsourcing vendors need to offer more than just access to cheaper talent. The ones thriving are delivering things that direct hiring can't easily replicate: fully formed teams with established collaboration patterns, proven delivery processes, quality assurance infrastructure, project management discipline, and the ability to scale up or down without the commitment of permanent headcount.

One CTO described the shift this way: "We can hire individual remote developers easily now. What we can't easily do is spin up a cohesive eight-person team with a tech lead, senior developers, QA, and a project manager who've already worked together and know how to deliver. That's what we're buying when we engage an outsourcing partner, not just bodies, but a functional unit with proven dynamics."

The vendors adapting to this reality are emphasizing team cohesion, institutional knowledge, and delivery track record. They're positioning themselves less as staff augmentation and more as strategic capability partners—companies you engage because they can deliver outcomes, not just provide resumes.

The vendors still selling primarily on hourly rate arbitrage are facing commoditization and price pressure as direct hiring and global talent platforms eat into their traditional market.

If you're evaluating outsourcing partners for projects kicking off in the next 12-18 months, the landscape described above creates specific implications for how you structure your search and selection process.

First, lead with the problem you're trying to solve, not the budget you're trying to hit. Define the technical capabilities, domain expertise, and delivery maturity you actually need, then evaluate vendors against those criteria before you even look at pricing.

The vendors who can demonstrate relevant experience, mature processes, and clear AI governance will almost certainly cost more per hour than bottom-tier alternatives, but they'll likely cost less in total and deliver faster with fewer defects.

Second, ask detailed questions about AI adoption—not just whether they use it, but how they've operationalized it. Request specifics: Which tools are standardized? What's your code provenance process?

How do you handle IP in AI-generated code? What metrics have changed since you implemented AI workflows? Vendors with real AI maturity will have concrete answers. Vendors faking it will give you buzzwords and promises.

Third, pressure-test their estimation methodology. Ask them to walk you through how they calculate effort, what assumptions they're making, and what contingency they've built in.

Good vendors will show you their model. Weak vendors will deflect or give you a handwave estimate. The quality of their estimation process is one of the most reliable predictors of whether the project will hit its budget and timeline.

Fourth, evaluate their governance and compliance infrastructure, especially if you're in a regulated industry or dealing with sensitive IP. Ask about certifications, security frameworks, data handling policies, and audit processes. If they can't demonstrate mature governance, walk away—the short-term cost savings aren't worth the long-term risk.

Finally, think in terms of partnerships, not transactions. The vendors who'll deliver the most value over time are the ones you can collaborate with, trust to tell you hard truths, and rely on to adapt as requirements evolve. That relationship quality matters more than squeezing another $5 per hour out of the rate negotiation.

The software outsourcing market in 2026 rewards buyers who go deep on evaluation, ask sophisticated questions, and optimize for total cost and delivery quality rather than sticker price. The vendors who'll succeed are the ones who've invested in AI maturity, process discipline, governance infrastructure, and specialized expertise—and can prove it with data, not just marketing claims. Everything else is noise.

Get In Touch

Contact us for your software development requirements
